Introduction

GenerativeAgent is the foundational AI system that powers ASAPP’s Customer Experience Platform (CXP), a platform that orchestrates end-to-end customer experiences across chat and voice through a unified, open architecture.

GenerativeAgent’s security is built around three pillars: context, strategy, and execution.

Our context (sourced from regulatory requirements, our understanding of the risks and threat landscape, and customer expectations) shapes a strategy that keeps GenerativeAgent on secure rails: strict scope, grounded answers, privacy-first data handling with Zero Data Retention (ZDR), and defense-in-depth with classical security controls.

Execution delivers these commitments through robust input and output guardrails, strong authorization boundaries, best-in-class redaction, a mature Secure Development Lifecycle (SDLC), real-time monitoring, and independent testing. This enables the efficiency and quality gains of generative AI while protecting data, brand, and reliability at scale.

Context: What shapes our approach

Regulations and standards establish the baseline (e.g., GDPR, CCPA; sectoral regimes like HIPAA and PCI when applicable; SOC 2 and ISO standards). We also align with emerging guidance, including the NIST AI Risk Management Framework, and plan future alignment with ISO 42001 for AI.

Our risk perspective integrates novel AI threats (e.g., prompt injection, model manipulation, grounding failures) with classical security concerns (e.g., identity and access, multi-tenant isolation, software supply chain, operational resilience).

Customer expectations are consistent across the market: prevent data exposure from AI-specific attacks, ensure reliable and on-brand outputs, guarantee safe handling with foundational LLMs (no training on customer data and no storage of sensitive data by LLM providers), and demonstrate robust non-AI security fundamentals.

These three inputs are typically aligned and mutually reinforcing.

Strategy: On the rails by design

We do not rely solely on model "good behavior." GenerativeAgent operates within a tightly defined customer service scope enforced in software and at the API layer. Responses must be grounded in approved sources, not speculation. Privacy-by-design is foundational: we apply ZDR with third-party LLM services and aggressive redaction after model use and before storing any transcripts. Customers can also choose not to store any call recordings or transcripts, depending on their data retention preferences. Controls are layered:

- Input screening and verification

- Session-aware monitoring

- Output verification

- Strict authorization

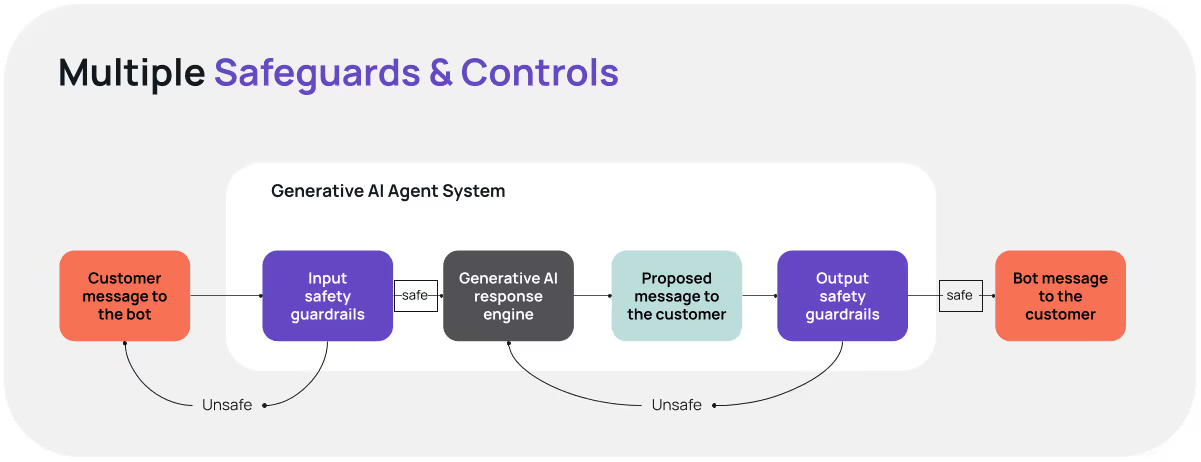

Unlike traditional software, generative AI does not offer deterministic guarantees, so we implement multiple safeguards and controls.

There is no single point of failure. Security is embedded from design through operations ("shift left") and validated continuously by independent experts.

Execution: Delivering on customer priorities

1. Preventing data exposure from novel AI threats

Addressing the risk of AI Misuse, mainly injection attacks:

Before GenerativeAgent is allowed to do anything (retrieve knowledge, call a function, or execute an API action such as canceling a flight), inputs pass through well-tested guardrails. Deterministic rules stop known attack patterns; ML classifiers detect obfuscated or multi-turn "crescendo" attacks; session-aware monitoring flags anomalies and can escalate to a human.
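The three input layers described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration, not ASAPP's implementation: the `KNOWN_ATTACKS` patterns, the keyword-based `classifier_score` stand-in for an ML classifier, the thresholds, and the `Session` type are all hypothetical.

```python
import re
from dataclasses import dataclass

# Hypothetical deny-list; a production rule set would be far larger.
KNOWN_ATTACKS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]
RISK_THRESHOLD = 0.5  # assumed classifier cut-off
ESCALATE_AFTER = 2    # assumed number of flagged turns before human handoff


@dataclass
class Session:
    flagged_turns: int = 0  # running count of suspicious turns in this conversation


def classifier_score(text: str) -> float:
    """Toy stand-in for an ML classifier; returns a risk score in [0, 1]."""
    hints = ("pretend", "roleplay", "bypass", "jailbreak")
    hits = sum(1 for hint in hints if hint in text.lower())
    return min(1.0, hits / 2)


def screen_input(text: str, session: Session) -> str:
    """Return 'allow', 'block', or 'escalate' for one user turn."""
    # Layer 1: deterministic rules for known attack patterns.
    rule_hit = any(p.search(text) for p in KNOWN_ATTACKS)
    # Layer 2: classifier for obfuscated attempts.
    risky = classifier_score(text) >= RISK_THRESHOLD
    if rule_hit or risky:
        session.flagged_turns += 1
    # Layer 3: session-aware monitoring; repeated flags hand off to a human.
    if session.flagged_turns >= ESCALATE_AFTER:
        return "escalate"
    return "block" if (rule_hit or risky) else "allow"
```

In this sketch the screening decision is made before any retrieval or API action runs, and the per-session counter is what lets a multi-turn pattern escalate even when each single turn would only be blocked.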

Even if a prompt confuses the model, strict runtime authorization prevents data exposure: every call is tied to the authenticated user, checked for scope at runtime, and isolated by customer with company markers. Cross-customer contamination is prevented by design.
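A runtime authorization check of this kind could look roughly like the sketch below. The `Caller` and `Record` types, the scope strings, and the `company_id` field (standing in for the "company markers" mentioned above) are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class Caller:
    user_id: str             # the authenticated end user
    company_id: str          # hypothetical tenant marker
    scopes: FrozenSet[str]   # actions this session is allowed to perform


@dataclass(frozen=True)
class Record:
    owner_id: str
    company_id: str
    payload: str


class AuthorizationError(Exception):
    """Raised when a call falls outside the caller's scope or tenant."""


def authorize(caller: Caller, required_scope: str, record: Record) -> Record:
    """Check every data access at call time, regardless of what the model asked for."""
    if required_scope not in caller.scopes:
        raise AuthorizationError("scope not granted")
    if record.company_id != caller.company_id:
        raise AuthorizationError("cross-tenant access")
    if record.owner_id != caller.user_id:
        raise AuthorizationError("record belongs to another user")
    return record
```

The point of the design is that the check depends only on the authenticated caller and the requested record, so a manipulated prompt cannot widen what the call is allowed to return.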

2. Ensuring output reliability and brand safety

Addressing the risk of AI Malfunction, mainly harmful hallucinations:

Grounding is the primary control against hallucinations: the AI agent answers only when it can cite live APIs, current knowledge bases, or other verified sources. When information is insufficient, it asks for clarification or hands off; it does not guess. We generate the complete response and then apply output guardrails to verify it is safe to return to the end user. These checks detect toxic or off-brand language, prompt or system-instruction leakage, and inconsistencies with the underlying data. Failures trigger retry with guidance, blocking, or escalation to a human.
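The generate-then-verify flow described above can be sketched as a small loop. This is an illustration under stated assumptions: the three checks are crude string-based stand-ins, and the `ctx` keys (`system_prompt`, `required_facts`, `banned_terms`), the `generate` callable, and the retry limit are all hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# A check returns a failure reason, or None if the response passes.
Check = Callable[[str, dict], Optional[str]]


def off_brand(response: str, ctx: dict) -> Optional[str]:
    banned = ctx.get("banned_terms", ())
    return "off-brand language" if any(t in response.lower() for t in banned) else None


def prompt_leak(response: str, ctx: dict) -> Optional[str]:
    prompt = ctx.get("system_prompt", "")
    return "system prompt leakage" if prompt and prompt in response else None


def inconsistent(response: str, ctx: dict) -> Optional[str]:
    # Crude stand-in: every fact from the source data must appear verbatim.
    missing = [f for f in ctx.get("required_facts", ()) if f not in response]
    return "inconsistent with source data" if missing else None


CHECKS: List[Check] = [off_brand, prompt_leak, inconsistent]


def guarded_reply(
    generate: Callable[[Optional[str]], str], ctx: dict, max_retries: int = 1
) -> Tuple[str, Optional[str]]:
    """Generate a full response, verify it, retry with guidance, else escalate."""
    guidance = None
    for _ in range(max_retries + 1):
        response = generate(guidance)
        failures = [reason for check in CHECKS if (reason := check(response, ctx))]
        if not failures:
            return ("send", response)
        # A failed response is never returned; the reasons guide the retry.
        guidance = "Avoid: " + "; ".join(failures)
    return ("escalate", None)
```

Because the checks run on the complete response rather than on a partial stream, a response that fails is withheld in full and only the failure reasons are fed back into the next attempt.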

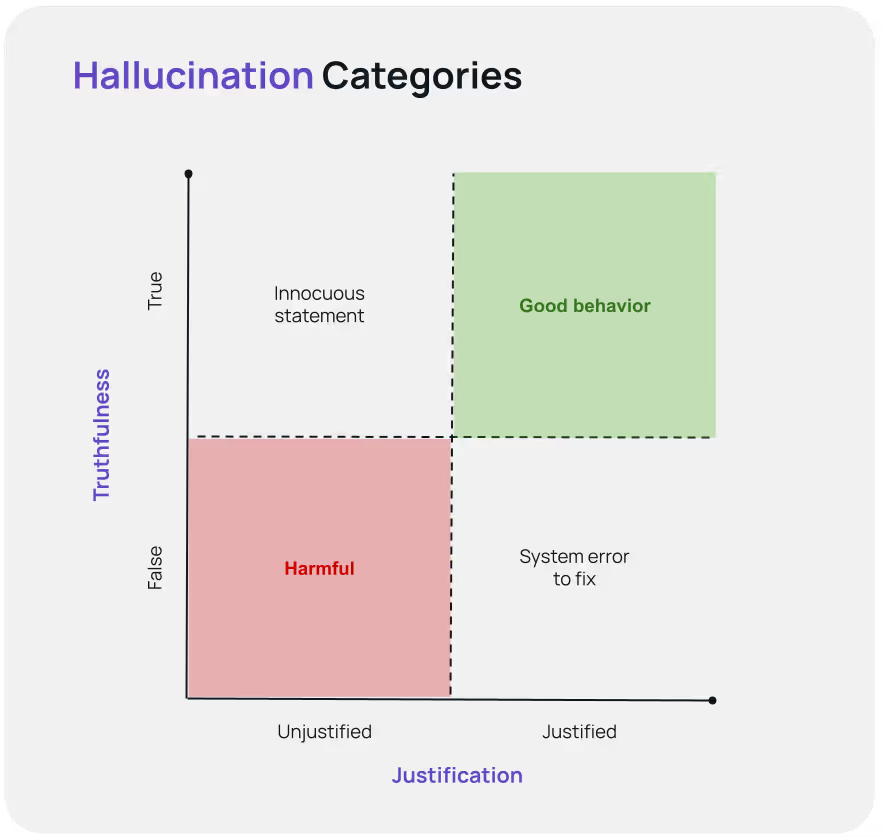

Not all hallucinations are harmful. We classify any hallucination by type and severity to prioritize reductions in harmful, brand-impacting cases.

Using two axes, whether a response is justified (grounded in the information provided) and whether it is true, we can classify responses into four categories:

- Justified and True: The ideal scenario where the AI’s response is both correct and based on available information.

- Justified but False: The AI’s response is based on outdated or incorrect information. This can be fixed by improving the information available. For example, if an API response is ambiguous, it can be updated so that it is clear what it is referring to.

- Unjustified but True: The AI’s response is correct but not based on the information it was given. For example, the AI might tell a customer to arrive at the airport two hours before departure. If that advice was not grounded in a source such as a knowledge base article, it is technically a hallucination even though it is true.

- Unjustified and False: The worst-case scenario where the AI’s response is both incorrect and not based on any available information. These are harmful hallucinations that could require an organization to reach out to the customer to fix the mistake.
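The four categories above form a two-by-two grid, which can be written down as a small lookup. In this sketch the `justified` and `is_true` flags are assumed to come from a reviewer or an evaluation step; how they are determined is outside the sketch, and the names are illustrative.

```python
from enum import Enum


class ResponseCategory(Enum):
    JUSTIFIED_TRUE = "Justified and True"
    JUSTIFIED_FALSE = "Justified but False"
    UNJUSTIFIED_TRUE = "Unjustified but True"
    UNJUSTIFIED_FALSE = "Unjustified and False"


def categorize(justified: bool, is_true: bool) -> ResponseCategory:
    """Map the two axes (grounded in given information, factually correct) to a category."""
    if justified:
        return ResponseCategory.JUSTIFIED_TRUE if is_true else ResponseCategory.JUSTIFIED_FALSE
    return ResponseCategory.UNJUSTIFIED_TRUE if is_true else ResponseCategory.UNJUSTIFIED_FALSE
```

For the airport example, a correct but ungrounded answer would be `categorize(justified=False, is_true=True)`, which yields `ResponseCategory.UNJUSTIFIED_TRUE`.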

3. Safe data handling with foundational LLMs

Addressing customer concerns about their data being used to train models for others or stored in an LLM provider’s cloud:

We meet this with best-in-class redaction and ZDR. Based on customer requirements, our redaction engine can remove PII and sensitive content in real time for voice and chat, and again during post-processing for stored data. It is multilingual, context-aware, and customer-tunable so masking aligns with each client’s policies and jurisdictions.
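To make the idea of customer-tunable masking concrete, here is a deliberately simplified sketch. It is not how the redaction engine described above works: that engine is described as multilingual and context-aware, whereas this example uses two plain regular expressions. The `PATTERNS` table, the entity names, and the `policy` parameter are assumptions for illustration.

```python
import re
from typing import Iterable

# Hypothetical entity patterns; real detection would not rely on regexes alone.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str, policy: Iterable[str]) -> str:
    """Mask only the entity types enabled in the customer's policy."""
    enabled = set(policy)
    for name, pattern in PATTERNS.items():
        if name in enabled:
            text = pattern.sub(f"[{name}]", text)
    return text


message = "Email jane@example.com, card 4111 1111 1111 1111."
print(redact(message, {"EMAIL", "CARD"}))  # Email [EMAIL], card [CARD].
print(redact(message, {"EMAIL"}))          # Email [EMAIL], card 4111 1111 1111 1111.
```

The same function could be applied once before text reaches a model and again before anything is stored, which mirrors the real-time and post-processing passes described above.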

We apply zero data retention with third-party LLMs such as GPT models: prompts and completions are not used to train LLMs across customers and are not stored beyond processing and critical internal needs. Data remains segregated and encrypted throughout our pipeline.

4. Foundational (non-AI) security that carries most of the risk

Our most sophisticated customers recognize that much of GenerativeAgent’s risk is classic, not specific to AI. It’s about protecting the integrity and confidentiality of data.

We operate a mature, secure development lifecycle with software composition analysis, static and dynamic code analysis, secrets management, and enforced quality gates through architecture and design reviews. We maintain least-privilege access, strong cloud tenant isolation, and encryption in transit and at rest.

Independent firms (e.g., Mandiant, Atredis Partners) conduct recurring penetration tests, including AI-specific abuse scenarios: prompt injection, function-call confusion, privilege escalation, multi-tenant leakage, and regressions. Findings feed directly into code, prompts, runtime policies, and playbooks. We monitor production in real time, retain only necessary operational data, and maintain disaster recovery for AI subsystems.

We are developing a Continuous Red Teaming capability that enables ongoing adversarial evaluations against GenerativeAgent, rather than relying solely on scheduled, point-in-time assessments and red team exercises.

How ASAPP stands out in security and compliance

How do ASAPP’s security and compliance capabilities measure against other AI-driven customer experience providers?

Our strongest differentiator is not a single certification or security capability, but the trust earned from years of securing data for large, risk‑averse enterprises.

That track record (end‑to‑end security reviews, successful audits, and durable relationships in regulated sectors) consistently carries weight with enterprise buyers and shortens the path to approval.

ASAPP’s current posture is enterprise‑grade: SOC 2 Type II, PCI DSS coverage for payment workflows, and GDPR/CCPA alignment, underpinned by strong encryption (AES‑256 at rest, TLS in transit) and recurring third‑party testing and audits. For AI risk, we apply production guardrails that mitigate prompt injection and hallucinations and use robust redaction to reduce data exposure. These controls make ASAPP well suited for high‑stakes contact center use in financial services and other regulated industries.

Why secure generative AI matters for the business

Secure generative AI is more than compliance: it’s risk management, trust, and growth.

By building security and privacy in from the start, we harden our posture and increase resilience. Controls like strict tenant isolation, zero data retention, and runtime guardrails prevent exposure and enable systems to fail safely.

These safeguards streamline compliance and audit readiness, aligning with data protection laws and emerging AI governance. They build customer confidence, shorten procurement cycles, and open doors for enterprise customers.

Security also drives efficiency. Guardrails keep outputs on‑brand; early detection lowers remediation and engineering effort; fewer incidents mean steadier, more scalable operations.

As GenerativeAgent becomes core to customer operations, safe scale requires auditable controls, not promises. Continuous validation against standards turns security and trust into competitive advantages, protecting data and reliability while enabling sustainable growth.