The promise of AI for contact center operations, especially through a generative AI agent, is clear: more capacity, a better customer experience, and lower cost to serve. But the value you actually realize depends heavily on performance, safety, and flexibility. That makes choosing the right AI agent for your business critical.

We want to be candid here. We want ASAPP to be the answer. We would love for you to choose GenerativeAgent® as your AI agent for customer service. But only if that’s the right choice for your business. With that in mind, here’s our best advice on what to consider as you decide.

This buyer’s guide is extracted from the “Should you buy an AI agent or build your own?” eBook.

What does a robust AI agent solution include?

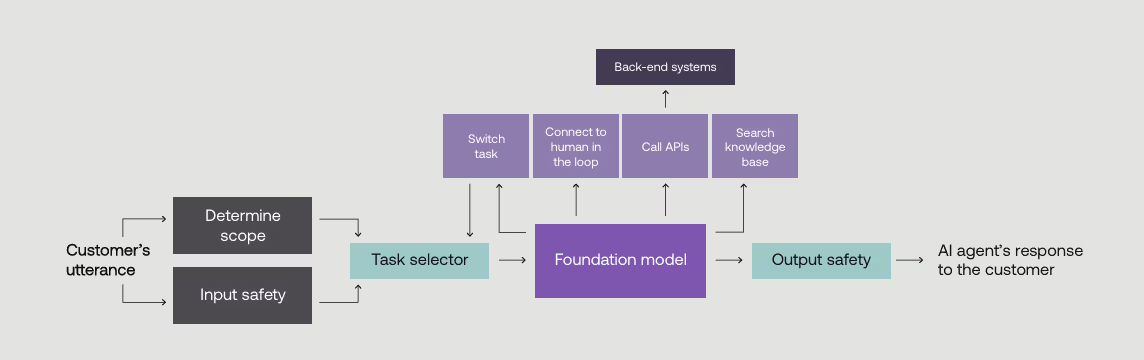

A safe and reliable AI agent is far more than a large language model (LLM) grounded in your internal knowledge sources. It’s a sophisticated system that integrates

- multiple LLMs,

- layered input and output safety mechanisms,

- automated QA for responses,

- secure connections to external systems,

- transparent documentation of the agent’s behavior, and

- no-code tools that allow business teams to manage and optimize performance with agility.

This complexity is essential to achieve the safety, accuracy, and performance that enterprise operations demand. It’s also what separates a functional prototype from a mature, production-ready solution that delivers consistent results at scale.

A safe and reliable AI agent should include:

Multiple LLMs

No one model will be best suited for every task, so it’s important to incorporate multiple models into your AI agent solution and use each one for what it does best. One model might handle primary reasoning, while another serves as a judge that assesses the accuracy and relevance of responses before they are sent to your customer. This real-time evaluation is critical, and it works best when the judge is a separate model, because LLMs tend to favor their own content: a model reviewing its own responses is more likely to let inaccurate or irrelevant ones through.
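To make the judge pattern concrete, here is a minimal Python sketch. It assumes each model is simply a function from prompt text to response text; `answer`, `judge_response`, and the two stub models are hypothetical names, and the toy judge only checks that the figure quoted in the draft appears in the supplied context. A real judge would be a second LLM with a carefully designed evaluation prompt.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# A "model" is any function from prompt text to response text. In practice the
# primary and judge models would be two different LLMs; the stubs are hypothetical.
Model = Callable[[str], str]

@dataclass
class Verdict:
    approved: bool
    reason: str

def judge_response(judge: Model, question: str, draft: str, context: str) -> Verdict:
    """Ask a separate judge model whether the draft is grounded and relevant."""
    prompt = (
        "Reply PASS or FAIL, then a short reason.\n"
        f"Context: {context}\nQuestion: {question}\nDraft answer: {draft}"
    )
    raw = judge(prompt).strip()
    return Verdict(approved=raw.upper().startswith("PASS"), reason=raw)

def answer(primary: Model, judge: Model, question: str, context: str) -> Optional[str]:
    """Draft with the primary model; release the draft only if the judge approves."""
    draft = primary(f"Context: {context}\nQuestion: {question}")
    verdict = judge_response(judge, question, draft, context)
    return draft if verdict.approved else None  # None means: retry or escalate

def stub_primary(prompt: str) -> str:
    return "Your balance is $42.10."

def stub_judge(prompt: str) -> str:
    # Toy grounding check: does the figure at the end of the draft appear in the context?
    context = prompt.split("Context: ", 1)[1].split("\nQuestion:", 1)[0]
    draft = prompt.split("Draft answer: ", 1)[1]
    claimed = draft.strip().rstrip(".").split()[-1]
    return "PASS grounded in context" if claimed in context else "FAIL not in context"
```

Keeping both models behind the same simple interface also means either one can be swapped out without touching the surrounding logic.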

AI models are evolving quickly, so flexibility is essential. The best model for a task today may not be the best model for that task in the future.

Secure, seamless integration with external systems

To resolve customer inquiries and manage customer calls effectively, your AI agent will need access to other systems, such as CRMs and billing platforms, so it can retrieve data and take action on the customer’s behalf. It will rely on APIs to access those systems.

In many cases, the relevant APIs already exist in your technology ecosystem, but they may not return data in a format your AI agent can process directly. Your technical teams will need to modify or wrap those APIs, or build another way to translate their output into a form the AI agent can use.
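As a hedged illustration of what wrapping an existing API can look like, the Python sketch below translates a hypothetical legacy billing payload (cryptic field names, amounts in cents stored as strings, dates as `YYYYMMDD`) into plain, labeled fields an AI agent can work with. The payload, field names, and status codes are invented for the example, and `fetch_legacy_billing` stands in for whatever call your existing API actually requires.

```python
import json
from datetime import datetime

# Hypothetical legacy response; field names and codes are illustrative only.
LEGACY_SAMPLE = '{"ACCT_NO": "000123", "BAL_CTS": "4210", "DUE_DT": "20250115", "STS_CD": "A"}'

STATUS_CODES = {"A": "active", "S": "suspended", "C": "closed"}

def fetch_legacy_billing(account_id: str) -> str:
    """Stand-in for the existing API call; always returns the sample payload."""
    return LEGACY_SAMPLE

def get_billing_summary(account_id: str) -> dict:
    """Wrapper that translates the legacy payload into agent-friendly fields."""
    raw = json.loads(fetch_legacy_billing(account_id))
    due = datetime.strptime(raw["DUE_DT"], "%Y%m%d").date()
    return {
        "account_id": raw["ACCT_NO"].lstrip("0"),
        "balance_usd": int(raw["BAL_CTS"]) / 100,  # cents -> dollars
        "due_date": due.isoformat(),
        "status": STATUS_CODES.get(raw["STS_CD"], "unknown"),
    }
```

Keeping the translation in a thin wrapper means the underlying API does not have to change, and the agent always sees one consistent shape.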

Model orchestration

LLMs are powerful, but there are still serious limits to what they can accomplish on their own. They sometimes struggle with multi-step processes and with applying contextual information in a meaningful way. Using multiple models, each for a specific purpose, can help overcome these limitations, but only with nuanced orchestration.

Model orchestration manages the complex workflow across components and processes, including the interactions among the various LLMs, API calls, data retrieval, prompt management, and more. This orchestration layer is the backbone of any enterprise-ready agentic AI solution.
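The core of an orchestration loop can be sketched in a few lines of Python, under simplifying assumptions: a hypothetical planner (in practice an LLM) returns either `CALL <tool>` or `REPLY <text>`, tools take no arguments, and each tool result is appended to a running transcript. Real orchestration layers also handle tool arguments, retrieval, prompt assembly, and safety checks; none of these names come from a specific product.

```python
from typing import Callable, Dict, List

# Hypothetical planner: reads the transcript and returns "CALL <tool>" or "REPLY <text>".
Planner = Callable[[List[str]], str]
Tool = Callable[[], str]

def orchestrate(planner: Planner, tools: Dict[str, Tool], user_msg: str, max_steps: int = 5) -> str:
    """Ask the planner for the next step, run tools, feed results back, stop on a reply."""
    transcript = [f"USER: {user_msg}"]
    for _ in range(max_steps):
        step = planner(transcript)
        kind, _, arg = step.partition(" ")
        if kind == "REPLY":
            return arg
        if kind == "CALL" and arg in tools:
            transcript.append(f"TOOL {arg}: {tools[arg]()}")
        else:
            transcript.append(f"ERROR: unknown step {step!r}")
    return "ESCALATE"  # step budget exhausted: hand off instead of looping forever

def stub_planner(transcript: List[str]) -> str:
    # Call the balance tool once, then answer using its result.
    results = [t for t in transcript if t.startswith("TOOL get_balance:")]
    if not results:
        return "CALL get_balance"
    return "REPLY Your balance is " + results[-1].split(": ", 1)[1]
```

The step budget is a deliberate design choice: if the planner never settles on a reply, the loop hands off rather than running indefinitely.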

Data security

Because your AI agent will access other systems using APIs, security and authentication in the API layer are critical. They ensure that the AI agent can access data only for the customer it’s actively interacting with and only for the task it’s currently performing, and that it cannot retrieve data it is not authorized to use.
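One way to picture per-customer, per-task authorization is a credential bound to a single conversation. In this hypothetical Python sketch, a `SessionGrant` carries one customer ID and only the scopes the current task needs, and every data call checks both before it runs. The scope names and the placeholder balance are assumptions for illustration; in production these checks usually live in the API layer itself rather than in the agent.

```python
from dataclasses import dataclass
from typing import FrozenSet

@dataclass(frozen=True)
class SessionGrant:
    """Hypothetical credential issued for one conversation and one task."""
    customer_id: str
    scopes: FrozenSet[str]  # e.g. frozenset({"billing:read"})

def is_authorized(grant: SessionGrant, customer_id: str, scope: str) -> bool:
    """Allow access only to the active customer, and only within the task's scopes."""
    return customer_id == grant.customer_id and scope in grant.scopes

def read_balance(grant: SessionGrant, customer_id: str) -> float:
    if not is_authorized(grant, customer_id, "billing:read"):
        raise PermissionError(f"not authorized to read billing for {customer_id}")
    return 42.10  # placeholder for the real billing lookup
```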

During customer interactions, personally identifiable information (PII) is sometimes part of the conversation and is often necessary to resolve the customer’s issue. To maintain data privacy, PII should always be redacted before the data is stored. If you use third-party components in your solution, you’ll need to be able to guarantee that any data shared with a third party is redacted before it leaves your infrastructure.
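A minimal redaction pass might look like the Python sketch below. The regular expressions are illustrative assumptions that cover only a few U.S.-style formats; production redaction typically combines pattern matching with ML-based entity detection and covers far more PII types.

```python
import re

# Illustrative patterns only. Order matters: more specific patterns run first.
PII_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("CARD", re.compile(r"\b(?:\d[ -]?){13,16}\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
]

def redact(text: str) -> str:
    """Replace detected PII with typed placeholders before text is stored or shared."""
    for label, pattern in PII_PATTERNS:
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `redact("Email jane.doe@example.com, card 4111 1111 1111 1111, call 555-123-4567.")` returns `"Email [EMAIL], card [CARD], call [PHONE]."`.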

Input safety

Effective agentic AI solutions need multi-layered defenses against malicious or manipulative inputs. These defenses must detect and block exploits such as prompt injection, abuse attempts, or out-of-scope requests that could harm your brand. A layered approach—covering prompt filtering, harmful language detection, and safeguards to keep the AI within defined boundaries—is crucial.
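The layered approach can be sketched as a chain of independent checks, where any single layer can block the input. In this hypothetical Python example, simple pattern and keyword rules stand in for prompt-injection detection, harmful-language detection, and a scope guard. Real systems generally use trained classifiers; the phrases, word list, and topics here are placeholders.

```python
import re
from typing import Callable, List, Optional

# Each layer returns a reason string if it blocks the input, else None.
Check = Callable[[str], Optional[str]]

INJECTION_HINTS = re.compile(r"ignore (all|previous|prior) instructions|system prompt", re.I)
ABUSIVE_TERMS = {"idiot", "stupid"}
IN_SCOPE_TOPICS = ("bill", "payment", "plan", "order", "account")

def prompt_injection_filter(text: str) -> Optional[str]:
    return "possible prompt injection" if INJECTION_HINTS.search(text) else None

def harmful_language_filter(text: str) -> Optional[str]:
    words = set(re.findall(r"[a-z']+", text.lower()))
    return "abusive language" if words & ABUSIVE_TERMS else None

def scope_guard(text: str) -> Optional[str]:
    lowered = text.lower()
    return None if any(topic in lowered for topic in IN_SCOPE_TOPICS) else "out of scope"

LAYERS: List[Check] = [prompt_injection_filter, harmful_language_filter, scope_guard]

def screen_input(text: str) -> Optional[str]:
    """Run each layer in order; return the first blocking reason, or None if all pass."""
    for layer in LAYERS:
        reason = layer(text)
        if reason:
            return reason
    return None
```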

Output safety

Even with strong grounding, LLMs can hallucinate, producing inaccurate or misleading responses. AI agents must include safety checks that review outputs before sharing them with customers, along with clear escalation paths for when AI-generated responses fall short. Whether the AI retries or transfers to a live agent, these processes are essential to maintain trust and service quality.
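Here is a hedged Python sketch of the retry-then-escalate flow described above. `generate` and `is_safe` are hypothetical stand-ins for the response model and whatever output checks you run (for example, a judge model); the attempt limit and handoff message are assumptions.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Outcome:
    action: str  # "send" or "escalate"
    text: str

HANDOFF_MESSAGE = "Let me connect you with a member of our team."

def respond_with_safety(
    generate: Callable[[int], str],
    is_safe: Callable[[str], bool],
    max_attempts: int = 2,
) -> Outcome:
    """Check every draft before it reaches the customer; retry, then escalate to a live agent."""
    for attempt in range(max_attempts):
        draft = generate(attempt)  # attempt index lets the generator vary its approach
        if is_safe(draft):
            return Outcome("send", draft)
    return Outcome("escalate", HANDOFF_MESSAGE)
```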

Human-in-the-loop

Few enterprises are ready to rely fully on AI for customer service without clearly defined processes for human intervention and oversight. In other words, there’s broad agreement that it’s important to keep a human in the loop. The question is, what do those processes look like, and what exactly do these humans in the loop do? You have a range of options to consider:

- Treat the live agents as an escalation point to take over an interaction when the AI agent fails, much like they do with traditional deterministic bots.

- Require human oversight and approval for every action the AI agent takes, which severely limits the impact the AI agent can make on your contact center’s capacity.

- Define a middle ground between complete AI independence and total human oversight by creating a solution that can work collaboratively with human agents.

Whatever path you choose, your solution will need to enable the human-AI relationship you’re envisioning.

See why Forrester says the ASAPP human-in-the-loop capability enables the transition to AI-led customer service by capturing the tacit knowledge of human agents.

Intuitive controls for business users

Customer service operations must adapt constantly. To fully realize the benefits of customer service automation, AI agents need no-code tools that empower non-technical business users to update processes, refine tone, and launch new use cases—without waiting on development resources. This agility prevents bottlenecks and ensures the AI stays aligned with evolving business needs.

Production monitoring

Generative AI behaves probabilistically, which means its outputs can shift unexpectedly over time. Continuous, real-time monitoring is essential to catch issues early, understand performance trends, and intervene before small problems escalate.
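As one simple illustration of continuous monitoring, the Python sketch below tracks the rate of a per-conversation event (say, escalations to a live agent) over a sliding window and flags when it rises above an expected baseline. The metric, window size, baseline, and tolerance are hypothetical; production monitoring usually tracks many signals and feeds dashboards and alerting systems.

```python
from collections import deque

class RollingRateMonitor:
    """Track how often an event occurs over the last `window` conversations
    and flag when the rate exceeds baseline + tolerance. Values are hypothetical."""

    def __init__(self, baseline: float, window: int = 200, tolerance: float = 0.05):
        self.baseline = baseline
        self.tolerance = tolerance
        self.events = deque(maxlen=window)  # oldest entries drop off automatically

    def record(self, event_occurred: bool) -> None:
        self.events.append(1 if event_occurred else 0)

    def rate(self) -> float:
        return sum(self.events) / len(self.events) if self.events else 0.0

    def alert(self) -> bool:
        # Only alert once the window is full, to avoid noise from small samples.
        full = len(self.events) == self.events.maxlen
        return full and self.rate() > self.baseline + self.tolerance
```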

Production testing

Generative AI creates new content based on learned patterns, so its responses vary and can be difficult to predict. This variability makes testing an AI agent exceptionally difficult, yet thorough testing is a crucial component of any enterprise-grade solution. Your AI agent should include a robust pre-production testing process that combines multiple techniques, including simulation-based testing built from production data.

GenerativeAgent comes with easy-to-use testing and simulation tools to help you ensure that it performs as you expect, even at scale. These tools include mock APIs, mock data, and simulation testing interfaces.
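GenerativeAgent’s own testing tools are not shown here. As a generic illustration of the mock-API idea, the hypothetical Python sketch below injects a mock balance lookup backed by mock data, including a simulated timeout, and replays scripted scenarios against a tiny agent step. In practice the scenarios would be drawn from redacted production conversations, and the agent step would be far richer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# The agent step depends on an injected lookup, so tests can swap in a mock API.
BalanceLookup = Callable[[str], float]

def handle_balance_question(customer_id: str, lookup: BalanceLookup) -> str:
    try:
        balance = lookup(customer_id)
    except TimeoutError:
        return "ESCALATE"
    return f"Your current balance is ${balance:.2f}."

def mock_lookup(data: Dict[str, float]) -> BalanceLookup:
    """Mock API backed by mock data; unknown customers simulate an upstream timeout."""
    def lookup(customer_id: str) -> float:
        if customer_id not in data:
            raise TimeoutError("simulated upstream timeout")
        return data[customer_id]
    return lookup

@dataclass
class Scenario:
    customer_id: str
    expected: str

def run_simulation(scenarios: List[Scenario], lookup: BalanceLookup) -> List[str]:
    """Replay scripted scenarios and return the IDs of any that failed."""
    return [
        s.customer_id
        for s in scenarios
        if handle_balance_question(s.customer_id, lookup) != s.expected
    ]
```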

Keeping pace with AI innovation

As quickly as the technology is evolving, keeping up with every model improvement and new technique for applying AI in the real world can seem overwhelming.

Value realization

In the end, the question of which agentic AI solution to choose for your contact center isn’t really about technical complexity. It’s about which option delivers the best value for your business. Some of the factors that drive the relative value of each option aren’t obvious, so your AI agent should come with a clear, specific explanation of how it will deliver value.

It's more than the tech

Choosing an AI agent for your contact center isn’t just a technology decision—it’s a business decision with long-term implications. Generative AI is advancing rapidly, and so are customer expectations. The pace of innovation, coupled with the unique risks and opportunities of large language models, makes this a different kind of investment than traditional software. You’re not just buying a tool; you’re selecting a partner to help you navigate a fast-moving and often uncertain landscape.

At ASAPP, we believe the right AI agent is one that balances innovation with operational rigor. One that delivers measurable value while protecting your customers and your brand. We’ve built GenerativeAgent with this balance in mind—and we’re committed to helping you realize its full potential if it’s the right fit for your organization.

Whatever you decide, we hope this guide has helped you ask better questions and make a more informed choice.