The path from good to exceptional

Building an AI agent is easy. Even building a good AI agent that gets it right 40-50% of the time is easy. Anybody can do it. It could use Retrieval-Augmented Generation (RAG) to answer questions using your knowledge base (KB) as a reference. It could call tools such as APIs and Model Context Protocol (MCP) servers. You could pretty it up by working on its tone and personality. You could even instruct an AI agent to build you an agent for your use case. What could go wrong?

Well, everything. Customer service is about trust. When your customer uses your chatbot or voicebot and it doesn’t understand them, doesn’t help them solve their problem, or is just plain irksome to use, they will not use it again. Ever. The bot has to hit it out of the park to gain that user’s confidence, so that they are willing to keep interacting with your brand that way. Once trust is gained, the user might forgive the bot if the 19th interaction was unsatisfying. But if it’s bad the first or second time, you’ve lost them.

So how do you build trust? You get there by building a bot that performs brilliantly almost all the time. You take your AI agent from good to exceptionally good.

Which brings us back to where we started: the problem with quickly assembling a do-it-yourself agent (“upload your documents, point us to your endpoints, stick in your prompt”) is that such agents may be good some of the time, but they also do some crazy, unexpected stuff some of the time.

Why is this?

Well, enterprise use cases require nuanced business logic: some of it deterministic, some of it requiring reasoning, and some of it requiring judgment based on policies described in arcane and often conflicting sources. Compounding this, the backend systems the agent has to use are far from AI-ready.

Large Language Models (LLMs) don’t do well with this. You could try all kinds of prompting tricks. Tell your bot to follow the policy. Then plead with it to follow the policy. Warn it that bad things will happen if it doesn’t follow the policy. SAY IT USING ALL CAPS. And remind it once more at the end of your prompt.

ASAPP has been building AI agents at production scale for large enterprises: not RAG agents, and not conversational wrappers around deterministic systems, but agents that run complex workflows. When we began, our agent was slightly better than a deterministic rule-based bot, but a whole lot more expensive. Fortunately, we’ve learned plenty of lessons since then, and we’re now able to create agents that perform exceptionally well, quickly.

One of the keys is context engineering. ASAPP’s platform uses a fleet of models orchestrated into a symphony of understanding, reasoning, and action. But for high performance, it is critical to present the system, at each state, with a view of the world that is necessary and sufficient for its task, and no more. We do this in five ways:

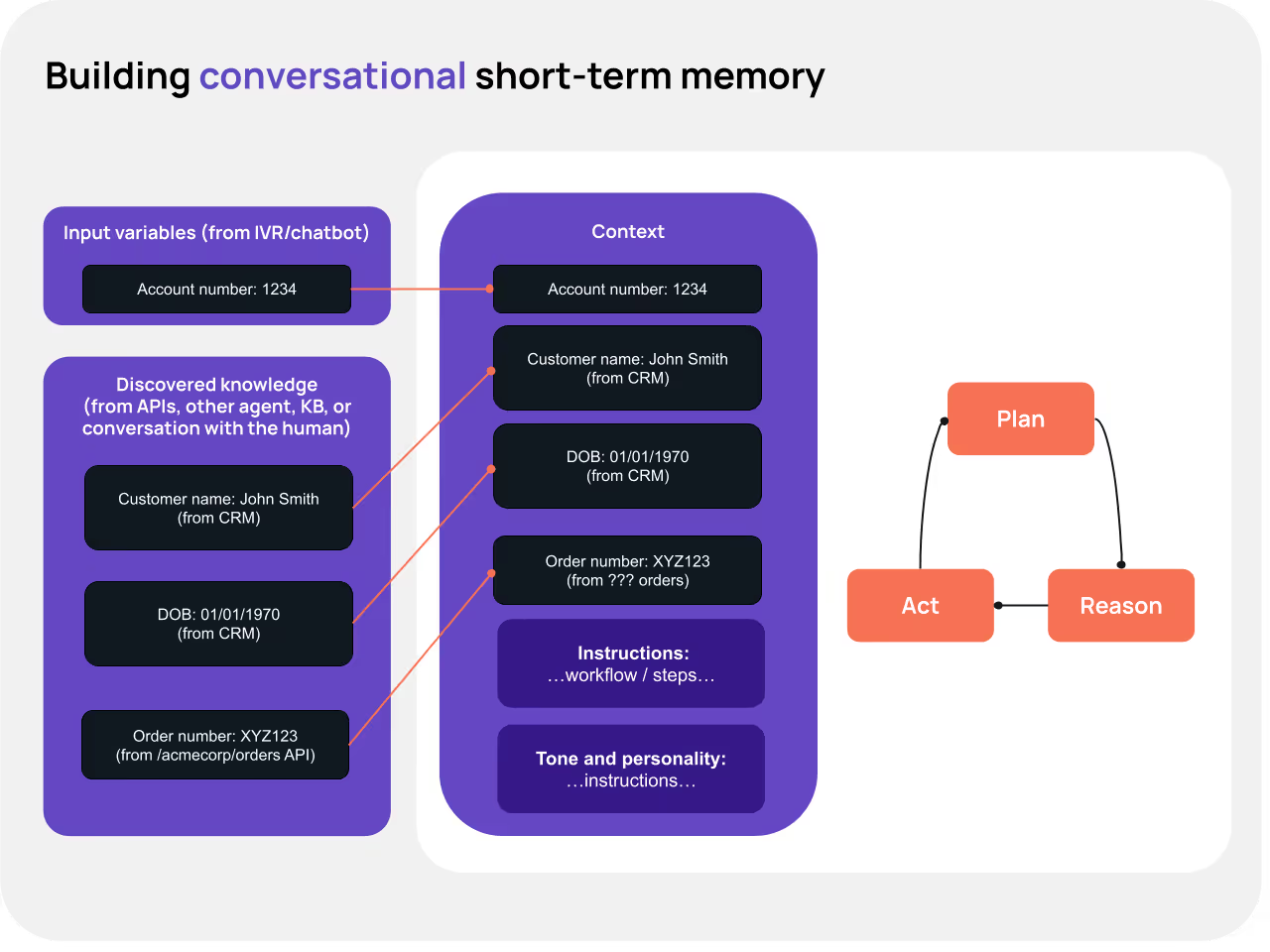

1. Short-term memory management

ASAPP’s GenerativeAgent includes methods to define variables from API responses, injected context, or other sources of information. These variables are presented to the system in a special structured form for the life of the conversation, across the various types of actions the agent might need to take, improving the reliability of tool calling and reasoning. As a result, it never forgets the account number or passes it incorrectly to an API that needs it.
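
To make the idea concrete, here is a minimal sketch of conversation-scoped variables in Python. It is not GenerativeAgent’s API: the `ConversationMemory` class, its methods, and the `acctNo` response field are hypothetical. It shows values being copied out of an API response once, rendered into a structured block the model would see on every turn, and reused directly as tool arguments.

```python
import json
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ConversationMemory:
    """Hypothetical short-term memory: named variables kept for the whole conversation."""
    variables: dict[str, Any] = field(default_factory=dict)

    def capture(self, response: dict[str, Any], mapping: dict[str, str]) -> None:
        # mapping: variable name -> key in the API response to copy it from
        for var_name, response_key in mapping.items():
            if response_key in response:
                self.variables[var_name] = response[response_key]

    def render(self) -> str:
        # Structured block included in every model call, so the values stay in view
        return "<memory>\n" + json.dumps(self.variables, indent=2, sort_keys=True) + "\n</memory>"

    def fill_args(self, required: list[str]) -> dict[str, Any]:
        # Tool arguments come straight from memory instead of being re-typed by the model
        missing = [name for name in required if name not in self.variables]
        if missing:
            raise KeyError(f"missing variables: {missing}")
        return {name: self.variables[name] for name in required}


memory = ConversationMemory()
lookup_response = {"acctNo": "A-10293", "status": "active", "balance": 42.5}
memory.capture(lookup_response, {"account_number": "acctNo", "account_status": "status"})
args = memory.fill_args(["account_number"])
print(args)
```

Filling tool arguments from stored variables, rather than asking the model to repeat values it saw many turns ago, is what removes the chance of a mistyped account number.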

2. Conditional and templated prompting

Wrestling with prompt instructions to manage an agent’s behavior is hard. GenerativeAgent comes with predefined variables (such as the channel: voice or chat) which, when combined with short-term memory, provide powerful ways to create conditional prompts.

Consider refunding a customer for a returned product, with different policies for premium and standard customers. Use cases like this are extremely common. Rather than writing a single prompt with an if-then-else for the model to interpret, GenerativeAgent lets you explicitly hide parts of the prompt based on variables.

The effect is that for complex logic, the agent sees only the instructions that matter, making a huge difference to task adherence. Add to this the ability to specify arbitrary logic using Jinja templating, and you have the foundations of a powerful way to program the prompt.
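
The sketch below illustrates conditional prompting with Python’s standard library rather than Jinja, so it runs without extra dependencies; in a Jinja template, each guarded block would instead be wrapped in `{% if customer_tier == "premium" %} ... {% endif %}`. The variable names, refund windows, and block structure are hypothetical examples, not GenerativeAgent’s configuration format. The point is that the model receives only the instructions whose conditions hold, instead of an if-then-else it must interpret itself.

```python
from string import Template

# Hypothetical instruction blocks, each guarded by a condition on conversation variables.
INSTRUCTION_BLOCKS = [
    (lambda v: True, "You are helping a customer return a product."),
    (lambda v: v["customer_tier"] == "premium",
     "Refund the full amount, including shipping, for returns within 60 days."),
    (lambda v: v["customer_tier"] != "premium",
     "Refund the item price, excluding shipping, for returns within 30 days."),
    (lambda v: v["channel"] == "voice",
     "Keep answers short; do not read out long lists."),
    (lambda v: True, "The customer's order number is $order_id."),
]


def build_prompt(variables: dict[str, str]) -> str:
    """Keep only the blocks whose condition holds, then substitute variable values."""
    selected = [text for condition, text in INSTRUCTION_BLOCKS if condition(variables)]
    return Template("\n".join(selected)).substitute(variables)


prompt = build_prompt({"customer_tier": "standard", "channel": "chat", "order_id": "98765"})
print(prompt)
```

For a standard customer on chat, the premium refund rule and the voice-only guidance never reach the model at all, so it cannot misapply them.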

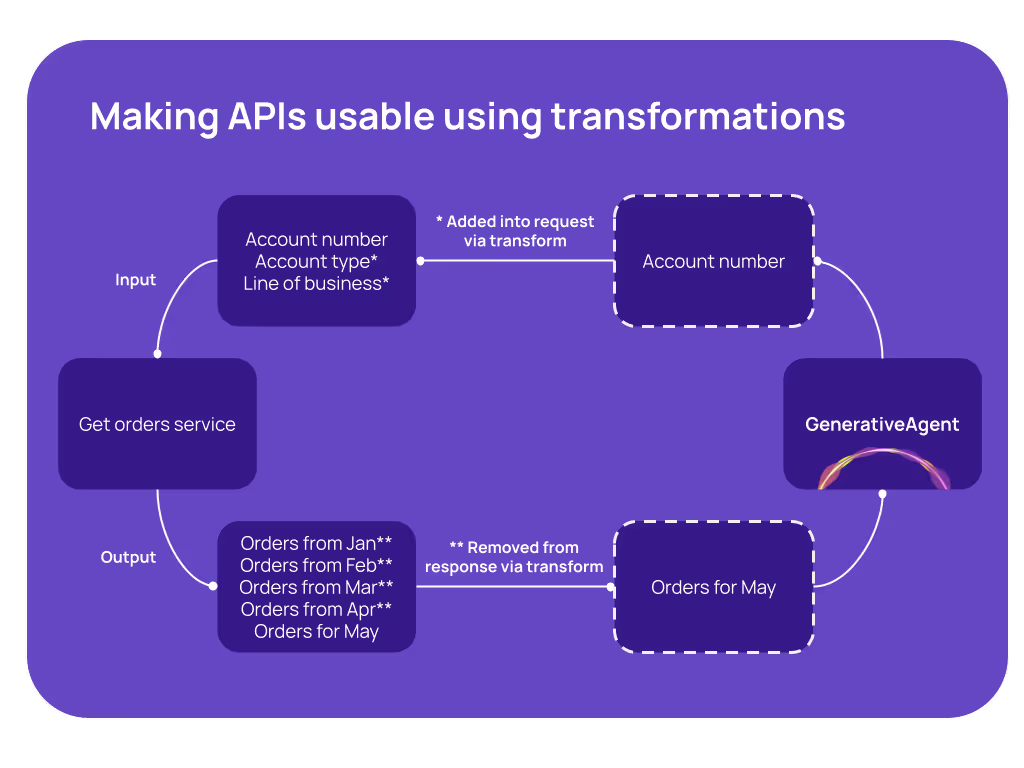

3. API transformations

Enterprise APIs come in many flavors. Perhaps the one thing they have in common is that they were never designed for AI to make sense of them. Poorly documented APIs, with ambiguous or unintelligible field names, are common. Some endpoints are monolithic and return too much data, while others are overly fragmented. GenerativeAgent separates the integration of an API from how it is exposed to the AI, and allows developers to specify input/output transformations. This lets them expose only the data the AI needs, making it easier to interpret and, therefore, to act on accurately. The transformation layer also offers optional built-in redaction, so you can obfuscate data you don’t want to expose to the AI, such as personally identifiable information (PII) or protected health information (PHI). In one deployment, we used these tools to reduce a response containing six months of transaction history to only the last month’s transactions.
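
A minimal sketch of an output transformation, assuming a hypothetical legacy response with cryptic field names: it renames fields, drops the customer’s name and SSN digits entirely, masks card-like digit runs, and keeps only roughly the last month of transactions (approximated here as 30 days). The field names, the regex-based redaction, and the `transform_transactions` helper are illustrative assumptions; production PII redaction typically needs more than a single pattern.

```python
import re
from datetime import date, timedelta

# Hypothetical raw response from a legacy endpoint: cryptic names, months of history.
RAW_RESPONSE = {
    "cust_nm": "Jane Doe",
    "ssn_l4": "1234",
    "txn_hist": [
        {"dt": "2024-01-15", "amt": 19.99, "desc": "Streaming subscription"},
        {"dt": "2024-05-20", "amt": 5.00, "desc": "Coffee"},
        {"dt": "2024-06-02", "amt": 120.00, "desc": "Payment from card 4111111111111111"},
    ],
}

# Assumption for the sketch: treat any standalone run of 13-19 digits as a card number
CARD_NUMBER = re.compile(r"\b\d{13,19}\b")


def redact(text: str) -> str:
    # Mask card-like digit runs before the model ever sees them
    return CARD_NUMBER.sub("[REDACTED]", text)


def transform_transactions(raw: dict, today: date, days: int = 30) -> dict:
    """Output transformation: rename fields, drop PII, keep only recent transactions."""
    cutoff = today - timedelta(days=days)
    recent = [
        {"date": t["dt"], "amount": t["amt"], "description": redact(t["desc"])}
        for t in raw["txn_hist"]
        if date.fromisoformat(t["dt"]) >= cutoff
    ]
    # Customer name and SSN digits are deliberately not passed through
    return {"recent_transactions": recent}


exposed = transform_transactions(RAW_RESPONSE, today=date(2024, 6, 10))
print(exposed)
```

Because the transformation runs between the API and the model, the raw response can stay exactly as the backend produces it.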

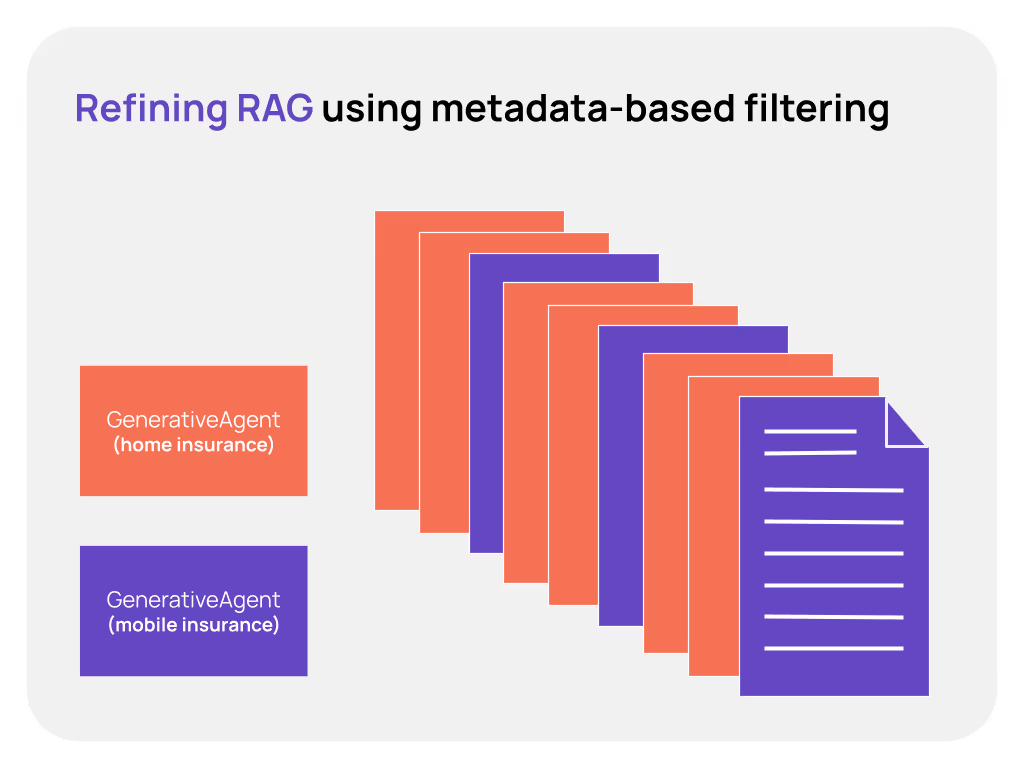

4. Prodding RAG via metadata association

Getting an agent to look up a knowledge base via RAG for question answering is basic functionality. However, when you have a large body of content in many formats, and many agents accessing all of it, squeezing out every ounce of performance is critical. We do this by narrowing the set of snippets and documents that are candidates for any search query, using predefined metadata to match content to the specific tasks the agent might need to perform. For example, if there’s a use case for filing a claim in your mobile insurance line of business, our tagging system ensures that the search covers only the content relevant to that task.
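
Here is a minimal sketch of metadata-filtered retrieval, assuming each snippet carries hypothetical `lob:` (line of business) and `task:` tags. Candidates are filtered by tag before any ranking happens; simple word overlap stands in for the embedding similarity a real RAG system would use. This illustrates the general technique and is not ASAPP’s tagging system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Snippet:
    text: str
    tags: frozenset[str]  # hypothetical metadata: line of business and task


# Toy knowledge base for the sketch
KB = [
    Snippet("To file a mobile insurance claim, submit a photo of the damaged device.",
            frozenset({"lob:mobile_insurance", "task:file_claim"})),
    Snippet("Home insurance claims require photos of the damage.",
            frozenset({"lob:home_insurance", "task:file_claim"})),
    Snippet("Mobile plans can be upgraded after 12 months.",
            frozenset({"lob:mobile", "task:upgrade"})),
]


def score(query: str, text: str) -> int:
    # Stand-in for embedding similarity: count shared lowercase words
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(query: str, required_tags: set[str], k: int = 2) -> list[str]:
    """Filter by metadata first, then rank only the remaining candidates."""
    candidates = [s for s in KB if required_tags <= s.tags]
    ranked = sorted(candidates, key=lambda s: score(query, s.text), reverse=True)
    return [s.text for s in ranked[:k]]


results = retrieve("how do I file a claim", {"lob:mobile_insurance", "task:file_claim"})
print(results)
```

Filtering before ranking means the home insurance snippet, which shares words like "claims" with the query, can never outrank the right answer.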

5. Context injection from external systems

Good customer service comes from an agent who knows you. With GenerativeAgent this is straightforward, because it integrates with CRMs such as Salesforce. This allows the system to preload context relevant to the conversation (such as a summary of the last customer service interaction, or recent transactions) and inject it into the conversation, effectively using the CRM as the long-term memory store that powers a more personalized experience.
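
A minimal sketch of CRM context injection, under stated assumptions: `CRMClient` is a hypothetical interface (a real integration would wrap the CRM vendor’s own API), `FakeCRM` returns canned data so the example runs, and the context is injected as a chat-style `system` message, a common convention that may differ from how GenerativeAgent does it.

```python
from typing import Protocol


class CRMClient(Protocol):
    # Hypothetical interface; a real integration would wrap the CRM's own API
    def last_interaction_summary(self, customer_id: str) -> str: ...
    def recent_orders(self, customer_id: str, limit: int) -> list[str]: ...


class FakeCRM:
    """In-memory stand-in so the sketch runs without a real CRM."""

    def last_interaction_summary(self, customer_id: str) -> str:
        return "Reported a delayed delivery for order 98765; replacement promised."

    def recent_orders(self, customer_id: str, limit: int) -> list[str]:
        return ["98765: headphones (replacement shipped)", "97001: phone case"][:limit]


def build_opening_messages(crm: CRMClient, customer_id: str) -> list[dict[str, str]]:
    """Preload long-term context from the CRM before the customer's first turn."""
    orders = "\n".join(f"- {o}" for o in crm.recent_orders(customer_id, limit=3))
    context = (
        "Customer context (from CRM):\n"
        f"Last interaction: {crm.last_interaction_summary(customer_id)}\n"
        f"Recent orders:\n{orders}"
    )
    return [{"role": "system", "content": context}]


messages = build_opening_messages(FakeCRM(), customer_id="C-123")
print(messages[0]["content"])
```

Loading this context up front lets the agent open with "Is this about the replacement headphones?" instead of asking the customer to repeat their history.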

What it takes to create an impact with AI in CX

When we first launched GenerativeAgent, customers were impressed. While conversations were clearly more pleasant than with rule-based bots, the agent sometimes made mistakes, and the uplift in performance was good but not great.

Over the past 12 months, we’ve discovered that the solution is not one magic trick but many small ones, mixing cutting-edge research with cleverness and common sense. Our average containment (the share of conversations the agent resolves without handing off to a human) has risen from 40% to 80% for most use cases, and it takes us less time than ever to reach the 80% mark. This engenders trust: between us and our customers, but more importantly, between our customers and theirs.

We keep hearing about AI pilots failing, and we aren’t surprised; we know what it takes to make AI create real impact. And if you want to create an impact too, you should try GenerativeAgent!