There’s a common misconception in customer service automation that the goal of a generative AI agent is to replicate a human agent 1:1. It’s an appealing idea, but it misunderstands both the problem we’re solving in customer service and the potential of AI technology itself.

Mimicking human tone and behavior may create a familiar experience, but it doesn’t guarantee accuracy, speed, or safety. It brings us back to one of the most frequently asked questions: Is AI replacing humans?

The answer is more nuanced than yes or no, because it’s the wrong question. The real objective isn’t replacement; it’s results. A generative AI agent isn’t designed to imitate a human. It’s built to improve the system by solving problems faster, adhering to policy, escalating to a human only when necessary, and doing all of that consistently, even in complex, high-volume environments.

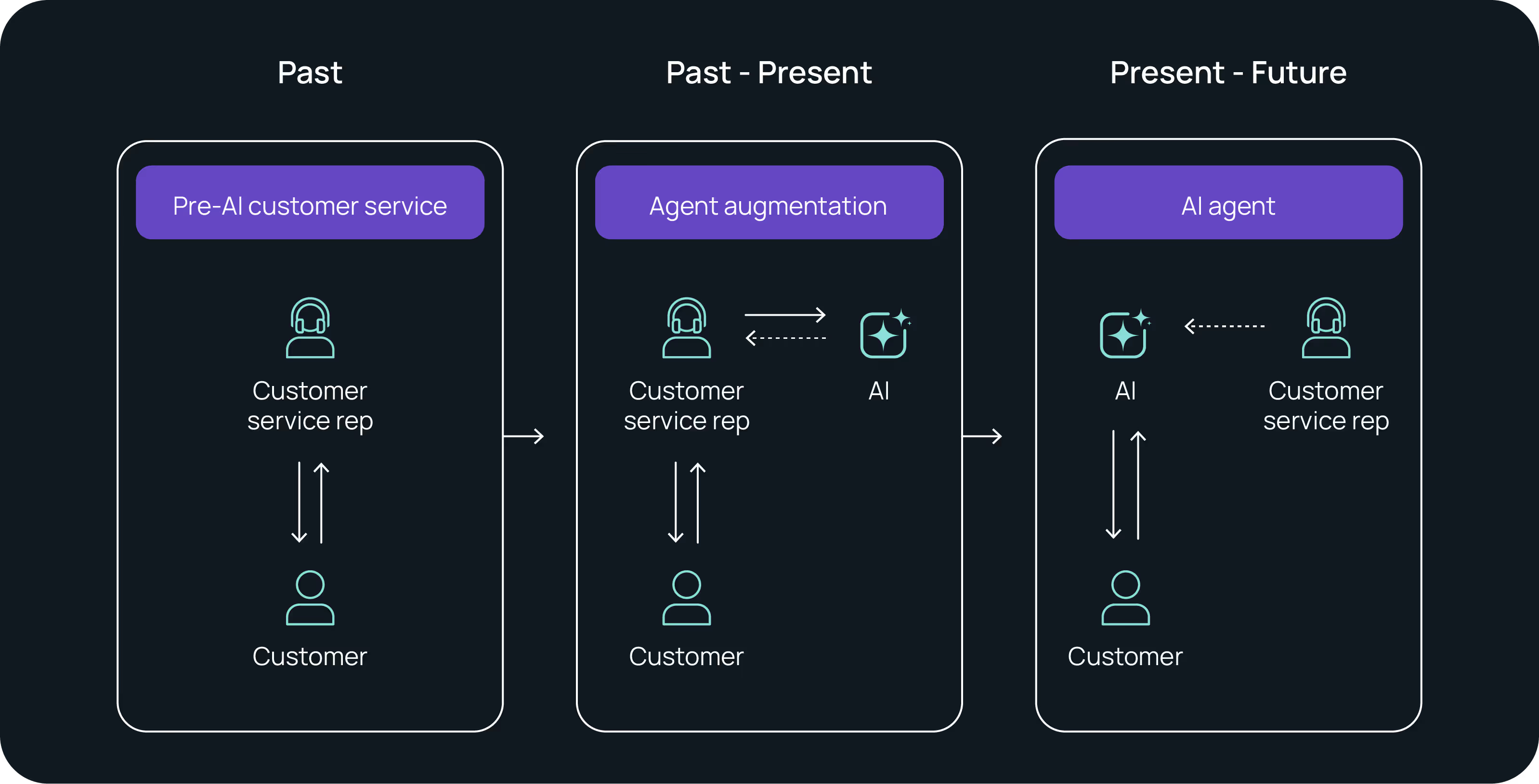

We’ve shifted from a model where AI supports human agents who support customers to one where humans support AI that supports customers. This doesn’t diminish the human role; it elevates it. And it allows us to finally address long-standing challenges in customer service through technology that can withstand the pace, pressure, and complexity human agents have shouldered alone for decades.

What makes generative AI essential to contact center efficiency isn’t mimicry; it’s consistency. Its ability to operate across nuanced knowledge bases and intricate workflows is the real test, and the real opportunity.

What a “human-like” generative agent really means

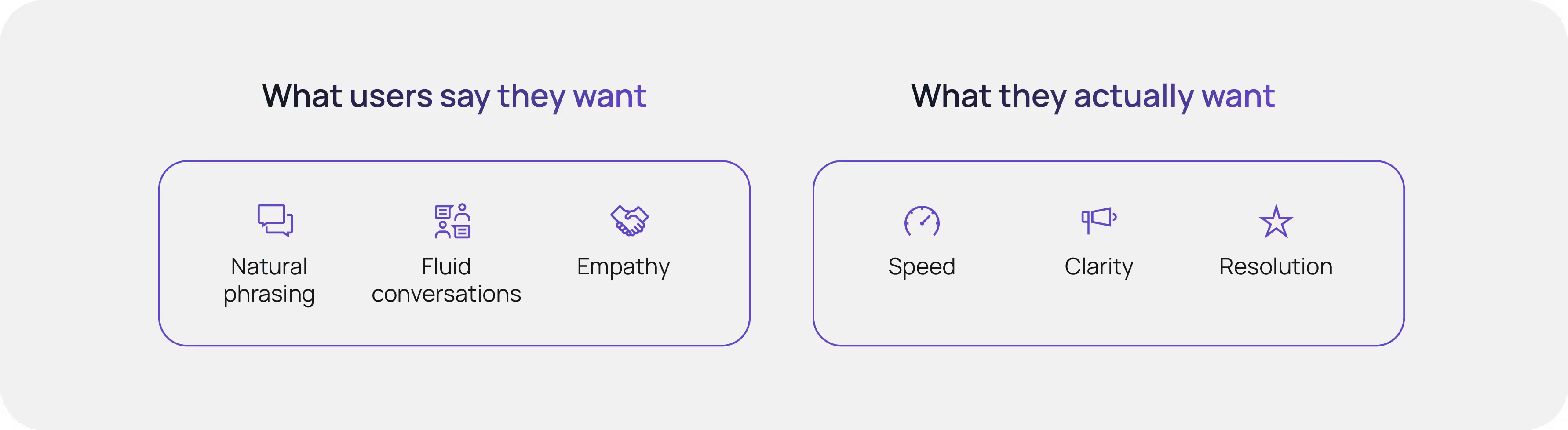

When people say they want AI to feel “human-like,” most solution providers take that literally. At ASAPP, we asked real users who interact with enterprise brands every day what “human-like” means to them. The answers were consistent: natural phrasing, fluid conversation, and maybe even a touch of empathy. It’s an understandable instinct. If human agents are the default comparison point for generative AI agents, it’s only natural to ask AI technology to sound and behave like them.

But when we dug deeper through real-world deployments and user feedback, a more practical truth emerged. What customers actually want is speed, clarity, and resolution. Upfront, a more “human-like” voice experience may build trust, but that trust fades quickly if the agent can’t resolve the issue, requires extensive manual configuration, or fails to operate safely.

Shifting the goal from sounding human to delivering outcomes isn’t just a surface-level upgrade. It’s a change in what the agent is measured on.

The next era of customer experience won’t be defined by how closely AI resembles a human, but by how reliably, safely, and efficiently it delivers outcomes.

When generative AI agents combine conversational fluidity with depth and business alignment, we create a true harmony between human expectation, technology, and operational scale.

Human and/or generative agents

Probabilistic systems are often compared against the benchmark of human parity—a goal that guides much of artificial general intelligence research. But contact centers are a different context entirely. They are domain-specific, bound by policy and compliance, and optimized for consistent intent resolution. In this environment, success isn’t general intelligence but reliably delivering outcomes that drive business impact.

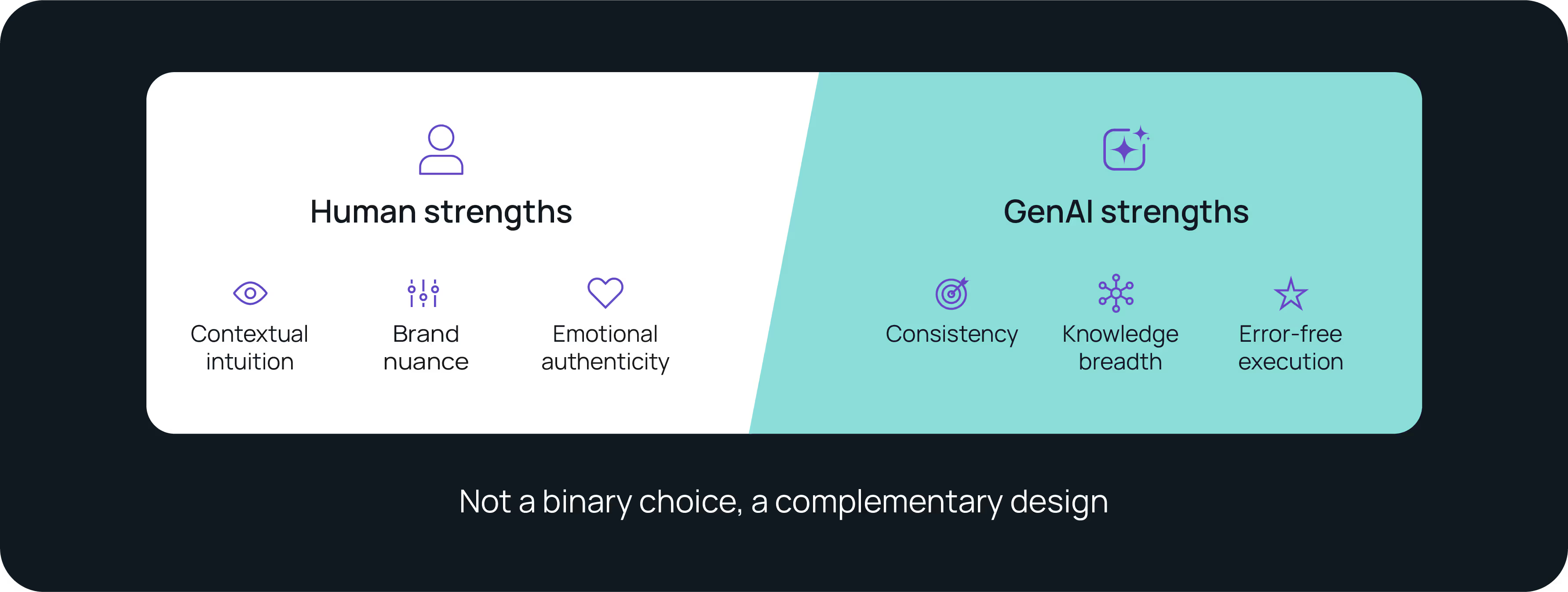

Comparing the performance of humans and generative agents is still a useful exercise. Human agents bring deep historical context, learned experiences, and situational intuition. But they also introduce variability—bias, fatigue, misinterpretation, and inconsistency. These everyday fluctuations become coaching opportunities, but they also expose the limits of human scalability, particularly in high-volume environments or interactions that require niche knowledge or wide-ranging reference points.

Generative AI agents excel in those domains, where consistency, precision, and instant access to a vast knowledge base matter most. Yet, there are still elements of interaction where humans lead: interpreting subtle intent, applying brand-specific nuance, and expressing empathy in a way that feels authentic. These are not weaknesses of generative systems, but areas of ongoing learning, refinement, and replication.

The path forward isn’t binary. It’s not about choosing human or AI. It’s about designing a system where both play to their strengths.

A new take on “human-like” in the contact center

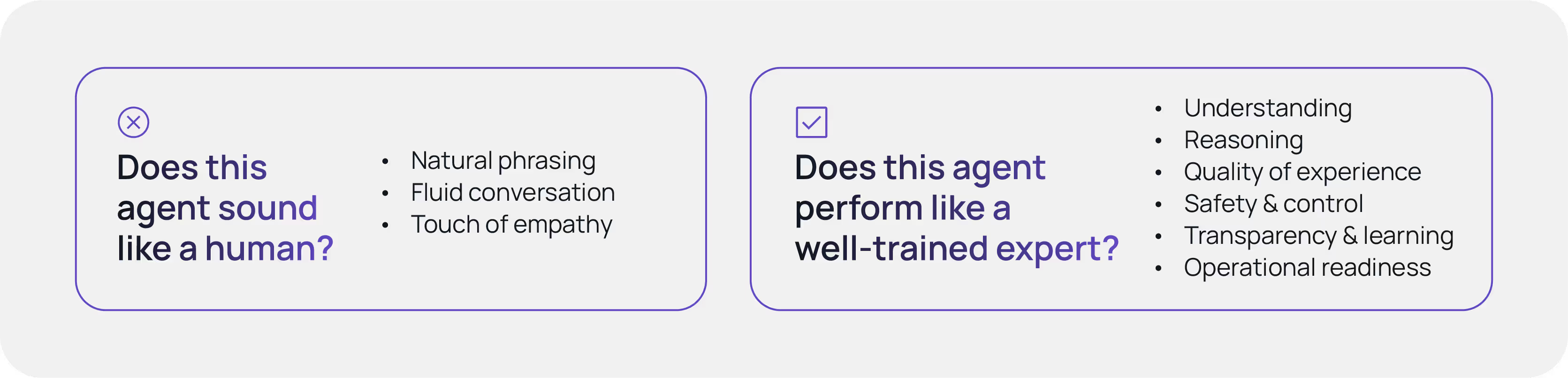

So if “sounding human” is the wrong goal, what should “human-like” mean in the context of generative AI agents?

It should mean capable. It should mean reliable. An AI agent should be intelligent enough to resolve problems, match tone and rhythm, and operate safely in a system that demands compliance, consistency, and visibility. Here’s an example:

Incorrect framing: Does this agent sound like a human?

Better framing: Does this agent perform like a well-trained expert?

This is exactly the standard we should be meeting. But it’s also one that no human can guarantee.

Pillars for human-like performance that matter

When we think about performance, we mean more than just outputs. We mean consistent, repeatable behaviors under pressure. To meet enterprise-grade expectations, a generative AI agent needs to demonstrate these six core capabilities:

- Understanding: Does the AI agent detect the user’s intent on the first turn? Does it use precise context? If the AI agent doesn’t understand, that isn’t just inconvenient for your operations; it’s costly.

- Reasoning: Does the AI agent maintain logic across multi-turn interactions? Does it adapt when the customer course-corrects? Does it know when to ask for clarification instead of guessing?

- Quality of experience: Does the AI agent answer quickly without redundancy or latency? Do its interactions feel direct and efficient, not overly conversational?

- Safety and control: Does the AI agent operate within your defined guardrails? Does it expose confidence levels? Does it escalate to a human when thresholds aren’t met? If the system isn’t observable, it’s not governable.

- Transparency and learning: Does the AI agent improve over time based on real interactions? Does it adjust without retraining from scratch? Does it provide a rationale when needed, especially when decisions affect policy, billing, or personal data?

- Operational readiness: Does the AI agent integrate into real-world workflows and not just lab conditions? Does it support monitoring, logging, and version control? Systems need to scale and be serviceable.
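To make the safety-and-control questions above more concrete, here is a minimal Python sketch of threshold-based escalation. It is illustrative only: `AgentTurn`, `Decision`, `route`, and the 0.75 threshold are hypothetical names and values chosen for this example, not part of any specific product or API. It assumes the agent exposes a confidence score between 0 and 1 and that a separate policy check has already flagged whether the drafted reply stays within guardrails.

```python
from dataclasses import dataclass

# Hypothetical default; a real deployment would tune this per intent and policy.
CONFIDENCE_THRESHOLD = 0.75


@dataclass
class AgentTurn:
    intent: str              # detected customer intent (illustrative label)
    confidence: float        # assumed score in [0, 1] exposed by the agent
    within_guardrails: bool  # assumed result of a policy check on the draft reply


@dataclass
class Decision:
    action: str  # "respond" or "escalate"
    reason: str  # recorded so the decision can be logged and reviewed later


def route(turn: AgentTurn, threshold: float = CONFIDENCE_THRESHOLD) -> Decision:
    """Decide whether the AI agent answers or hands off to a human."""
    # Guardrail violations always escalate, regardless of confidence.
    if not turn.within_guardrails:
        return Decision("escalate", "guardrail check failed")
    # Low confidence escalates instead of guessing.
    if turn.confidence < threshold:
        return Decision("escalate", f"confidence {turn.confidence:.2f} below {threshold:.2f}")
    return Decision("respond", "confidence and guardrails satisfied")


if __name__ == "__main__":
    for turn in (
        AgentTurn("billing_dispute", 0.92, True),
        AgentTurn("billing_dispute", 0.40, True),
        AgentTurn("refund_request", 0.99, False),
    ):
        decision = route(turn)
        print(turn.intent, decision.action, "-", decision.reason)
```

Returning a reason alongside the action is one simple way to keep each routing decision observable, which is the precondition for governing it.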

What emotional intelligence really looks like in a generative AI agent

Too often, empathy in AI systems gets reduced to a script: “I’m sorry to hear that.” “I understand how you feel.” But do those statements actually build trust? Rarely. Predictable, respectful service does.

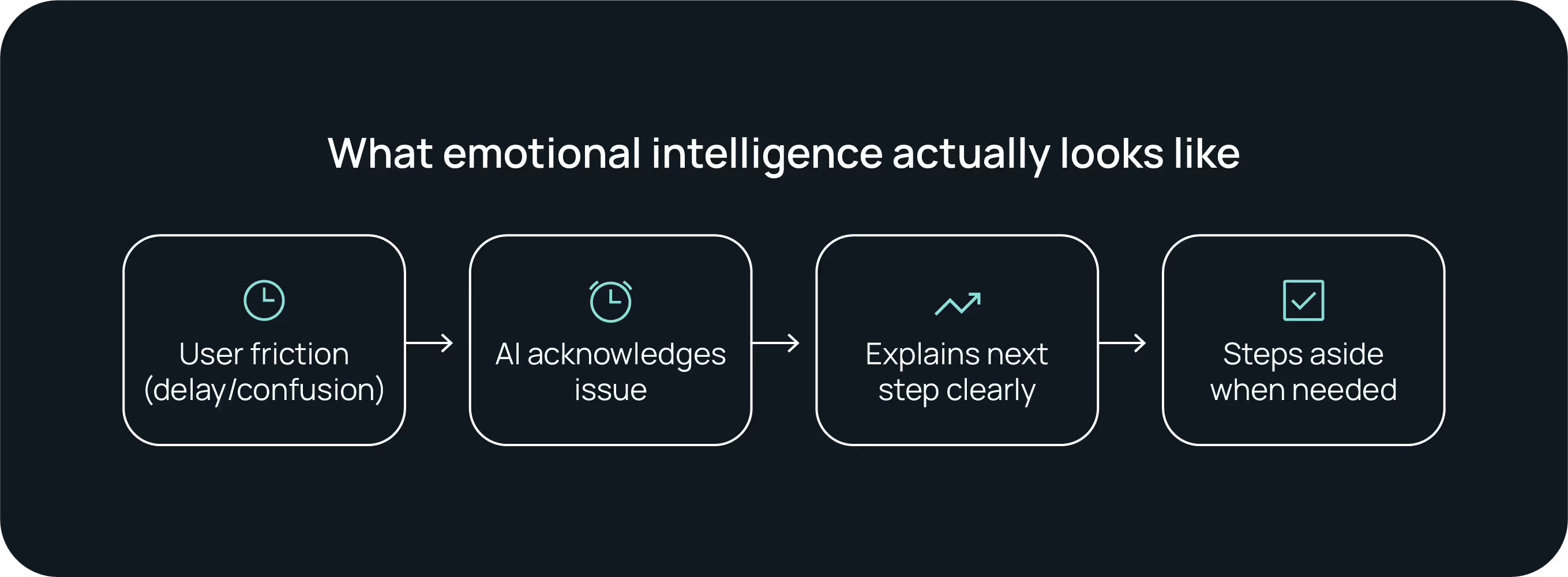

Emotional intelligence in a generative AI agent isn’t about expressing feelings. It’s about recognizing friction and reducing it. That means acknowledging when there’s a delay, explaining what comes next in resolving the customer’s issue, providing clarity when there’s ambiguity—and stepping aside (quickly) when a human is needed.

Trust is earned through clarity, responsiveness, and system-level self-awareness. Not sentiment.

Why this definition matters

For years, brands have defined their customer service strategy around how humans support customers across multiple channels. That made sense—until now. With generative AI agents becoming ready and capable, “human-like” can no longer be the goal.

What matters is performance.

Success now depends on metrics that reflect real business impact: containment, average handle time, number of interactions served, ease of maintenance, and overall cost to serve.
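As a rough illustration of how a few of these metrics might be computed from interaction records, consider the Python sketch below. The `Interaction` fields and the `summarize` function are hypothetical, and the definitions are assumptions made for this example: containment is taken to be the share of interactions resolved without escalation to a human, and cost to serve is averaged per interaction. Teams often define these metrics differently, so treat it as a starting point rather than a standard.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Interaction:
    handle_seconds: float  # time from first message to resolution or handoff
    escalated: bool        # True if a human agent had to take over
    cost: float            # assumed fully loaded cost of serving this interaction


def summarize(interactions: List[Interaction]) -> Dict[str, float]:
    """Compute simple service metrics over a batch of interactions."""
    total = len(interactions)
    if total == 0:
        raise ValueError("at least one interaction is required")
    contained = sum(1 for i in interactions if not i.escalated)
    return {
        "interactions_served": total,
        # Assumed definition: share of interactions not escalated to a human.
        "containment_rate": contained / total,
        "average_handle_time_s": sum(i.handle_seconds for i in interactions) / total,
        "cost_per_interaction": sum(i.cost for i in interactions) / total,
    }


if __name__ == "__main__":
    sample = [
        Interaction(60, False, 1.0),
        Interaction(120, False, 1.0),
        Interaction(180, False, 1.0),
        Interaction(240, True, 5.0),
    ]
    for name, value in summarize(sample).items():
        print(f"{name}: {value}")
```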

Generative AI agents aren’t here to mimic human agents. They’re here to automate intelligently and elevate everything around them.