As AI agent solutions for the contact center proliferate, so does the jargon used to describe them. The terminology isn’t always used consistently, which makes navigating the landscape of AI for CX difficult. Some terms remain close to the meaning AI experts intend, while others take on new connotations specific to customer service. And when different CX tech vendors use the same terms to mean different things, confusion reigns.

This list of essential terms and concepts should provide some clarity. Let’s jump in.

AI, generative AI, agentic AI—What’s the difference?

Artificial Intelligence (AI)

AI is a set of technologies that enable computers to simulate the way humans think, including the ability to reason, learn, problem-solve, and create content. Where traditional programming relies on explicitly coded rules, AI learns from data, which allows it to identify patterns, make predictions, and adapt to new conditions.

There are several different types and classifications of AI. These are the types that are relevant for AI agents in contact centers.

Generative AI

Generative AI is AI that can create (generate) new content, including complex long-form text, human-like conversation, images, audio, and video. It’s first trained on a vast amount of data. Then, it draws on the patterns and relationships in its training data to understand requests and create new content.

Agentic AI

Agentic AI refers to solutions and platforms that can act autonomously without explicit human direction. Where traditional software follows step-by-step instructions, agentic AI solutions are goal-directed and do not require the coding of specific steps or deterministic paths. Instead, they orchestrate multiple components, including generative AI, to reason and take action to solve problems on their own. Agentic AI can learn from experience and improve its own performance over time. Because of these advanced capabilities, agentic AI is rapidly expanding automation possibilities in customer service.

What about conversational AI?

Until practical applications of generative AI for customer service came along, conversational AI took center stage in customer-facing automation. Every solution provider with a chatbot touted its conversational AI capabilities because they enabled a more natural conversational experience for customers. So, what, exactly, is conversational AI?

Conversational AI

Conversational AI uses Natural Language Processing (NLP) to interpret and respond to human language. Because it can converse naturally, understand intent, and determine sentiment, it enables technologies like chatbots to interact with customers.

Chatbots powered by conversational AI can provide effective automated service for simple inquiries. But they hit a roadblock with complex issues, nuanced conversations, and issues that require completing tasks in backend systems.

To complicate matters, some vendors refer to their AI agent as a conversational AI agent simply to emphasize the conversational nature of the solution, even though it relies on generative AI rather than traditional NLP techniques.

AI agents in the contact center

Outside the realm of customer service, the term AI agent refers to any AI solution that operates autonomously without step-by-step instructions, including those that work in the background to analyze data, improve process efficiency, or automate routine tasks. But in the contact center, the word agent has a specific meaning: a team member who serves customers directly. As a result, the term AI agent takes on that meaning. An AI agent in the contact center serves customers directly.

Even so, AI agent can refer to a wide range of customer service solutions with very different capabilities. It all depends on how each vendor talks about their products. When ASAPP refers to an AI agent, we mean something specific—a generative AI agent.

Generative AI agent

Within the contact center, a generative AI agent is a multi-layered solution that leverages the language and reasoning capabilities of generative AI to serve customers directly over voice or chat. It integrates with other tools and systems and uses APIs to retrieve data and perform tasks necessary to resolve the customer’s issue. It works autonomously and is capable of complex problem-solving. Because it is adaptive and context-aware, it learns and improves over time.

Intelligent Virtual Agent (IVA)

The term IVA typically refers to a voicebot or chatbot that uses conversational AI to interact with customers and handle simple inquiries and transactions. It lacks the flexibility and broad capabilities of a generative AI agent.

AI agent

In customer service, this is a catch-all term that can refer to a wide range of solutions, from conversational AI chatbots to fully agentic solutions built on a foundation of orchestrated generative AI models. From a more technical viewpoint, an AI agent is a system that uses the capabilities of generative AI to reason, problem-solve, and autonomously perform tasks. Robust generative AI agent solutions actually include multiple AI agents, using each for the tasks it performs best.

So, when a vendor uses this term, stop and ask for more information about how their solution is structured and which technologies it uses.

Critical components of a generative AI agent

A robust, enterprise-ready solution is much more than a generative AI model. Here are a few terms you’re likely to encounter when researching solutions.

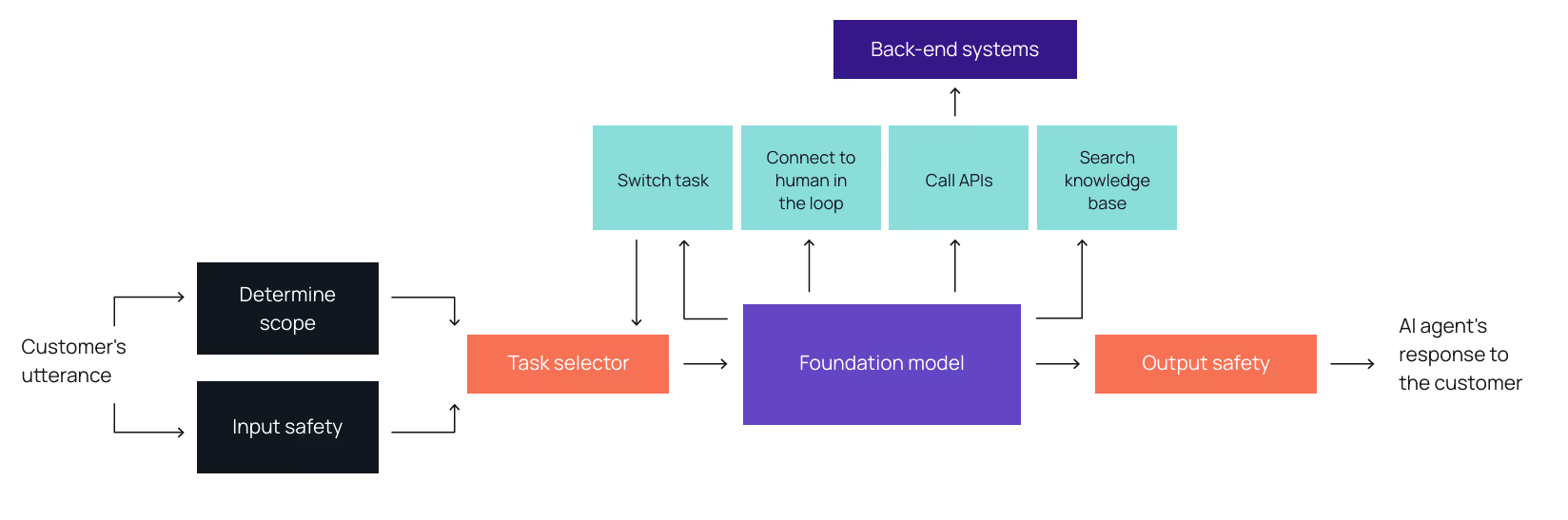

Multi-agent architecture

A system in which multiple specialized AI agents work together, coordinated by an orchestration layer. Because each AI agent is used for the specific tasks it performs best, this approach improves speed, accuracy, and scalability in generative AI agent solutions for customer service.

Orchestration layer

The orchestration layer is the backbone of any enterprise-ready agentic AI solution. It manages the complex, simultaneous interactions and workflows across all components of the solution, including multiple AI models, prompt management, data retrievals, safety mechanisms, and API calls.
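
To make the idea concrete, here is a minimal sketch of an orchestration layer routing a customer message to specialized agents. Everything in it is hypothetical: the `Orchestrator` class, the three agent functions, and the keyword-based routing are stand-ins for illustration only. A real solution would likely use a model to detect intent and would coordinate far more than a single function call.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


# Hypothetical specialized agents: each one handles a single kind of task.
def billing_agent(message: str) -> str:
    return "billing: looked up the latest invoice"


def shipping_agent(message: str) -> str:
    return "shipping: checked the delivery status"


def fallback_agent(message: str) -> str:
    return "fallback: asked the customer for more detail"


@dataclass
class Orchestrator:
    """Toy orchestration layer: picks an agent and records each routing step."""

    routes: Dict[str, Callable[[str], str]]
    fallback: Callable[[str], str]
    trace: List[str] = field(default_factory=list)

    def handle(self, message: str) -> str:
        text = message.lower()
        # Assumption: keyword matching stands in for model-based intent detection.
        for keyword, agent in self.routes.items():
            if keyword in text:
                self.trace.append(f"routed to {agent.__name__}")
                return agent(message)
        self.trace.append(f"routed to {self.fallback.__name__}")
        return self.fallback(message)


orchestrator = Orchestrator(
    routes={"invoice": billing_agent, "delivery": shipping_agent},
    fallback=fallback_agent,
)
reply = orchestrator.handle("Where is my delivery?")
```

The `trace` list hints at why orchestration matters for monitoring: every routing decision is recorded in one place rather than scattered across individual agents.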

APIs

Application Programming Interfaces (APIs) are protocols that allow different systems and software applications to interact with one another. APIs are a crucial component of a generative AI agent because they allow it to access backend systems to retrieve data and perform tasks necessary to resolve a customer’s issue.

Retrieval-Augmented Generation (RAG)

RAG is a process that grounds an AI agent on your authoritative sources of information. To ensure the AI agent’s responses align with your policies and brand expression, RAG enables the AI to refer to your knowledge base or other sources of truth instead of simply relying on its training data.
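
The flow can be sketched in a few lines: retrieve the most relevant passage from a trusted source, then place it in the prompt so the model answers from it. This is a simplified illustration, not a production design. The knowledge base entries are invented, and the word-overlap ranking in `retrieve` is an assumption standing in for the embedding-based search most real systems use.

```python
import re
from typing import List, Set

# Hypothetical knowledge base entries, invented for this example.
KNOWLEDGE_BASE = [
    "Refunds are issued within 5 business days of receiving the returned item.",
    "Customers can change a flight up to 24 hours before departure.",
    "Password resets require verification by email or SMS.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], top_k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question: str, documents: List[str]) -> str:
    """Combine retrieved passages and the question into one grounded prompt."""
    passages = retrieve(question, documents)
    context = "\n".join(f"- {passage}" for passage in passages)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you do not know.\n"
        f"Sources:\n{context}\n"
        f"Customer question: {question}"
    )


prompt = build_grounded_prompt("How long do refunds take?", KNOWLEDGE_BASE)
```

The resulting `prompt` would then be sent to a generative model, which is the step this sketch leaves out.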

Prompt engineering

Generative AI agents are designed to produce responses based on prompts. If you’ve experimented with any generative AI tools, you know that the quality of your prompts affects the quality of the output. Prompt engineering includes a range of techniques and strategies for structuring the prompts and instructions the AI relies on. The goal is to improve the AI’s ability to understand intent and nuance so that it produces more accurate and reliable responses.

Testing a generative AI agent

Before launching your first AI agent use case, you and your solution provider will thoroughly test your solution to ensure its safety and reliability. This process involves multiple rounds of testing using different techniques. Based on these tests, you’ll make adjustments to improve performance. You’ll want to be familiar with these common terms related to testing.

AI hallucination

Hallucination refers to instances in which the AI produces a response that is not based on information from a trusted source, such as your knowledge base. When they lack the specific information they need, AI models have a tendency to rely on their intrinsic knowledge. Sometimes that yields accurate information, and sometimes it doesn’t. But this tendency underscores the importance of mechanisms to prevent, detect, and mitigate hallucinations before they reach your customers.

Red teaming

Red teaming is a method of testing an AI agent to probe for weaknesses in security and AI safety. This testing helps ensure that the AI agent will not leak sensitive data, generate content that’s biased or inaccurate, or engage in other behaviors that would be harmful to your customers or your business.

Simulation testing

The flexible and unscripted nature of generative AI makes it difficult to test well. The most effective way to test how an AI agent performs is to run realistic simulations of customer service scenarios with different customer personas. This type of testing goes beyond validating individual tasks and responses. The simulations include complete, multi-turn conversations, API calls, and information retrievals. This approach ensures that the AI agent provides correct information, adheres to your company policies, and stays on-brand across a variety of conversational paths.
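
A stripped-down version of such a harness might look like the sketch below. It is illustrative only: `stub_agent` is a placeholder for the AI agent under test, and the personas and forbidden phrases are invented. A realistic harness would also generate customer turns dynamically and check API calls and retrievals, which this sketch does not attempt.

```python
from typing import Callable, Dict, List


# Hypothetical stub standing in for the AI agent under test.
def stub_agent(message: str) -> str:
    if "refund" in message.lower():
        return "I can help with that. Refunds go back to the original payment method."
    return "Could you tell me a bit more about the issue?"


# Hypothetical personas, each with a scripted multi-turn conversation.
PERSONAS: Dict[str, List[str]] = {
    "impatient": ["I want a refund NOW", "Why is this taking so long?"],
    "confused": ["Something is wrong with my order", "I think I need a refund?"],
}

# Phrases the agent must never use (an assumed policy, for illustration).
FORBIDDEN_PHRASES = ["guarantee", "legal advice"]


def run_simulation(
    agent: Callable[[str], str],
    personas: Dict[str, List[str]],
    forbidden: List[str],
) -> Dict[str, List[str]]:
    """Play every persona's turns against the agent and collect policy violations."""
    results: Dict[str, List[str]] = {}
    for name, turns in personas.items():
        violations: List[str] = []
        for turn in turns:
            reply = agent(turn)
            violations += [phrase for phrase in forbidden if phrase in reply.lower()]
        results[name] = violations
    return results


report = run_simulation(stub_agent, PERSONAS, FORBIDDEN_PHRASES)
```

An empty list for a persona means no forbidden phrase appeared anywhere in that conversation.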

Measuring and monitoring a generative AI agent

As you incorporate generative AI agents into your customer service operation, you will need to update your approach to performance management and quality assurance, including the metrics you use. In some cases, you’ll continue to rely on the same metrics, but they will take on a new meaning and a new role in your quality management strategies.

Explainability

Explainability refers to the degree to which humans can understand and interpret the AI agent’s reasoning and actions. A solution with a high degree of explainability provides more than a record of its responses and the tasks it has performed. It also explains in clear language how it reasoned through the issue and what data or information it used in that reasoning. This level of explainability gives you the visibility you need to effectively monitor and optimize your AI agent.

Fine-tuning

Fine-tuning is the process of refining your AI agent’s behavior so that it more closely aligns with your policies, expectations, and business objectives. This process involves closely monitoring performance to identify opportunities for improvement. That might include modifying task instructions, re-engineering prompts, updating APIs, revising or filling gaps in your knowledge base, or providing more direct feedback to the AI agent. Fine-tuning is most intense in the period surrounding the launch of a use case. But you will continue to fine-tune as needed after that.

Average Handle Time (AHT)

AHT has long been considered a key efficiency metric in contact centers. But for interactions handled by an AI agent, AHT is more closely aligned with customer effort than operational efficiency. It should still be measured, but it will play a different role in your KPIs with an AI agent.

Containment Rate

Traditionally, containment was viewed as a binary choice – either the automated service contained an interaction or it didn’t. But with regard to AI agents capable of resolving an ever-growing range of complex issues, containment will need to become a more nuanced measure. Interactions that your AI agent escalates or consults with a human agent to resolve are not automatically failures. If the AI agent reduced the amount of time your human agents needed to spend on those interactions, it’s still increasing efficiency, despite the lack of containment.

First Contact Resolution (FCR)

FCR is one of the most critical metrics in every contact center. That won’t change with AI agents. Because their purpose is to resolve customer issues, FCR will be a much more important KPI than containment.

Escalation rate

Even with a highly capable AI agent, some interactions will still be escalated to a human to resolve. In fact, for some use cases, you might anticipate that a significant percentage of interactions will require a handoff to a human. In those cases, if your AI agent is saving the human agent time by gathering information and performing tasks that lay the groundwork for resolution, it’s still improving operational efficiency. So, you’ll want to consider escalation rates carefully for each use case. Not every escalation is a failure.
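
To see how containment, escalation, and FCR relate, it can help to compute all three from the same set of interaction records. The sketch below uses an invented `Interaction` record and made-up sample data. The definitions are assumptions, since each contact center defines these metrics a little differently. Here, an interaction counts as contained only when no human was involved at all.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Interaction:
    resolved_first_contact: bool
    escalated: bool        # handed off to a human agent
    human_consulted: bool  # AI asked a human for help but kept the conversation


def rate(flags: List[bool]) -> float:
    """Share of True values, or 0.0 for an empty list."""
    return sum(flags) / len(flags) if flags else 0.0


def summarize(interactions: List[Interaction]) -> Dict[str, float]:
    return {
        # Assumed definition: contained means no human involvement of any kind.
        "containment_rate": rate(
            [not (i.escalated or i.human_consulted) for i in interactions]
        ),
        "escalation_rate": rate([i.escalated for i in interactions]),
        "fcr": rate([i.resolved_first_contact for i in interactions]),
    }


# Made-up sample data for illustration.
sample = [
    Interaction(resolved_first_contact=True, escalated=False, human_consulted=False),
    Interaction(resolved_first_contact=True, escalated=False, human_consulted=True),
    Interaction(resolved_first_contact=False, escalated=True, human_consulted=False),
    Interaction(resolved_first_contact=True, escalated=True, human_consulted=False),
]
metrics = summarize(sample)
```

In this sample, FCR is higher than containment because two interactions were resolved on first contact with some human help, which is the pattern the definitions above describe.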

Labor hours saved

This one is a new metric that will be critical in evaluating the impact of your AI agent, not just with the initial deployment, but every time you launch a new use case. If you switched your AI agent off, how many more labor hours would you need to handle your interaction volume? If your AI agent is simply taking over the load from your IVR and traditional bots, or only handling simple inquiries that don’t use up much labor, it’s not delivering the savings you’re looking for.
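
One simple way to estimate this figure is to compare the human time your interaction volume would have required without the AI agent against the human time still spent on escalations and consultations. The function and the numbers below are assumptions for illustration, not a standard formula, and your own calculation may need to account for factors such as after-call work.

```python
def labor_hours_saved(
    interactions_handled: int,
    baseline_minutes_per_interaction: float,
    human_minutes_still_spent: float,
) -> float:
    """Estimated human labor hours avoided because the AI agent did the work.

    baseline_minutes_per_interaction: average human handle time without the AI agent.
    human_minutes_still_spent: human time on escalations and consultations.
    """
    baseline_minutes = interactions_handled * baseline_minutes_per_interaction
    return (baseline_minutes - human_minutes_still_spent) / 60


# Made-up example: 1,200 interactions that would each take a human 8 minutes,
# with humans still spending 600 minutes in total on escalations.
hours = labor_hours_saved(1200, 8, 600)
```

With these invented inputs, the estimate works out to 150 hours. If the AI agent only handles inquiries with a very low baseline handle time, the same calculation will show how little labor it is actually saving.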

Human in the loop

Human in the loop refers to the inclusion of human judgment and input to improve AI performance. What that human involvement looks like varies substantially from one AI agent solution to the next. For some, human involvement occurs only during training and testing. Humans monitor every action and response to provide feedback and redirection to the AI agent and to inform performance improvement. In those cases, once you’ve launched your AI agent, humans become nothing more than an escalation point, just as they are with traditional bots.

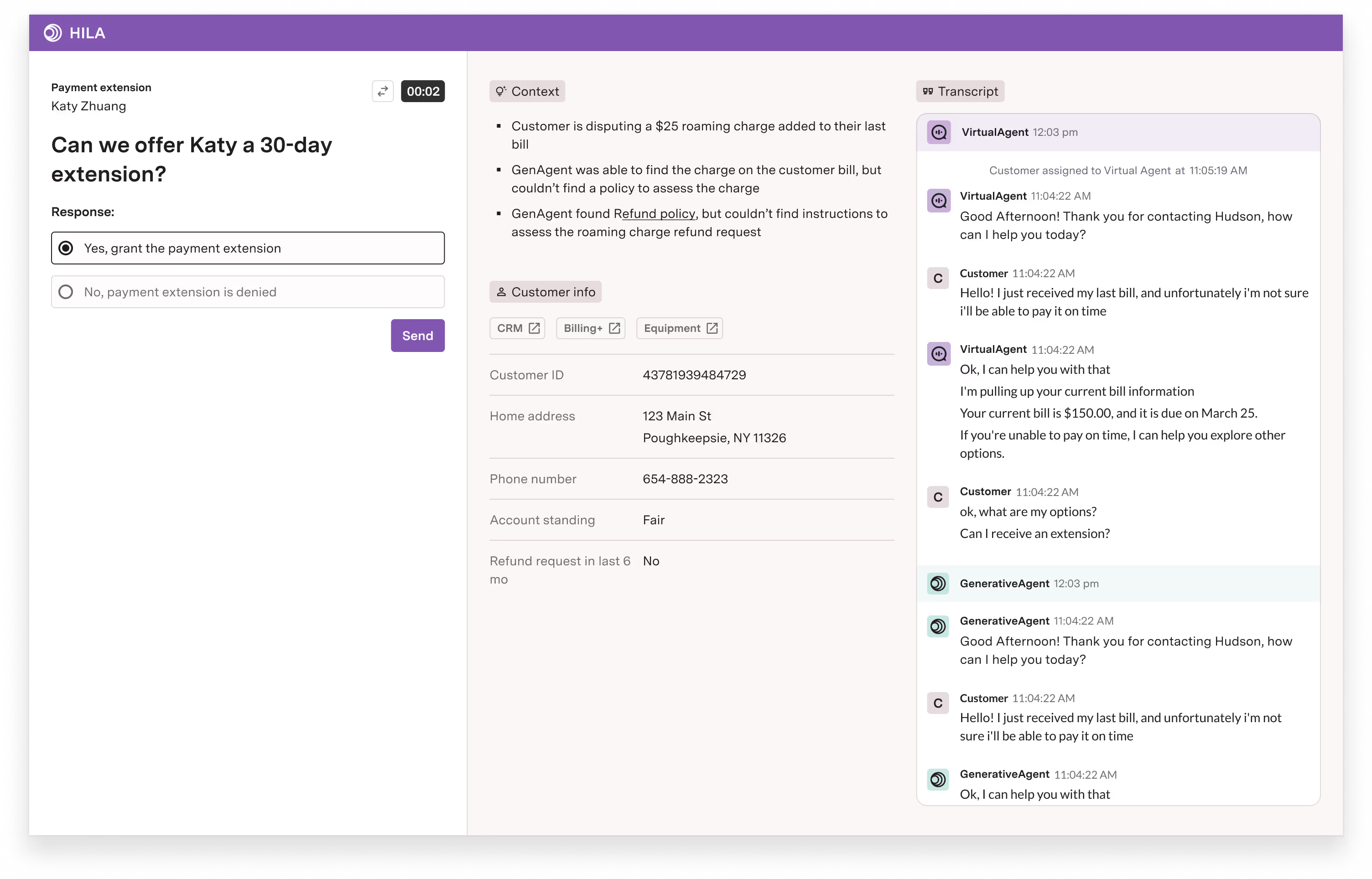

ASAPP takes a different approach. GenerativeAgent® includes a Human-in-the-Loop Agent (HILA™) workflow that enables real-time human-AI collaboration. In this model, the AI agent can consult a human any time it hits a roadblock to ask for guidance, information, or approval. Once it gets what it needs, it continues resolving the customer’s issue on its own, without handing off the interaction to a human.
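
The general consult-and-continue pattern can be sketched as follows. This is a generic illustration and not ASAPP's implementation: the `resolve_refund` function, the approval threshold, and the `ask_human` callback are all hypothetical.

```python
from typing import Callable, List

REFUND_APPROVAL_LIMIT = 100.0  # assumed policy threshold, purely illustrative


def resolve_refund(
    amount: float,
    ask_human: Callable[[str], bool],
    log: List[str],
) -> str:
    """The AI agent keeps the conversation and consults a human only when needed."""
    if amount > REFUND_APPROVAL_LIMIT:
        log.append("consulted human for approval")
        approved = ask_human(f"Approve refund of ${amount:.2f}?")
        if not approved:
            log.append("AI agent explained the denial to the customer")
            return "denied"
    # With approval in hand (or none required), the AI agent finishes the task itself.
    log.append("AI agent issued the refund")
    return "refunded"


events: List[str] = []
outcome = resolve_refund(250.0, ask_human=lambda question: True, log=events)
```

The point of the sketch is that the human answers one question and the AI agent completes the interaction, so the conversation is never handed off.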