Nirmal Mukhi


Nirmal Mukhi is the Chief Architect at ASAPP, where he builds machine learning capabilities and products. Prior to joining ASAPP, Nirmal held leadership positions in engineering and research at IBM, where he was R&D lead for Watson Education, and served as CTO at TRANSFR. He has authored over 30 publications (with 4,500+ citations), holds 15 patents, and has appeared in a Discovery Channel documentary about AI.

The challenge of scaling up AI agent use cases—solved

We’ve been building AI agents long enough to know that making an agent perform really well, while difficult, is only one part of the challenge enterprises need to solve. The other is making agents maintainable as you scale. That requires exercising a different set of muscles.

First, let’s be clear about what we mean by scalability. It isn’t just about interaction volume. For an AI agent, scalability is about the number, variability, and complexity of the use cases it handles. The effort to tackle a single use case well, so that every customer has the kind of experience you intend, is high. How do you ensure that you don’t have to repeat that level of effort as you expand to a second, seventh, or twentieth use case?

There’s an aphorism in software development that applies well here—good programmers build for reuse. That’s truer than ever when building agentic systems.

Commonalities in use case workflows

Consider this practical example.

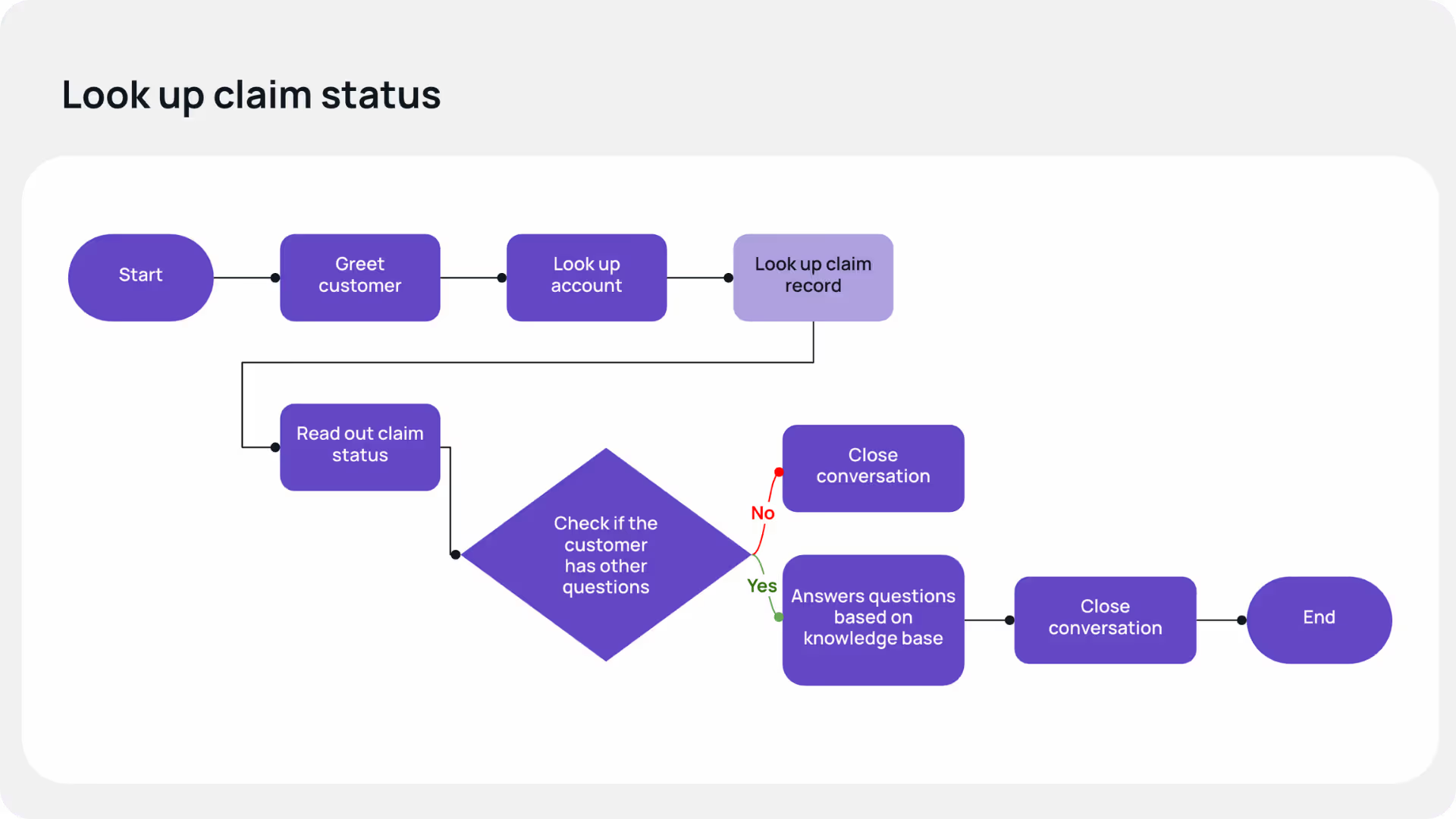

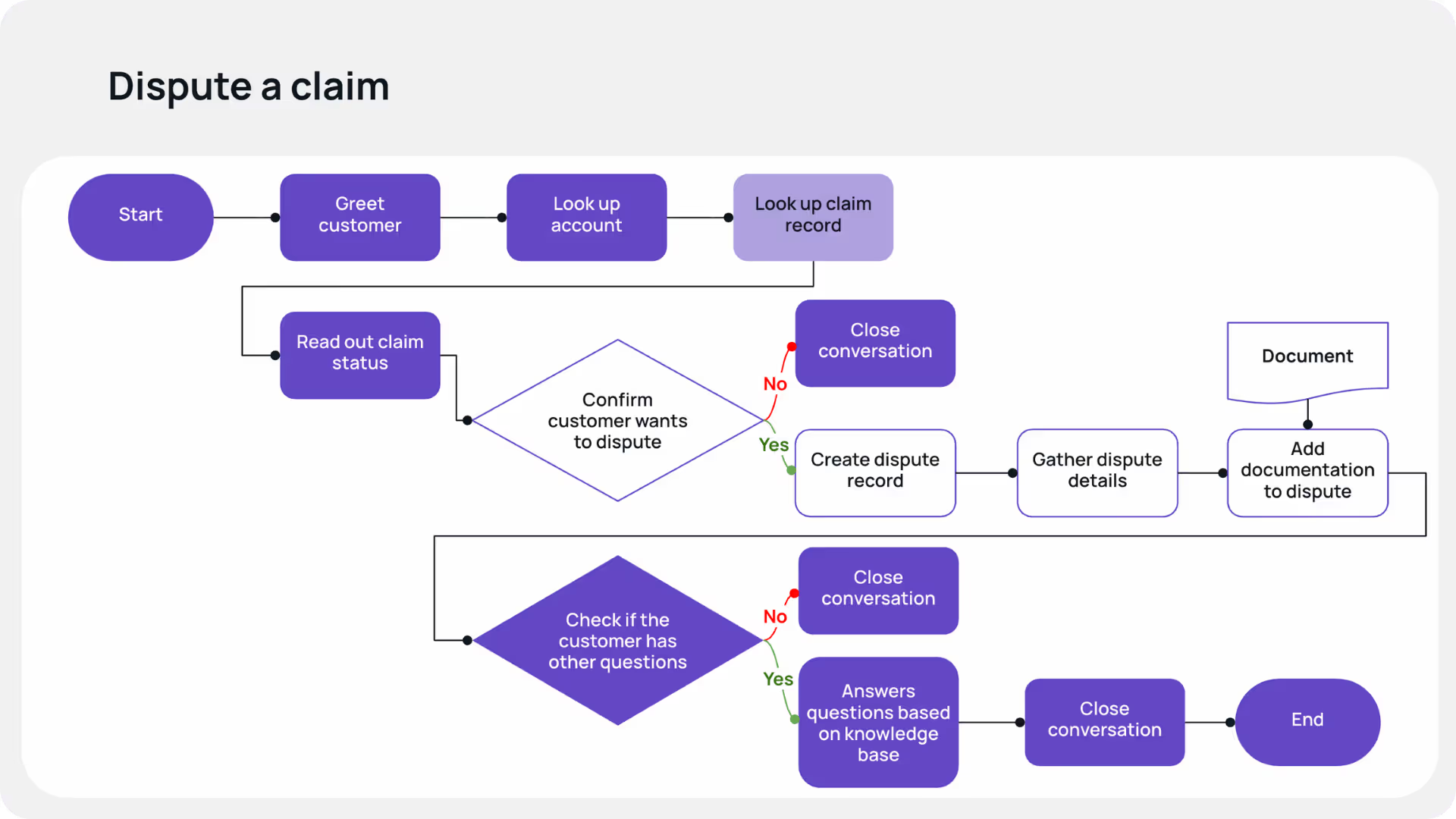

Let’s say you’re working on building agents for an insurance company for the following use cases:

- Look up claim status

- File a claim

- Add documentation to a claim

- Dispute a claim

ASAPP often starts by building out one use case to prove the value of the product. In this case, let’s say we began with the easiest use case, looking up claim status. But very quickly, our customers want to translate that success to other use cases like filing a claim or disputing one.

Here are rough flowcharts for the workflows associated with these use cases. Steps that the workflows have in common are colored purple, and the intensity of the purple reflects how many workflows share the step.

The commonalities here are not unusual. In an enterprise, many steps are repeated across different workflows. To be executed successfully, these steps often rely on the same tools, the same policies or business logic, and the same knowledge base documents.

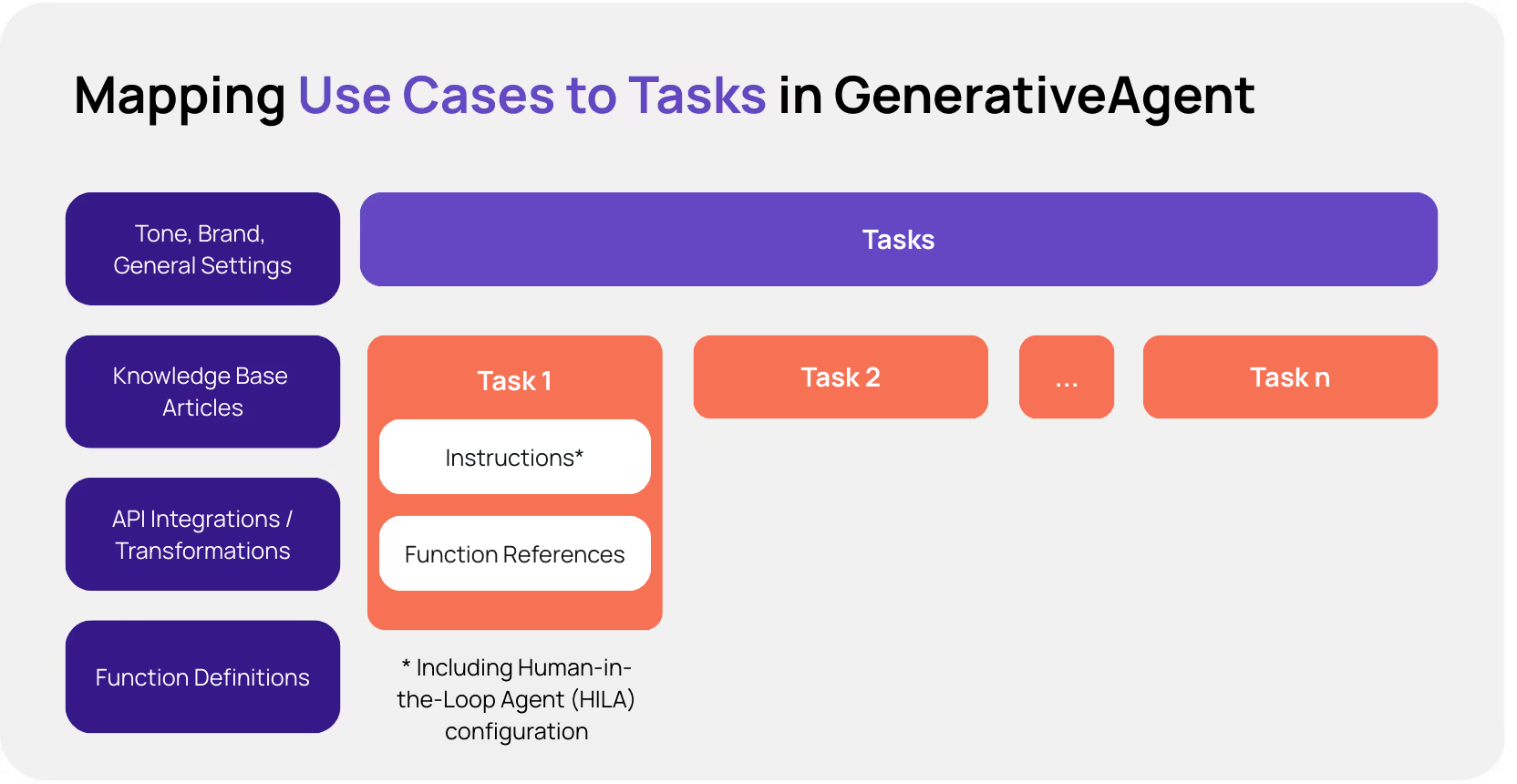

When building out use cases with GenerativeAgent®, part of ASAPP's Customer Experience Platform (CXP), each use case maps to a task, which encompasses the instructions needed to run that use case and references to functions. Task instructions detail the goal to be achieved and the steps to be executed to accomplish that goal successfully. Function references cover integrations with APIs and other agentic systems via REST/MCP, as well as internal functions to access memory, escalate to a human agent, or even just consult a human agent in the background to support GenerativeAgent’s logic. Instructions include both prompt-like natural language and more complex logic described using Jinja templating. Tasks implicitly use associated knowledge base articles to help accomplish goals. Other common settings related to tone and brand are also injected into instructions automatically.
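To make the anatomy of a task concrete, here is a minimal sketch of a task as a data structure: a goal, ordered steps, and references to functions, with shared tone and brand settings injected when the instructions are rendered. The field names, the `render` method, the function names, and the settings are all hypothetical and are not the GenerativeAgent schema; Python's `string.Template` stands in for the Jinja templating the platform actually uses.

```python
from dataclasses import dataclass, field
from string import Template


# Hypothetical shape of a task; not the GenerativeAgent configuration format.
@dataclass
class Task:
    name: str
    goal: str
    steps: list[str]
    function_refs: list[str] = field(default_factory=list)

    def render(self, settings: dict[str, str]) -> str:
        """Build instruction text, injecting shared tone/brand settings first."""
        # string.Template is a stand-in for Jinja templating in this sketch.
        header = Template("Tone: $tone. Brand: $brand.").substitute(settings)
        lines = [header, f"Goal: {self.goal}"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        lines.append("Available functions: " + ", ".join(self.function_refs))
        return "\n".join(lines)


claim_status = Task(
    name="claim_status_lookup",
    goal="Tell the customer the current status of their claim.",
    steps=[
        "Look up the customer's account.",
        "Find the latest claim.",
        "Read out its status.",
    ],
    # Illustrative function names (API integrations plus a human escalation).
    function_refs=["lookup_account", "get_latest_claim", "escalate_to_human"],
)

rendered = claim_status.render({"tone": "warm and concise", "brand": "Acme Insurance"})
print(rendered)
```

Keeping tone and brand outside the task and injecting them at render time mirrors the point above: those settings are common to every task, so no task has to restate them.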

As we build out additional use cases, we add more tasks. GenerativeAgent has a built-in task selector that chooses the right task depending on the customer’s needs, and can switch back and forth between tasks during a conversation as needed.

Creating new use cases—and a maintenance problem

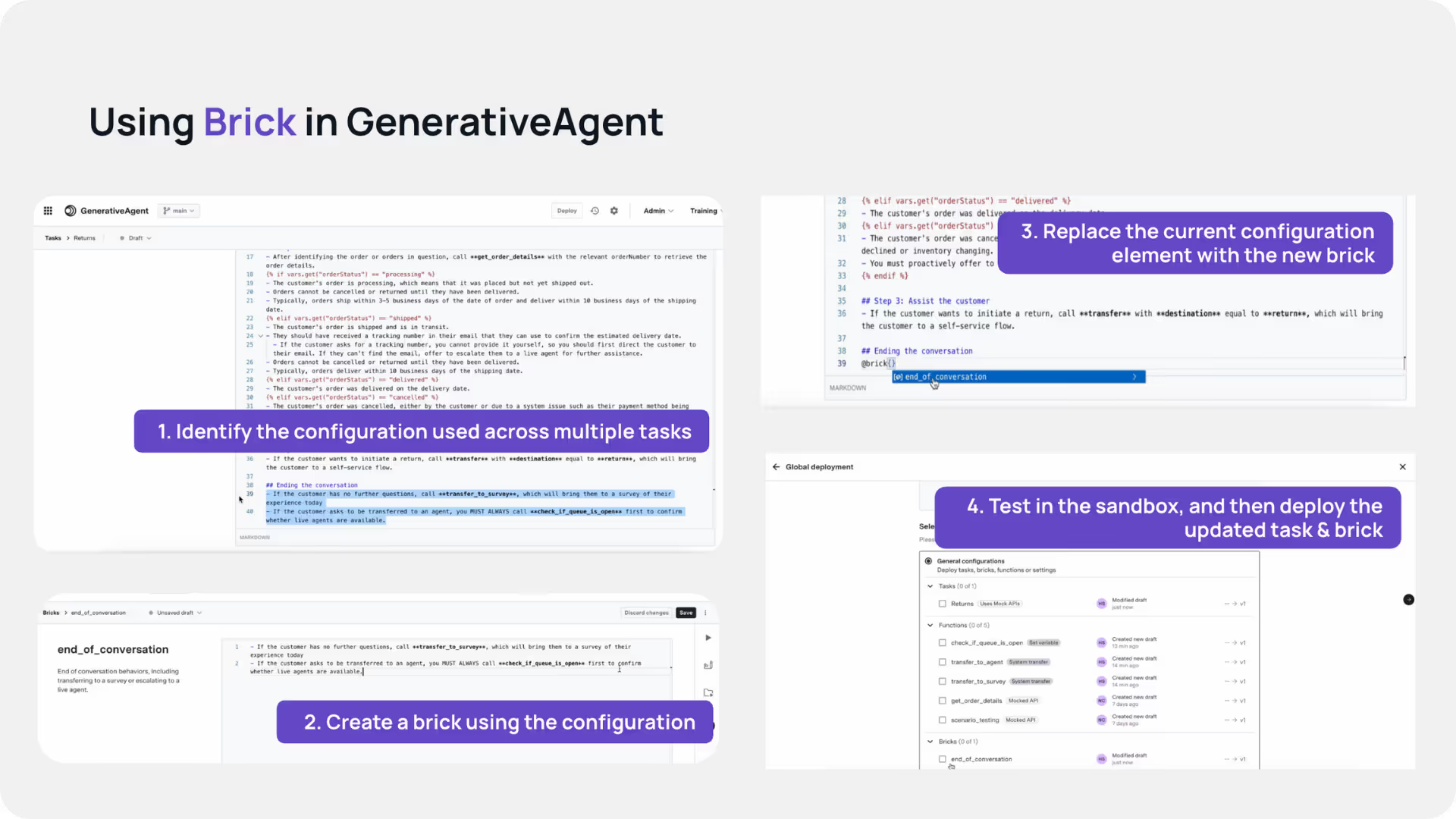

For our insurance use cases, we would begin with a single task for looking up claim status. Let’s say we next had to add three more tasks for the other use cases. In the past, we would address this situation simplistically by taking the relevant fragments of task instructions from the claim status lookup task and copying them into the new use cases. While this works, it results in similar logic repeated in multiple places.

Let’s say that sometime in the future, the process for looking up an account changes. Instead of asking for an account number and validating the account information, the new process uses the caller’s phone number to look up the account. Because we’ve repeated the same logic across multiple tasks, we would have to update the instructions in each task. It quickly becomes a maintenance nightmare.

In our first attempt to solve the maintenance problem, we used the GenerativeAgent memory capabilities to support daisy-chaining logic. We would create a top-level task to capture information (for example, look up an account, find active claims, look up the corresponding claim records, and store these details in memory) and then shift to a more specific task (such as reading out claim status, adding documentation to a claim, or filing a dispute).

With this approach, changes could be centralized. But having logic split up between tasks required the system to transition between tasks at the right time and with perfect accuracy, which created risk.

Which is why we created bricks.

A solution at last—building with bricks

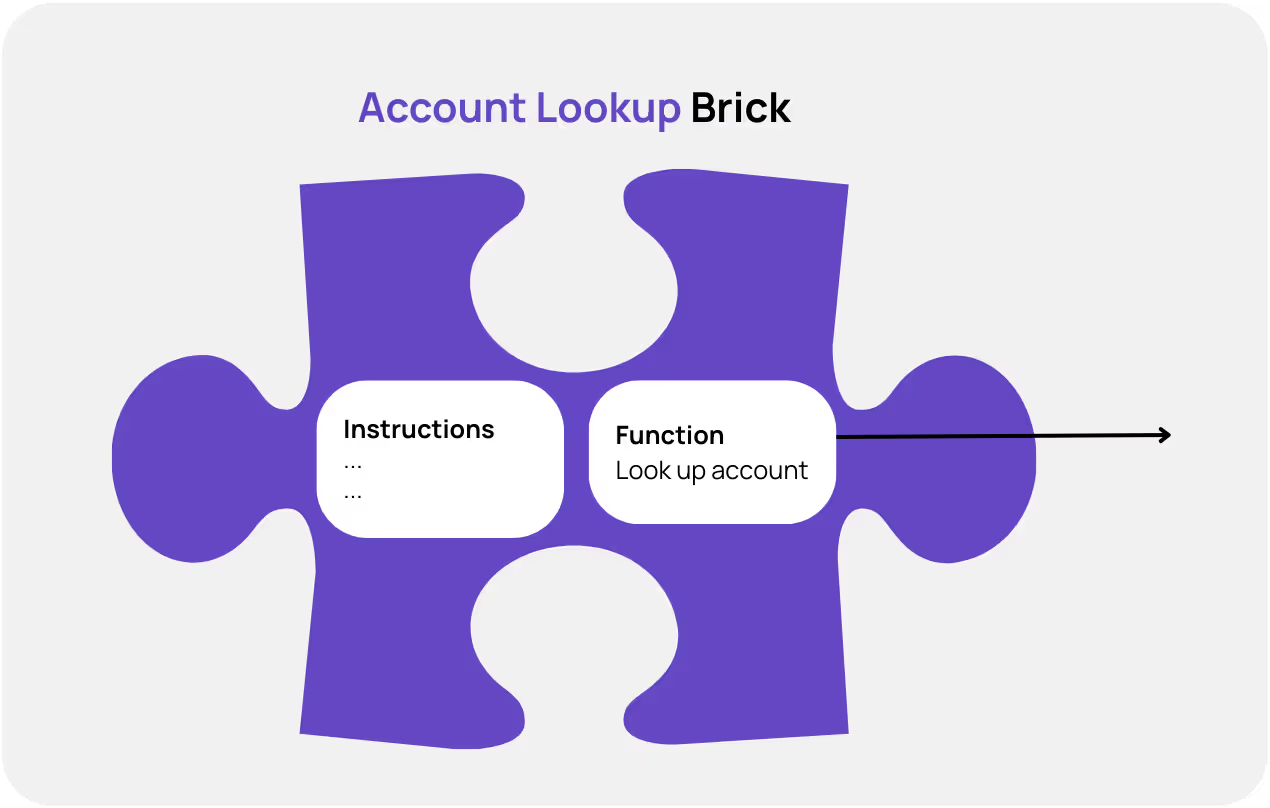

Bricks are versioned, reusable prompt fragments that can be inserted into tasks, allowing teams to share logic, tone, policies, and workflow steps across use cases without duplication.
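One way to picture "versioned, reusable prompt fragments" is a small registry that keeps every published version of a brick and a step that expands brick placeholders inside task instructions. This is a sketch under stated assumptions: the `{{ brick:name }}` and `{{ brick:name@2 }}` placeholder syntax, the `BrickRegistry` class, and the `lookup_account_by_phone` function are invented for illustration and are not GenerativeAgent's actual syntax or API.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Brick:
    """A named, versioned prompt fragment (hypothetical model)."""
    name: str
    version: int
    text: str


class BrickRegistry:
    """Keeps every published version so tasks can use the latest or pin an older one."""

    def __init__(self) -> None:
        self._bricks: dict = {}

    def publish(self, name: str, text: str) -> Brick:
        versions = self._bricks.setdefault(name, {})
        brick = Brick(name, max(versions, default=0) + 1, text)
        versions[brick.version] = brick
        return brick

    def get(self, name: str, version: Optional[int] = None) -> Brick:
        versions = self._bricks[name]
        return versions[max(versions) if version is None else version]


# Invented placeholder syntax: {{ brick:name }} or {{ brick:name@2 }}.
PLACEHOLDER = re.compile(r"\{\{\s*brick:(\w+)(?:@(\d+))?\s*\}\}")


def expand(instructions: str, registry: BrickRegistry) -> str:
    """Replace each placeholder with the referenced brick's text."""
    def substitute(match: re.Match) -> str:
        version = int(match.group(2)) if match.group(2) else None
        return registry.get(match.group(1), version).text
    return PLACEHOLDER.sub(substitute, instructions)


registry = BrickRegistry()
registry.publish("account_lookup", "Ask for the account number, then call lookup_account.")
# The process changes: publish version 2 once instead of editing every task.
registry.publish("account_lookup", "Use the caller's phone number to call lookup_account_by_phone.")

task = "{{ brick:account_lookup }}\nThen read out the claim status."
print(expand(task, registry))
```

In the account lookup scenario described earlier, publishing a new version of one brick updates every task that references it, while a task pinned to `@1` keeps the old behavior until someone deliberately moves it forward.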

Using bricks, we could break the workflows down to create the following sets of reusable logic:

- A customer greeting brick: This would encompass the common logic needed to greet the customer, ask them about the reason they’re calling, and clarify as needed to get to a specific request.

- An account lookup brick: This would use the customer’s account number to look up their account. If the account number happened to be available in the input context (for example, if it was captured by the IVR), that would be used. If not, the brick’s logic could request the account number from the customer before it makes a function call to look up the account. It would also have logic to deal with situations where the account lookup failed (for example, verifying the account number or eventually escalating to a human agent if it was not able to perform the lookup successfully due to an API failure).
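The control flow of the account lookup brick can be sketched in code. In GenerativeAgent this logic is written as task instructions, not Python; the sketch below only illustrates the branching described above, assuming two hypothetical callables: `ask_customer` (one conversational turn) and `lookup_account` (the lookup function call, returning `None` when no account matches and raising `ConnectionError` when the API fails). The retry limit is also an assumption.

```python
from typing import Callable, Optional

MAX_ATTEMPTS = 2  # assumption: ask at most twice before escalating


def account_lookup_brick(
    context: dict,
    ask_customer: Callable[[str], str],
    lookup_account: Callable[[str], Optional[dict]],
) -> dict:
    """Resolve the customer's account, or report why the conversation should escalate."""
    account_number = context.get("account_number")  # e.g. already captured by the IVR
    prompt = "Could you tell me your account number?"
    for _ in range(MAX_ATTEMPTS):
        if not account_number:
            account_number = ask_customer(prompt)
        try:
            account = lookup_account(account_number)
        except ConnectionError:
            # The lookup API itself failed; asking again will not help, so hand off.
            return {"status": "escalate", "reason": "lookup_api_failure"}
        if account is not None:
            return {"status": "found", "account": account}
        # No match: discard the number and ask the customer to verify it.
        account_number = None
        prompt = "I couldn't find that account. Could you double-check the number?"
    return {"status": "escalate", "reason": "account_not_verified"}


accounts = {"12345": {"holder": "Sam"}}
answers = iter(["12354", "12345"])  # the first answer contains a typo
print(account_lookup_brick({}, lambda prompt: next(answers), accounts.get))
# {'status': 'found', 'account': {'holder': 'Sam'}}
```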

Similarly, we would create five additional bricks:

- Look up the latest claim record using information from an account.

- Read out claim status using information in the claim record.

- Add documentation to a claim record by asking the customer to upload a document, verifying that the upload succeeded, and then associating it with the claim record being discussed.

- Check if the customer has any additional questions and use the knowledge base to answer them.

- Close out a conversation with the customer.

These bits of logic could then be assembled within specific task instructions. GenerativeAgent allows bricks to be dropped in within such instructions so that bricks can be chained and connected in appropriate ways to fulfill the goals of the task.

One way to think about bricks in GenerativeAgent is as a library of common routines. For this set of four use cases, we would use these seven separate bricks. The task instructions would contain just the instructions unique to each use case, along with some glue logic to combine bricks in appropriate ways, resulting in over 75% of instructions being reused.
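The following sketch shows how tasks might be assembled from shared bricks plus task-specific glue, and one way to measure how much instruction text is reused. The post does not say how the 75% figure was computed; counting characters is an assumption here, and the brick texts and glue sentences are shortened, invented stand-ins. Only two of the four tasks are shown, so the printed share is not meant to reproduce the 75% figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ref:
    """Points at a shared brick; plain strings in a task are task-specific glue."""
    brick: str


# Shortened, illustrative texts for the seven bricks described above.
BRICKS = {
    "greeting": "Greet the customer and clarify the reason for the call.",
    "account_lookup": "Look up the customer's account; escalate if the lookup fails.",
    "latest_claim": "Find the latest claim record on the account.",
    "claim_status": "Read out the claim status from the claim record.",
    "add_documentation": "Ask for an upload, confirm it succeeded, and attach it to the claim.",
    "more_questions": "Ask about other questions and answer from the knowledge base.",
    "close": "Close out the conversation.",
}

# Two of the four tasks; the glue sentences are invented for illustration.
TASKS = {
    "claim_status_lookup": [
        Ref("greeting"), Ref("account_lookup"), Ref("latest_claim"), Ref("claim_status"),
        "If the claim is pending, give the expected decision date.",
        Ref("more_questions"), Ref("close"),
    ],
    "add_claim_documentation": [
        Ref("greeting"), Ref("account_lookup"), Ref("latest_claim"),
        "If the claim is already closed, explain that documents can no longer be added.",
        Ref("add_documentation"), Ref("more_questions"), Ref("close"),
    ],
}


def text_of(part) -> str:
    return BRICKS[part.brick] if isinstance(part, Ref) else part


def assemble(parts: list) -> str:
    """Produce a task's full instruction text by inlining its bricks in order."""
    return "\n".join(text_of(p) for p in parts)


def reuse_share(tasks: dict) -> float:
    """Fraction of all assembled instruction characters that come from shared bricks."""
    parts = [p for task in tasks.values() for p in task]
    shared = sum(len(text_of(p)) for p in parts if isinstance(p, Ref))
    return shared / sum(len(text_of(p)) for p in parts)


print(assemble(TASKS["add_claim_documentation"]))
print(f"{reuse_share(TASKS):.0%} of instruction text comes from bricks")
```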

The strategic impact of building with bricks

From an engineer’s perspective, building with bricks is nothing short of…beautiful! And from a practical perspective, it significantly reduces the cost of building and maintaining multiple use cases.

For enterprises that use GenerativeAgent, the benefits of bricks are extensive. Shorter build time means faster time-to-value. And lower maintenance costs mean you get more consistent returns on your investment over time. As conditions change, you’ll inevitably need to update task instructions. Building with bricks ensures less configuration drift and a more consistent brand voice as you make necessary updates. It also improves governance and compliance.

But the biggest benefit is the ability to scale your AI agent and build value for your business—one brick at a time.

To learn more about the Brick functionality, visit ASAPP's Brick documentation page.

The author would like to thank Hannah Yu, Hernán Amarillo, and Guillermo Coro for contributing to this blog post.

Reclaiming the strategic value of voice in the agentic enterprise

The brands we trust most are the ones that know us best. They earn our loyalty and business.

Decades ago, when many businesses were smaller—and local—it was easy to know your customers. But as businesses scaled and globalized, personal customer relationships were replaced by standardized processes and layers of technology.

Today, customers encounter a maze of customer service options that feel noisy, disjointed, and hard to navigate. Meanwhile, enterprises grapple with fractured workflows, siloed data, and serious blind spots in the customer lifecycle.

Why voice still matters

In recent years, industry leaders pushed hard for digital transformation. Many organizations assumed customers would migrate to chat, messaging, and social channels for service. Investments followed. Expectations rose.

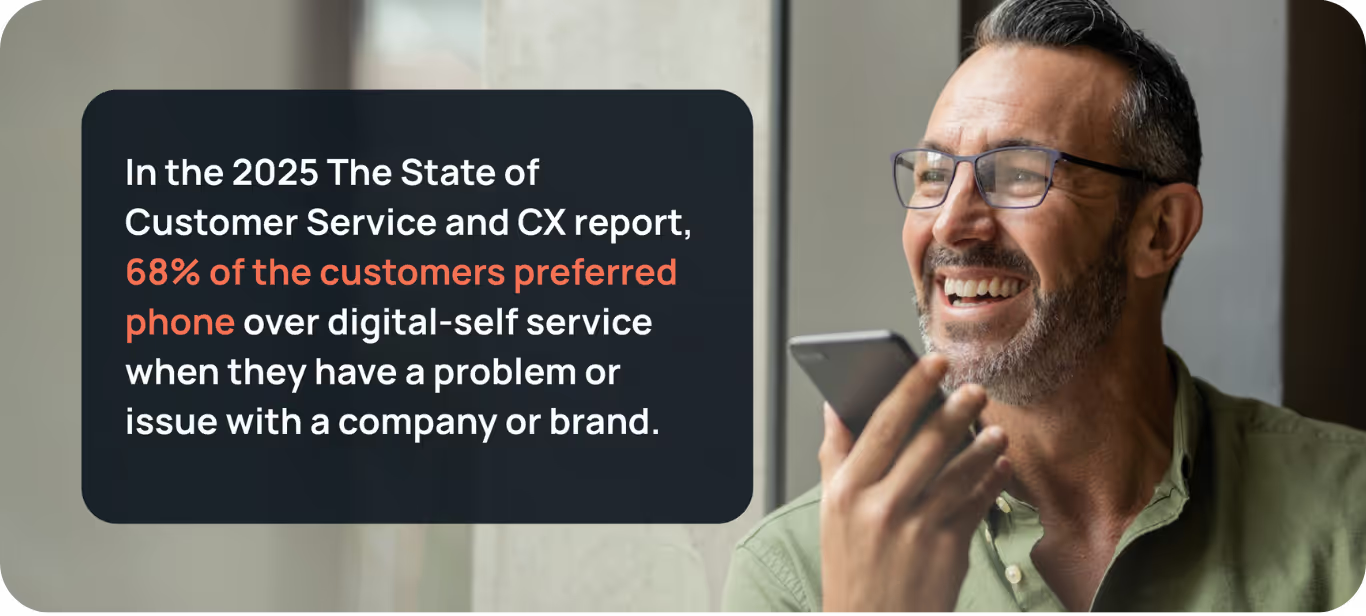

But the results were disappointing. Voice remained the top channel in most contact centers, even among younger consumers. In fact, research still consistently shows that 7 in 10 consumers reach for the phone when they need support or service.

It’s not hard to understand why. Negotiating a complex issue is often easier with an actual conversation than a series of chat messages. And for customers, a phone call feels more personal. It lets them talk freely and express themselves fully, with tone and sentiment that would be lost in a chat, even with a human agent.

> Watch the webinar, "Voice isn’t dead—it just needed better AI," with CX Expert and NYT Bestselling Author Shep Hyken.

The challenge voice presents for enterprises

For contact centers, voice is the hardest and most expensive channel to support. Chatbots and other self-service options deflect a non-trivial percentage of digital interactions away from human agents. But automating voice has been a much bigger challenge.

Without effective automation, voice requires a large staff of agents. And with turnover still staggeringly high, recruiting and onboarding are both endless and costly. The revolving door for agents leaves enterprise contact centers and the BPOs they often rely on with too many new agents in the mix. And new agents are far less effective than experienced veterans.

“Many times in our history, we have taken a binary approach to enhancing the customer experience. Voice or digital, automation or agent, efficiency or satisfaction. We’ve often treated these as mutually exclusive choices, when in reality they shouldn’t have to be.”

How generative AI changes the value equation for voice

Generative AI can completely bend the value curve for voice with autonomous AI agents. Current top-tier AI agents for customer service fully resolve a wide range of issues through familiar conversational experiences. That reduces costs while simultaneously improving customer satisfaction with less friction and fragmentation.

The cost savings from reducing the load for human agents are substantial. But there’s more going on here. AI agents allow customers to continue picking up the phone when they need help. Because AI agents adapt to the conversation and other contextual information, they let customers speak naturally. The conversation can flow in a way that’s intelligent, empathetic, and emotionally aware.

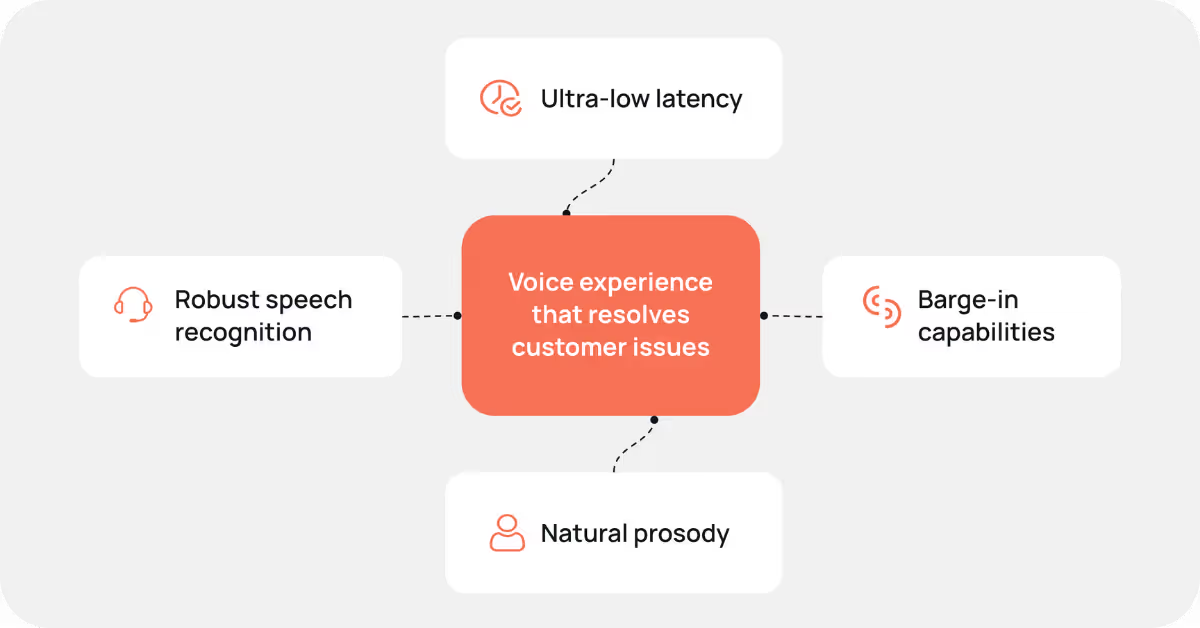

Relentless innovation in voice performance and quality makes this possible. Robotic, laggy, or error-prone AI agents erode trust fast. So, the voice experience must be clear, responsive, and natural. Practical elements that enable this include:

- Ultra-low latency: No awkward pauses, so the conversation feels natural.

- Barge-in capabilities: Customers can interrupt and clarify just like they would with a human, without confusing the system.

- Natural prosody: Speech output is finely tuned for tone, emphasis, and rhythm, ensuring the AI’s voice sounds engaging and on-brand.

- Robust speech recognition: Handles diverse accents, speaking styles, and noisy environments, reducing the need for customers to repeat themselves.
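Barge-in is the least obvious of these capabilities, so here is a toy sketch of the state transition behind it: if caller speech is detected while the agent's audio is playing, playback stops and the caller's words are handled. Production systems operate on streaming audio with voice activity detection and streaming speech recognition; this sketch models only the turn-taking logic, and the class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class VoiceTurnManager:
    """Toy barge-in logic: caller speech during playback stops the agent's audio."""
    speaking: bool = False
    log: list = field(default_factory=list)

    def start_playback(self, utterance: str) -> None:
        self.speaking = True
        self.log.append(f"agent: {utterance}")

    def on_caller_speech(self, transcript: str) -> None:
        if self.speaking:
            # Barge-in: stop text-to-speech immediately instead of talking over the caller.
            self.speaking = False
            self.log.append("agent audio stopped")
        self.log.append(f"caller: {transcript}")

    def on_playback_finished(self) -> None:
        self.speaking = False


manager = VoiceTurnManager()
manager.start_playback("Your claim was received last Tuesday and is under review.")
manager.on_caller_speech("Sorry, which claim?")
print("\n".join(manager.log))
```

The design choice worth noting is that the interruption is handled as an event, not as a timeout: the system never waits for its own sentence to finish before listening.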

With these capabilities in place, customers encounter a familiar and satisfying voice experience that resolves their issues efficiently. That builds trust, loyalty, and customer lifetime value—all while cutting the cost to serve.

The rise of the agentic enterprise

The introduction of autonomous AI agents is a major leap forward for customer service, but it’s just a taste of what’s coming. In the near future, entire networks of AI agents will be deployed throughout the enterprise like a nervous system. These AI agents will always be on, listening, and acting to serve customers, initiate internal workflows, and gather data to drive value for the business.

In the agentic enterprise, AI will be more than a tool. It will be the connective tissue that ties functional areas to one another and customers to your brand. This shift is no mere efficiency play. It’s a new system of intelligence that will learn from every engagement with a customer or agent, then adapt to provide even better service in the future.

Recentering voice in the agentic enterprise

As generative AI changes the economic realities of voice interactions, the role of voice in CX strategy will change.

Customer service leaders will no longer begrudgingly accept that voice is their most important channel.

Instead, they’ll embrace the unique qualities it offers for personal connection and customer satisfaction.

Digital channels will continue to grow and evolve. So will voice. In the agentic enterprise, the full range of customer interactions will become a consistent and cohesive data stream that drives the business. Customer conversations are gold mines of information, but that information is often hard to capture as structured data. Voice is especially data-rich. Every utterance is filled with information: not just what the customer said, but how they said it. And customers say more in a call than in a chat. Gathering and making sense of it all is impossible with legacy technology. But the network of AI agents that powers the agentic enterprise will learn from every interaction and create a persistent intelligence layer from that data.

Personal service at scale, finally

Historically, personal service hasn’t been possible at scale. CX tech providers have chipped away at the issue with integrations to pull a customer’s account information, AI-powered routing to connect the customer with the right agent more quickly, and predictive analytics to help agents make targeted recommendations that customers are likely to accept. It’s a valiant effort to personalize a generic experience. But it still doesn’t translate into genuinely personal service.

The network of AI agents in the agentic enterprise will change that. The AI will gather and analyze data from every customer interaction at an unprecedented scale, not just for business intelligence, but to craft more personal experiences.

If a customer states a preference during a call, the AI agent will ask if it should store that information for future reference—and then it will. Later, when the customer interacts with your brand again, the AI will use that stated preference as context to improve service.

Or it might take proactive action based on the cumulative contextual information it’s gathered. Imagine a customer calling to book a trip with an airline they use regularly, one that has an agentic customer experience platform with interaction memory. The AI agent that takes the call might know that the customer is likely calling to book their annual Thanksgiving trip to Chicago, and that they typically prefer a Wednesday afternoon flight. So, the AI agent begins the conversation by proactively verifying the customer's preferences for this trip, with the corresponding flight options ready to be presented.
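The two behaviors above, storing a preference only after the customer agrees and anticipating a recurring trip from past interactions, can be illustrated with a small sketch. The `CustomerMemory` class, its fields, and the sample trips are hypothetical. The trip inference here is a plain frequency count over (destination, weekday, time of day); a real system would also weigh timing, such as the time of year that makes a Thanksgiving trip likely.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CustomerMemory:
    """Hypothetical per-customer memory; preferences are stored only with consent."""
    preferences: dict = field(default_factory=dict)
    trips: list = field(default_factory=list)  # (destination, weekday, time of day)

    def remember(self, key: str, value: str, customer_agreed: bool) -> bool:
        """Store a stated preference only if the customer said yes when asked."""
        if customer_agreed:
            self.preferences[key] = value
        return customer_agreed

    def likely_trip(self, min_count: int = 2) -> Optional[tuple]:
        """Most frequent past trip pattern, if it recurs at least min_count times."""
        if not self.trips:
            return None
        trip, count = Counter(self.trips).most_common(1)[0]
        return trip if count >= min_count else None


# Illustrative history: three past Chicago trips and one Denver trip.
memory = CustomerMemory(
    trips=[("Chicago", "Wednesday", "afternoon")] * 3 + [("Denver", "Friday", "morning")]
)
memory.remember("seat", "aisle", customer_agreed=True)
print(memory.likely_trip(), memory.preferences)
```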

To the enterprise, that’s all data-driven and AI-powered. But to the customer, it’s personal service, the kind they thought they couldn’t get anymore. It makes them feel that your brand knows them well enough to earn their loyalty.

Personal service is more than a nicety for your customers. It’s good business. And in the agentic enterprise, it will be business as usual.

AutoSummary's 3R Framework Raises the Bar for Agent Call Notes

Taking notes after a customer call is essential for ensuring that key details are recorded and ready for the next agent, yet it can be difficult to prioritize when agents have other tasks competing for their time. Could automated systems help bridge this gap while still delivering high-quality information? How should the data from customer interactions be organized so that it is useful and easily accessed in the future?

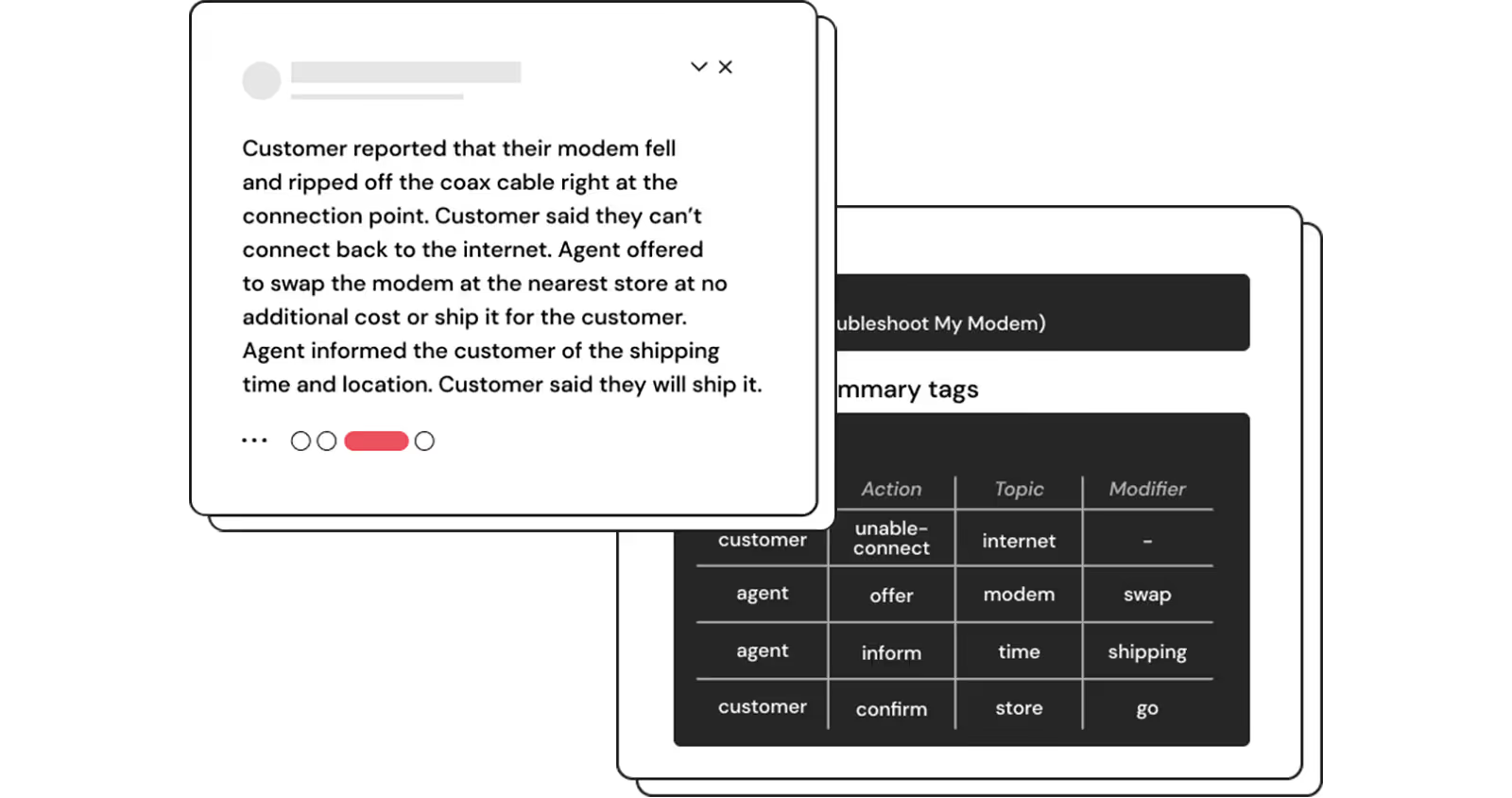

As we were developing AutoSummary, the ASAPP AI Service for automating call dispositioning, we asked our customers for input. ASAPP conducted customer surveys and discovered that agent notes needed to include Reason, Resolution, and Result for every conversation. This 3R Framework was key to success. Here’s a more detailed explanation:

- Reason – Agent notes need to focus on the reason for the customer interaction. This crucial bit of data, if accurately noted, immediately helps the next agent assisting the same customer with their issue. They’re able to dig into earlier details and resolve issues more quickly and efficiently while also impressing customers with their empathy and understanding of the situation.

- Resolution – It is essential to document the steps taken toward resolution in case an agent needs to continue where another left off. When an agent clearly understands the problem and its context, it becomes much easier to follow a series of steps or flowcharts to resolve it.

- Result – All interactions have a result that should be documented. This allows future customer service agents to see whether the problem was solved effectively, as well as any other important details.
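As a data structure, the 3R framework is simply a note with three required parts. The sketch below is an assumption about how such a note could be represented and checked for completeness; it is not AutoSummary's output format, and the call details in the example are invented.

```python
from dataclasses import dataclass


@dataclass
class CallNote:
    """A disposition note organized around the 3R framework."""
    reason: str
    resolution: str
    result: str

    def missing_fields(self) -> list:
        """Names of any 'R' left blank, so an incomplete note can be flagged."""
        return [name for name in ("reason", "resolution", "result")
                if not getattr(self, name).strip()]

    def render(self) -> str:
        return (f"Reason: {self.reason}\n"
                f"Resolution: {self.resolution}\n"
                f"Result: {self.result}")


note = CallNote(
    reason="Customer was charged twice for the March bill.",
    resolution="Confirmed the duplicate payment and issued a refund for one charge.",
    result="Customer accepted; refund expected within 5 business days.",
)
print(note.render())
```

Keeping the three parts as separate fields, instead of one free-text blob, is what lets a later agent jump straight to the Result or the Reason without rereading the whole note.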

ASAPP designed AutoSummary to automate dispositioning using the 3R framework as a foundation. And, depending on the needs of the customer, AutoSummary can also provide additional information, like an analytics-ready structured representation of the steps taken during a call. We created AutoSummary with two goals in mind:

- Maintain a high bar for what’s included: A summary is, essentially, a brief explanation of the main points discussed during an interaction. Although longer conversations naturally produce longer summaries, we cap the length so that agents can read the note and get caught up in 10-20 seconds. We also eliminate any data that could be superfluous or inaccurate. These strict standards ensure a high-quality output that is still concise.

- Engineer for model improvement: While AutoSummary creates excellent summaries, a fundamental component of all ASAPP’s AI services is the power to rapidly learn from continuous usage. We designed a feedback system and work with our customers so that any changes agents make to the generated notes are fed back into our models. Thus, we’re constantly learning from what the agents do – and over time, as the model improves, we receive fewer modifications.
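Both goals can be made measurable. The sketch below turns the 10-20 second reading target into a word budget and scores how much an agent changed a generated note, which is the kind of signal a feedback loop could track over time. The reading speed of 240 words per minute and the word-level `difflib` comparison are assumptions for illustration; the post does not say how AutoSummary sets its limit or measures modifications.

```python
import difflib

# Assumption: a typical silent reading speed of about 240 words per minute.
WORDS_PER_MINUTE = 240


def word_budget(seconds: float, wpm: int = WORDS_PER_MINUTE) -> int:
    """Maximum number of words an agent can read in the given time."""
    return int(seconds * wpm / 60)


def within_budget(note: str, seconds: float = 20) -> bool:
    return len(note.split()) <= word_budget(seconds)


def edit_rate(generated: str, agent_final: str) -> float:
    """Share of the note the agent changed: 0.0 = kept verbatim, 1.0 = fully rewritten."""
    matcher = difflib.SequenceMatcher(None, generated.split(), agent_final.split())
    return 1.0 - matcher.ratio()


generated = "Reason: double charge. Resolution: refund issued. Result: resolved."
edited = "Reason: double charge on March bill. Resolution: refund issued. Result: resolved."
print(word_budget(10), word_budget(20))  # 40 80
print(within_budget(generated))          # True
print(f"{edit_rate(generated, edited):.2f}")
```

If the feedback loop works as described, the average edit rate across calls should trend toward zero as the model learns from agents' corrections.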

We’re always learning what our customers want and translating that into effective product design. For us, it’s been great to see how successful these summaries are in terms of business metrics such as customer satisfaction, brand loyalty, and agent retention. We strongly believe that good disposition notes for all customer interactions improve every metric mentioned above–and more!

On average, our customers who use AutoSummary save over a minute of call handling time per interaction, which saves them millions of dollars a year. Who wouldn’t want those kinds of results?