Most enterprises still evaluate generative AI like it’s a toy, measuring novelty instead of reliability. The AI sounded good. It used the right tone. It didn’t hallucinate (much).

But that’s not the real measurement of your generative AI agent. That's a demo theater.

In Priya’s article, she made the case for discarding “human-like” as the benchmark and replacing it with outcome-focused performance. I agree—and I’ll go one step further.

This article lays out what you need to measure, why it matters, and how to do it right. Focusing just on the parts that protect your brand and your bottom line.

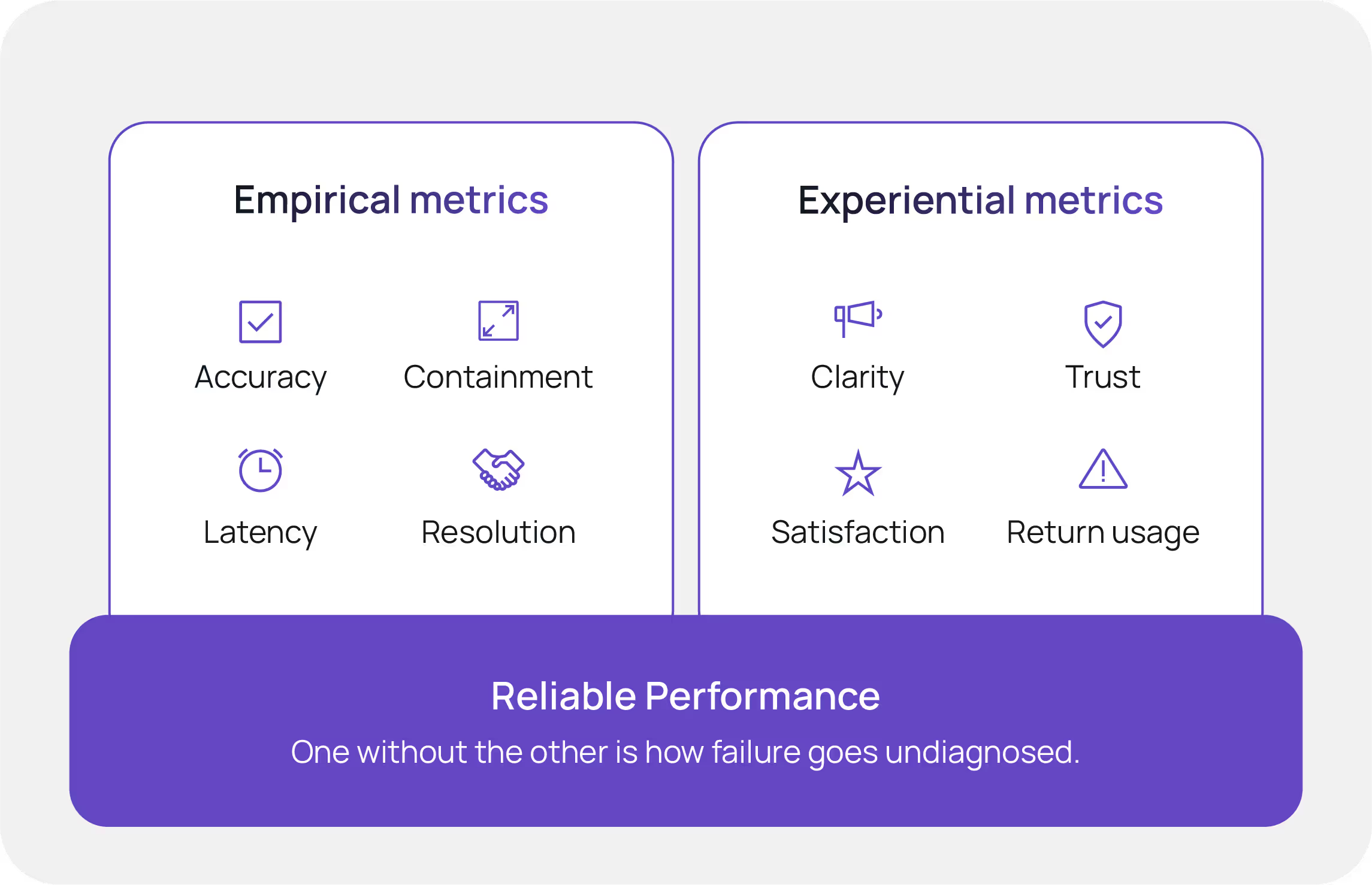

Two measurement categories that actually matter

Let’s not overcomplicate this. There are two buckets worth tracking:

- Empirical metrics: These tell you what the system is doing—accuracy, resolution rates, escalation, latency, and error rates. If these numbers don’t exist in your reporting layer, you’re flying blind.

- Experiential metrics: These tell you how it feels to the user—clarity, effort, trust, satisfaction, and return usage. They don’t replace hard data; they validate it.

To effectively measure your generative AI agent, you need both types of metrics. Measuring only one is how failed pilots go undiagnosed until you hit scale and start losing customers.

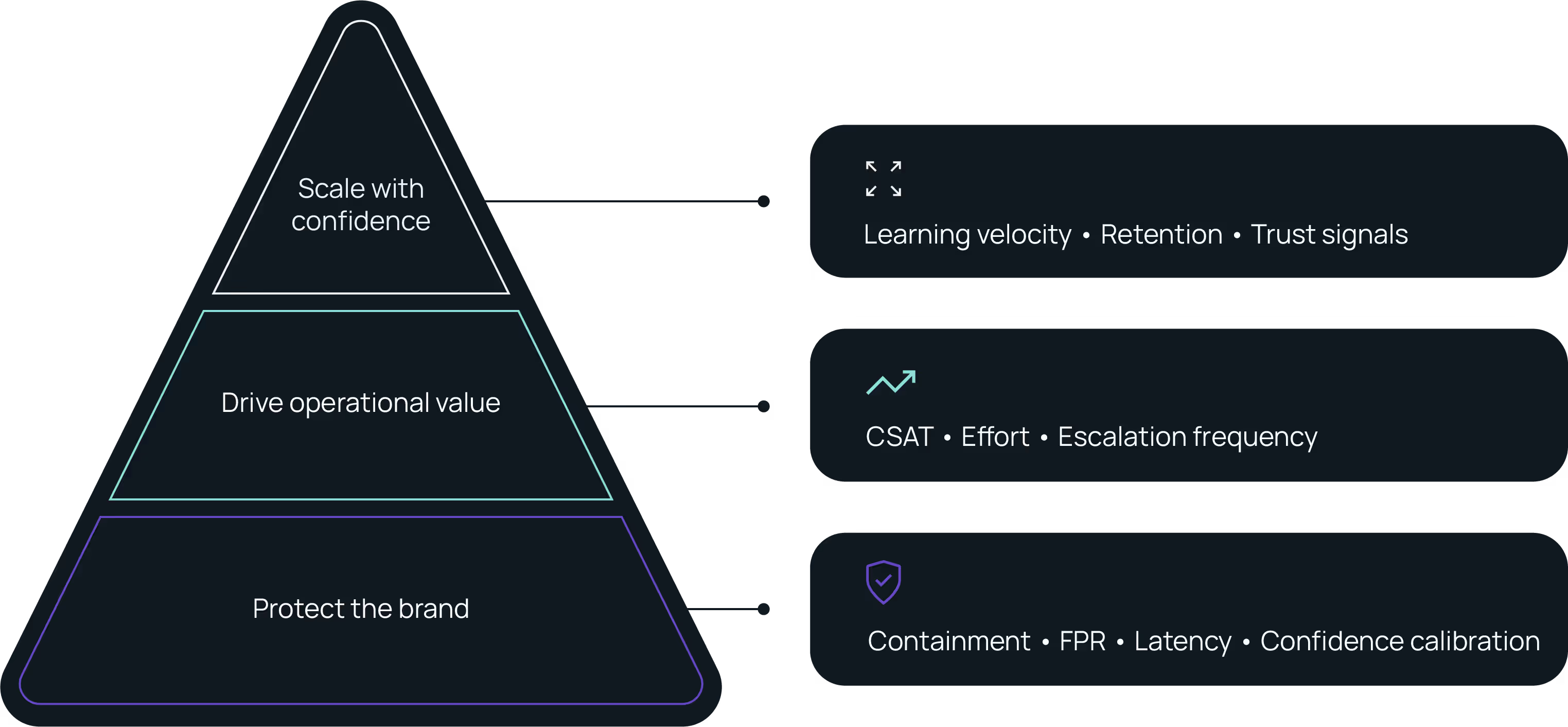

Key metrics (and what they actually protect)

If you’re only measuring sentiment or “positive interactions,” you’re not measuring anything meaningful. Metrics should exist to detect risk, quantify impact, and drive action. Below are the metrics for measuring the performance of your generative AI agent. They are signals that tell you if the agent is delivering business value or quietly failing at scale.

Empirical metrics

First Contact Resolution

- Why it matters: It’s the most honest proxy for whether your generative AI agent works.

- Track: % of sessions fully resolved by AI without handoff or reopen.

- Target: Exceed human baseline within 90 days.

Error Rate

- Why it matters: Uncaught errors compound quietly and publicly.

- Track: Incorrect intents, misrouted flows, wrong data returned.

- Fix: Tighten prompts, adjust training data, and raise confidence thresholds.

Containment

- Why it matters: Abandonment and escalation are signals of failure.

- Track: % of users staying in the channel. Break down by intent and flow.

- Guard against: Over-containment at the cost of CX.

Escalation Frequency

- Why it matters: Escalation is expensive. Frequent escalation = low trust or poor design.

- Track: Trigger reasons—low confidence, policy boundaries, repeat queries.

Latency

- Why it matters: Delay is the enemy of confidence, especially in voice interactions.

- Track: Time to first response and time to resolution.

- Expectations: <1.5s for simple tasks.

Confidence Calibration

- Why it matters: A model that doesn’t know when it’s wrong is dangerous.

- Track: Alignment between model confidence and actual outcome accuracy.

- Use: To govern automation vs. escalation logic.

Learning Velocity

- Why it matters: The cost of AI failure is in how long it stays broken.

- Track: Time from gap detection → fix → deployment.

- Target: Days, not weeks or months.

Experiential metrics

CSAT / NPS

- Track: AI-handled vs. human-handled outcomes. Break down by workflow.

- Avoid: Using this in isolation. Always pair with resolution + error rates.

Effort

- Track: Survey or behavioral proxies (rephrasing, repeated queries).

- Use: To identify friction points, not as a vanity score.

Trust Signals

- Track: Drop-offs after vague messages (“Checking now…”).

- Fix: Clearer next-step prompts and timeout handling.

Sentiment Drift

- Track: Sentiment trends across interactions/conversations. Watch for frustration triggers.

- Act: Adjust flows where tone or repetition causes friction.

Retention & Adoption

- Track: Opt-in vs. opt-out rates. Usage trends that indicate customers are willing to engage with the AI agent again. Interpretation: Low repeat = a trust gap. Don’t ignore it.

Governance - you don’t scale what you don’t control

Metrics aren’t a dashboard exercise. They should be seen as operational insurance. Here’s what enterprise governance actually looks like in practice:

Baselines and Targets

- Establish human-agent benchmarks before go-live.

- Set 30/60/90-day performance targets by metric.

- Don’t launch new intents without clear success criteria.

Data Collection & Instrumentation

- Log every decision point: intents, actions, engagement, latency.

- Map user paths. Track where they drop, re-enter, or escalate.

- Ensure privacy compliance. No excuses.

Analytics Infrastructure

- Real-time dashboards. Alerting tied to thresholds.

- Weekly ops reports. Monthly trend reviews for execs.

- Tie reports to value creation, not marketing wins.

Feedback Loops

- Cross-functional reviews: product, compliance, CX, ops.

- Every high-error workflow gets an owner and a fix timeline.

- Log every model or flow change with before/after metrics.

Risk Monitoring

- Maintain an incident log. Track failure types and recurrence.

- Build automated test suites. Run edge-case regression tests pre-release.

- Use confidence metrics to throttle automation intelligently.

Versioning

- Track model versions, knowledge and behavior versioning, prompt changes, config updates.

- Have rollback plans. If something breaks, reverting should take minutes, not days.

Reporting

- Executive summaries should surface impact, not volume.

- Include performance, risks, and a point of view.

- No number without a decision attached.

Everything listed above is table-stakes to your AI strategy, and everything not measured should be seen as optional. These metrics are how you catch failure early, prove success under scrutiny, and course-correct before customers or compliance teams notice.

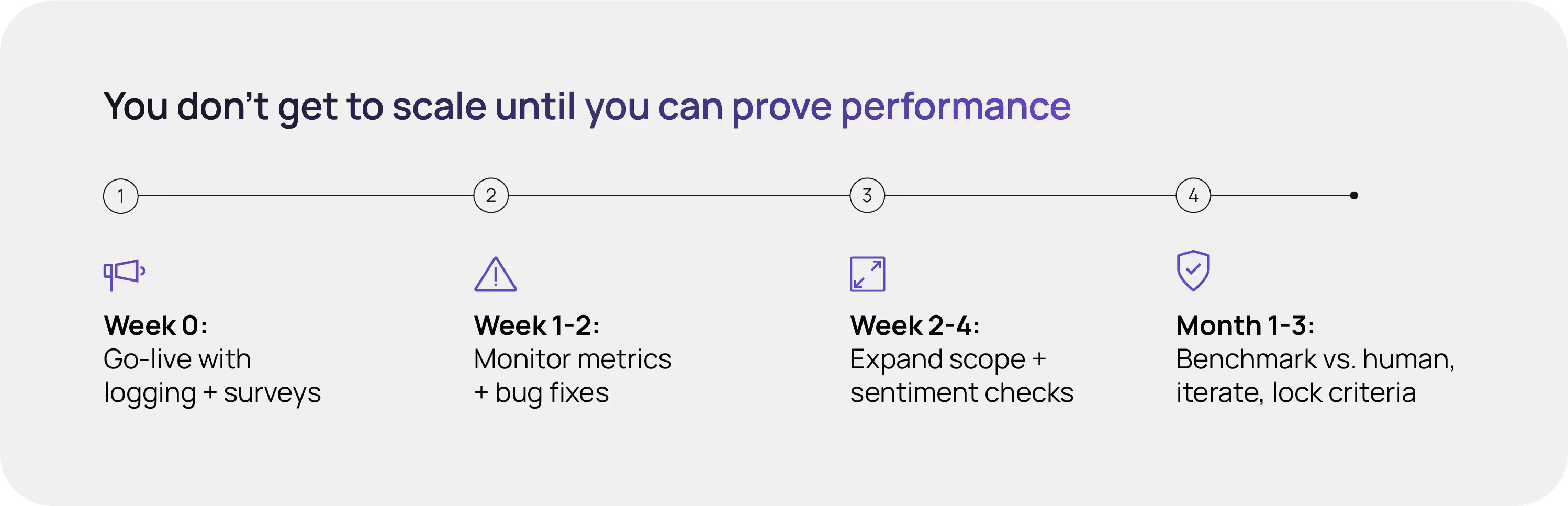

Day 0 to Day 90—a deployment measurement roadmap

Generative AI agent rollouts fail most often because teams launch without a measurement plan. They ship, hope, and retroactively scramble to explain what happened. That doesn’t work in production environments. The roadmap below is what a responsible deployment looks like. It’s measured, accountable, and built to catch issues before they scale.

Week 0

- Go live with initial scope and intents. Logging on. Surveys embedded.

- Validate baselines against expectations.

Week 1–2

- Monitor for latency, error spikes, and common escalations.

- Fix easy bugs. Prioritize high-friction intents.

Week 2–4

- Add intents. Tighten thresholds. Improve containment logic.

- Begin sentiment + CSAT analysis.

Month 1–3

- Compare against human baselines. Resolve or escalate any workflows below target.

- Iterate on underperforming areas with measurable updates.

- Lock success criteria before expanding scope.

If you can’t answer what changed between Day 1 and Day 90 with metrics, you’re not running a system, you’re running a guess. This timeline isn’t about speed but about control. You don’t get to expand scope until you can prove performance.

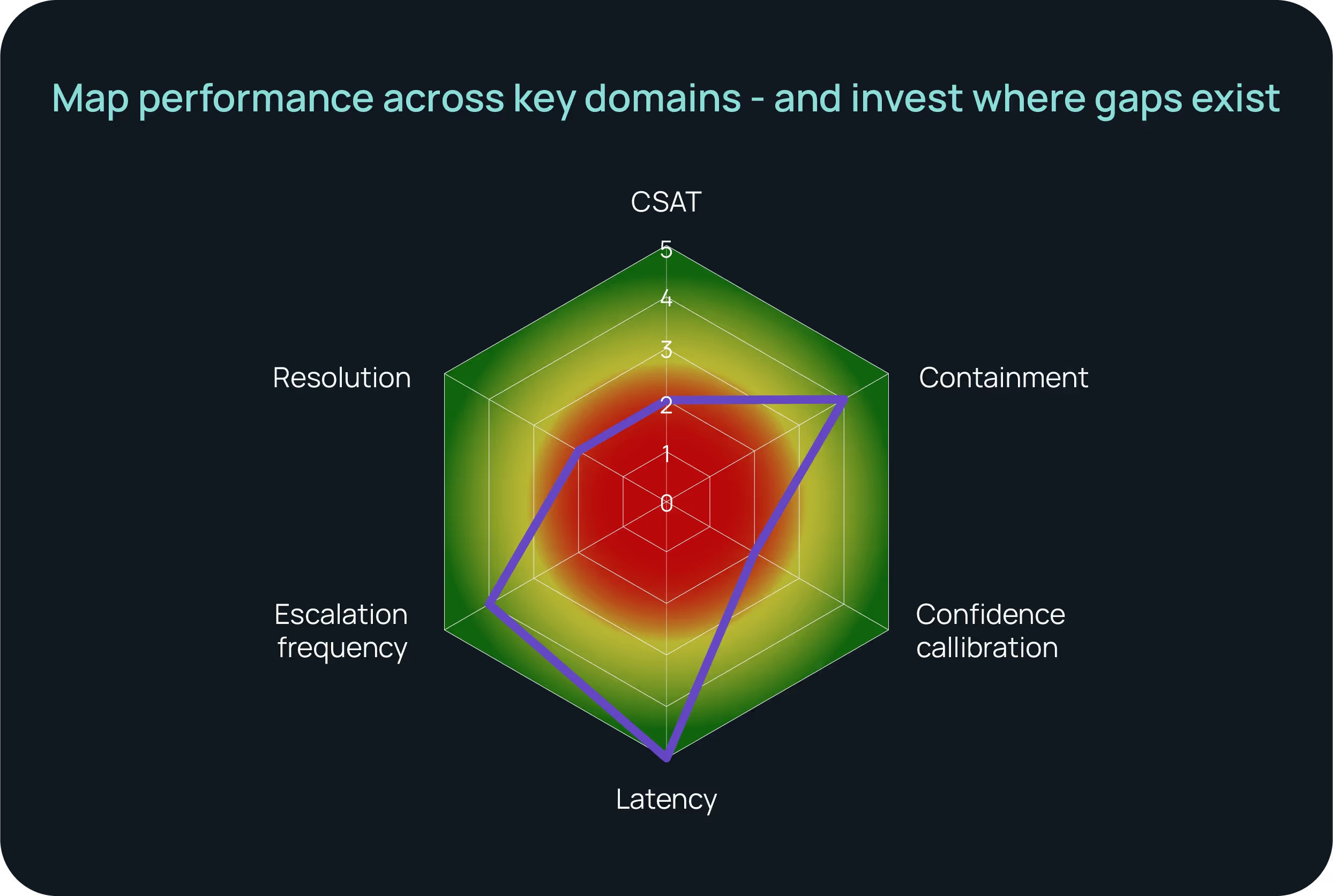

Making the numbers actionable

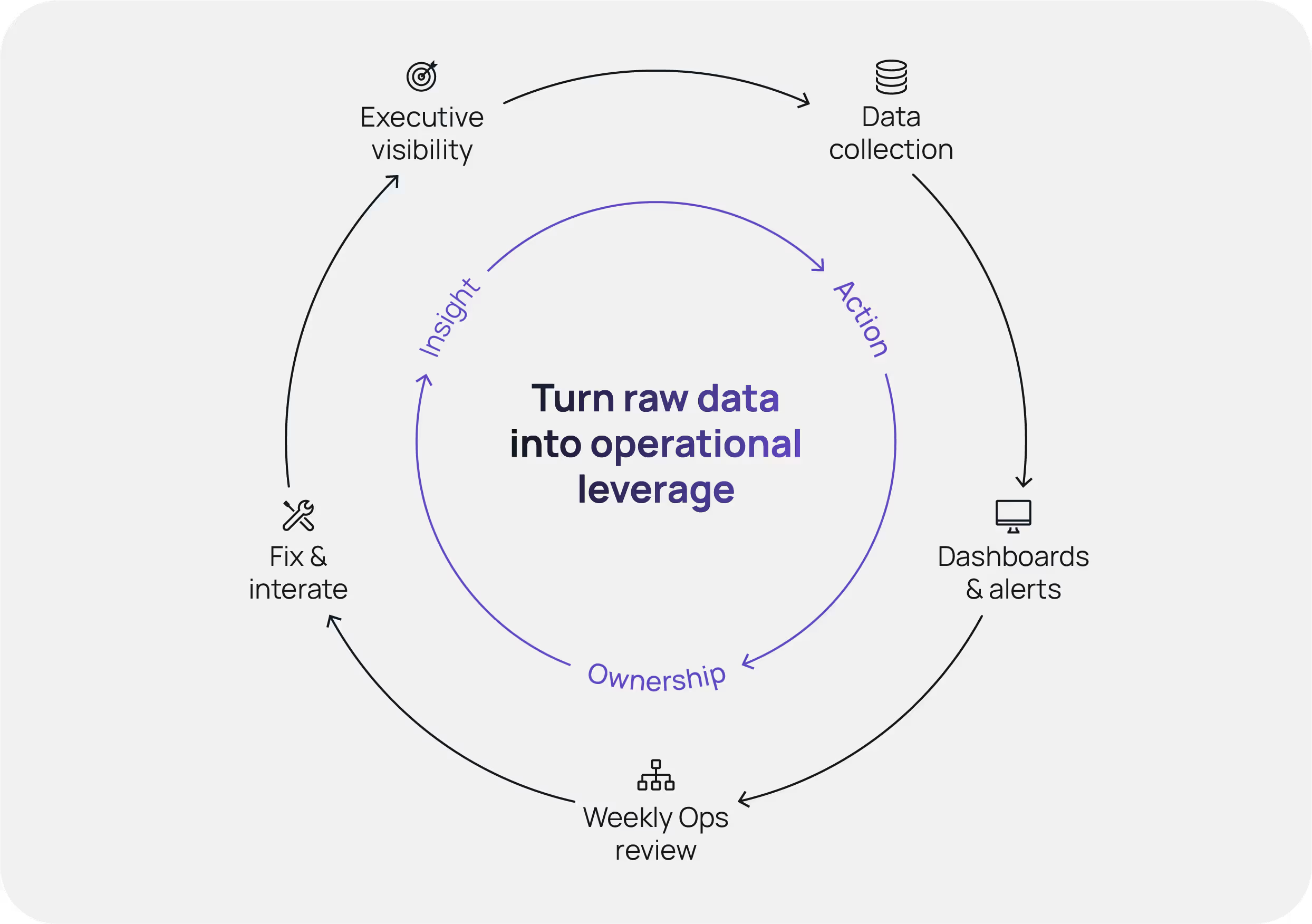

Metrics that sit on a dashboard don’t change outcomes, so unless the numbers lead to decisions, priorities, or escalations, they’re just background noise. This section details how to turn raw data into operational leverage so you can fix what’s broken, scale what works, and hold teams accountable.

Dashboards

- Resolution, containment, CSAT, latency, escalation.

- Drillable by intent, channel, region.

Heatmaps

- Identify problem workflows. Prioritize by volume and cost.

Sentiment Trends

- Visualize friction. Pair with NLU accuracy and rephrasing rates.

Progress Radar

- Map six performance pillars against target thresholds.

- Use this to align product and ops on where to invest.

If no one owns the metric, no one owns the problem. Build infrastructure that connects insight to action, including weekly reviews, threshold alerts, and executive visibility. Measurement isn’t the hard part. Acting on it is. And this is where most teams fail.

A final note for leaders

If your team can’t show how a generative AI agent performs, how it fails, and how it recovers (measured in real numbers, not impressions), you’re not in control of your generative AI agent but potentially exposed.

This isn’t about whether the generative AI agent sounds natural. It’s about whether it delivers, under pressure, with traceable decisions and minimal risk. That’s what earns trust from customers and from the business.

So ask the only question that really matters. When something goes wrong, how fast do we know it, and what happens next? If there’s no clear answer, you have work to do.