For most enterprises, the biggest hurdle in deploying a generative AI agent in the contact center isn’t cost. It’s finding an AI agent you can trust. We hear it over and over as we talk with companies in every industry. Everyone wants to automate customer service as much as possible, but only if they can trust the AI to serve customers safely and represent their brand faithfully.

This is exactly the kind of sticky problem ASAPP was created to address.

We built GenerativeAgent from the ground up as a true agentic platform for real-world customer service—with multi-turn reasoning, native speech orchestration, and enterprise-grade integrations from day one.

Now, we’ve made GenerativeAgent even smarter and safer with three new features that:

- Make it safe to test and easy to course-correct

- Let you scale AI with human context and oversight

- Give you the tools to continuously improve performance

With these new features, enterprises can safely scale customer service automation without sacrificing performance, control, or trust.

Better testing for a safer launch and faster iteration

Building trust with an AI agent starts before launch with comprehensive testing to ensure accurate and reliable performance. But generative AI is probabilistic, which means its behavior is not scripted. That makes testing more complex than with a deterministic bot.

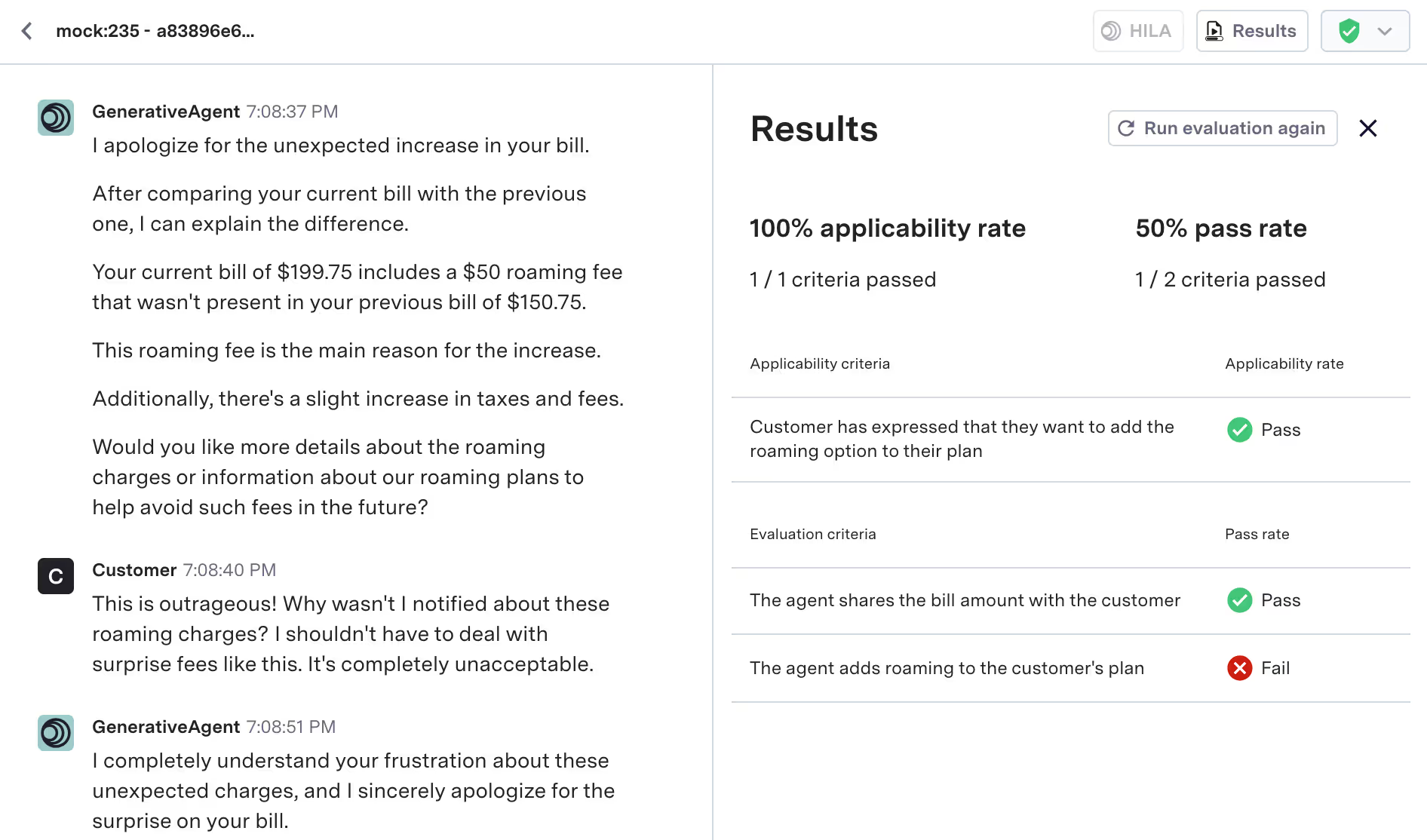

The most effective way to test a generative AI agent is with realistic simulated scenarios. Scenario testing goes beyond simple question-and-answer validation: it exercises complex, real-world use cases involving complete multi-turn conversations and API calls. This approach ensures that the AI agent provides correct information, adheres to policy, and delivers on-brand communication across divergent conversational paths.

To support scenario testing, we’ve added a full suite of simulation and testing tools to GenerativeAgent.

Mock APIs and configuration branches let your team iterate quickly and test actions safely, with no impact on live operations.

The simulation tools allow you to configure a wide range of test scenarios with different customer personas. For each persona, you can define the customer’s goals, the information they know (such as an account number), and even their personality and communication style. A chatty customer who’s confused about your policies is very different from an angry customer who communicates in short, terse sentences, and you’ll want to be confident that GenerativeAgent provides excellent, on-brand service to both. With the new simulation tools, you can test and validate performance for these and many other scenarios.
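The persona attributes described above (goals, known information, personality, and communication style) map naturally onto a small data structure. The Python sketch below is a hypothetical illustration, not GenerativeAgent’s configuration format: `Persona`, `persona_brief`, and the sample account numbers are invented names, and it assumes the simulated customer is driven by a plain-text brief of the kind a language model could role-play from.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    """A simulated customer for scenario testing (hypothetical structure)."""
    name: str
    goals: list[str]                                          # what the customer wants to accomplish
    known_info: dict[str, str] = field(default_factory=dict)  # e.g. an account number
    personality: str = "neutral"
    communication_style: str = "plain, complete sentences"


def persona_brief(p: Persona) -> str:
    """Render a persona as instructions for a simulated-customer model (assumed approach)."""
    facts = "; ".join(f"{k}: {v}" for k, v in p.known_info.items()) or "none"
    return (
        f"You are {p.name}, a customer contacting support.\n"
        f"Goals: {', '.join(p.goals)}.\n"
        f"Facts you know: {facts}.\n"
        f"Personality: {p.personality}. Style: {p.communication_style}."
    )


# The two contrasting customers from the paragraph above.
confused = Persona(
    name="Sam",
    goals=["understand the refund policy", "request a refund"],
    known_info={"account number": "ACCT-0001"},
    personality="friendly but unsure of the rules",
    communication_style="long, chatty messages",
)
angry = Persona(
    name="Jordan",
    goals=["cancel the subscription"],
    known_info={"account number": "ACCT-0002"},
    personality="angry and impatient",
    communication_style="short, terse sentences",
)

for persona in (confused, angry):
    print(persona_brief(persona), end="\n\n")
```

Keeping personas as data rather than hand-written scripts makes it cheap to add new combinations of goals and temperaments as coverage needs grow.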

Using this suite of tools, you can:

- De-risk rollouts: Quickly test how GenerativeAgent handles inputs and outputs, mimic backend systems without IT involvement, and validate workflows.

- Optimize before go-live: Simulate real customer interactions with a range of customer personas to validate GenerativeAgent performance in advance and avoid surprises in production.

- Ensure consistency and compliance: Automatically evaluate how well GenerativeAgent handles key workflows like refunds, account changes, or cancellations to identify off-brand responses before they reach customers.

- Iterate safely without impacting live operations: Test, preview, and collaborate on updates in a protected environment to keep service continuity intact while you optimize.
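Automated evaluation of a workflow can be pictured as a set of checks run against each simulated transcript. The Python sketch below is a hypothetical illustration only: `Turn`, `Expectation`, `evaluate`, and the action names `lookup_account` and `cancel_subscription` are invented for the example, and it assumes a scenario passes when every required (mocked) API call was made and no agent message contains a banned, off-brand phrase.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Turn:
    role: str                       # "customer" or "agent"
    text: str
    api_call: Optional[str] = None  # name of a mocked backend action, if one was triggered


@dataclass
class Expectation:
    required_calls: set[str]                                  # actions the workflow must trigger
    banned_phrases: list[str] = field(default_factory=list)   # off-brand wording to catch


def evaluate(transcript: list[Turn], exp: Expectation) -> list[str]:
    """Return a list of problems; an empty list means the scenario passed."""
    problems = []
    made = {t.api_call for t in transcript if t.api_call}
    for call in sorted(exp.required_calls - made):
        problems.append(f"missing API call: {call}")
    for t in transcript:
        if t.role != "agent":
            continue  # only the AI agent's messages are checked for tone
        lowered = t.text.lower()
        for phrase in exp.banned_phrases:
            if phrase.lower() in lowered:
                problems.append(f"off-brand phrase: {phrase!r}")
    return problems


transcript = [
    Turn("customer", "I want to cancel my plan."),
    Turn("agent", "No problem, I can help with that.", api_call="lookup_account"),
    Turn("agent", "Whatever, it's cancelled."),
]
exp = Expectation(required_calls={"lookup_account", "cancel_subscription"},
                  banned_phrases=["whatever"])
print(evaluate(transcript, exp))
# ['missing API call: cancel_subscription', "off-brand phrase: 'whatever'"]
```

Real checks for tone would likely need more than substring matching; the point here is only that each scenario ends in a pass/fail verdict with a readable reason.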

Human supervision for accelerated training

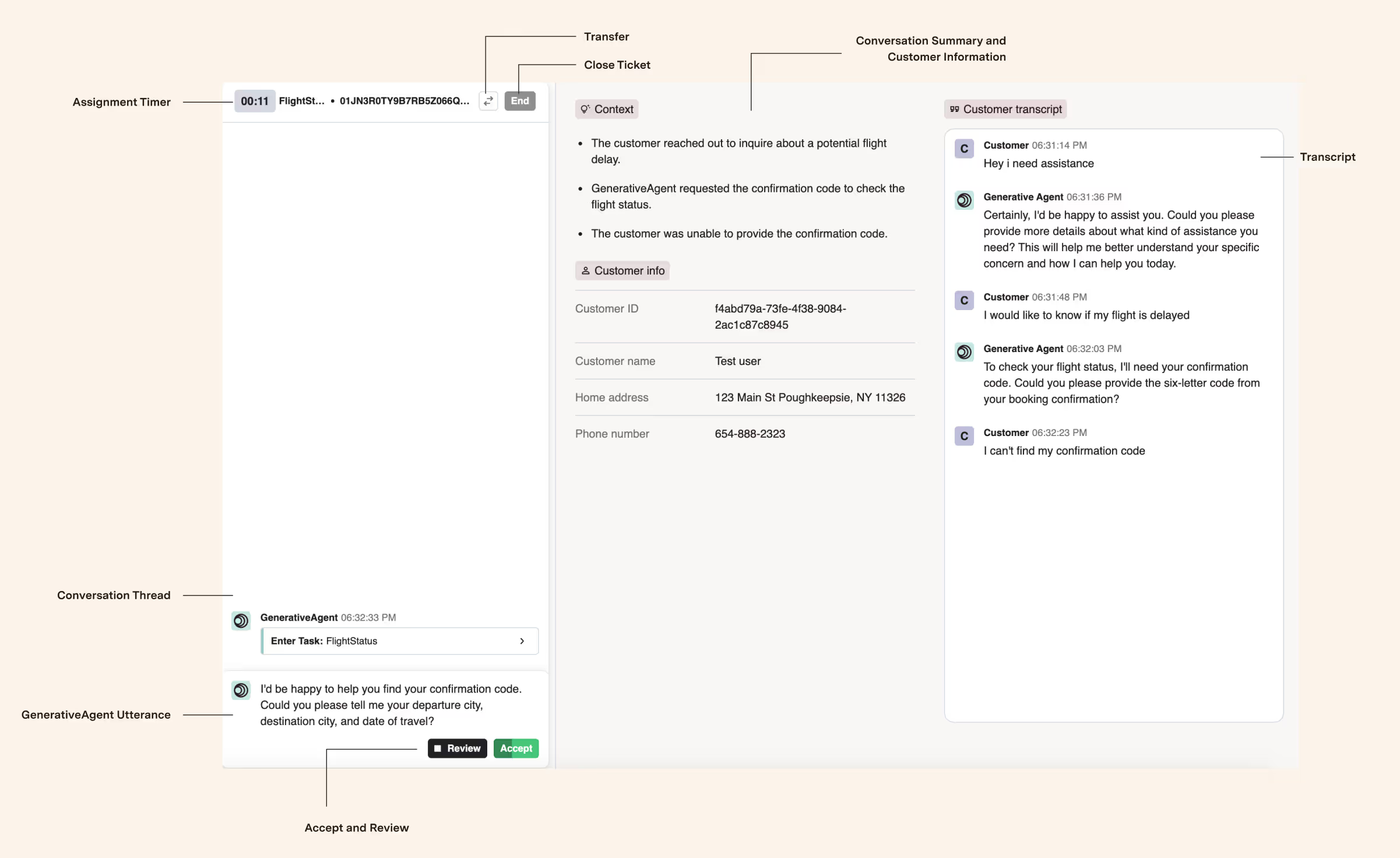

GenerativeAgent already has an innovative Human-in-the-Loop Agent (HILA™) workflow to enable real-time human-AI collaboration. This feature allows GenerativeAgent to consult a human agent for guidance, information, or approvals, without transferring the customer.

Now, the Human-in-the-Loop Agent feature has a new option, Approver Mode, which lets human agents supervise and refine every GenerativeAgent response, in every chat, in real time before it’s delivered to the customer. Before any message from GenerativeAgent reaches a customer, a human agent approves, edits, or replaces it.

This ensures safe, on-brand interactions from day one. But the bigger benefit is that it accelerates AI training. GenerativeAgent learns from every revision your agents make to its responses, so it quickly evolves and improves performance.
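The approve, edit, or replace step can be pictured as a small review gate between the AI’s draft and the customer. This Python sketch is a hypothetical illustration, not ASAPP’s implementation: `Decision`, `Revision`, and `review` are invented names, and it simply assumes that every draft a human changed is saved as a (draft, final) pair that could later serve as a training signal.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"   # send the AI draft unchanged
    EDIT = "edit"         # send the human's revised version of the draft
    REPLACE = "replace"   # discard the draft and send the human's own message


@dataclass
class Revision:
    """A (draft, final) pair kept as a training signal (assumed mechanism)."""
    draft: str
    final: str
    decision: Decision


def review(draft: str, decision: Decision, human_text: Optional[str],
           log: list) -> str:
    """Return the message the customer will see, logging any human change."""
    if decision is Decision.APPROVE:
        final = draft
    elif human_text:
        final = human_text
    else:
        raise ValueError("EDIT and REPLACE need the human agent's text")
    if final != draft:
        log.append(Revision(draft, final, decision))
    return final


revisions: list = []
review("Your refund has been processed.", Decision.APPROVE, None, revisions)
review("Refund done.", Decision.EDIT,
       "Your refund has been processed. Is there anything else I can help with?",
       revisions)
print(len(revisions))  # 1
```

Only changed drafts are logged, so approvals cost nothing to record while every correction becomes an example of what the expert agent preferred.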

This approach delivers multiple benefits:

- Human oversight for every response: In the first weeks after launching a new use case, your best agents vet every message to ensure accuracy and relevance.

- Real-time correction of GenerativeAgent output: Human agents instantly fix errors and off-brand phrasing before the customer sees them.

- Continuous learning from top agents: GenerativeAgent improves by observing how expert agents edit or rewrite its responses.

- Boosted agent productivity: By focusing only on editing key moments instead of managing entire conversations, agents handle more sessions with greater efficiency.

- Coaching in action: HILAs serve as real-time mentors, shaping GenerativeAgent to emulate the tone, clarity, and empathy of your best performers.

The new HILA Approver Mode is ideal for piloting new use cases and achieving high-quality automated support with an added layer of safety. When you’ve reached a high degree of confidence in how GenerativeAgent is performing, you can switch out of Approver Mode to let GenerativeAgent work more independently while still keeping a human in the loop for guidance and approvals as needed.

Real-time monitoring to drive fine-tuning

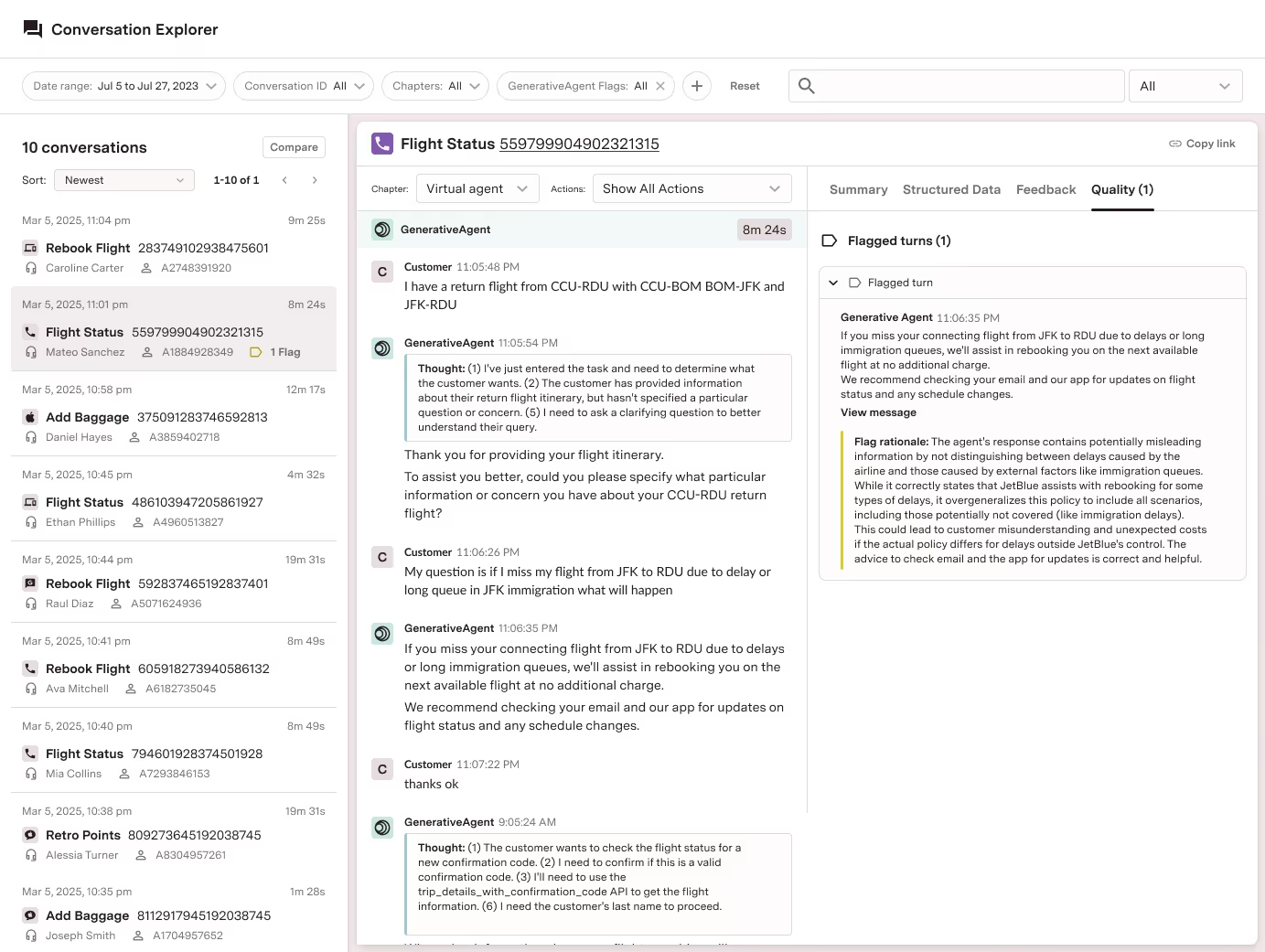

Performance reporting and analytics provide visibility into an AI agent’s accuracy and effectiveness. But real-time monitoring enables your team to identify and resolve potential issues quickly, before they become big problems.

That’s why we’ve introduced Conversation Monitoring, which automatically tracks all of the interactions GenerativeAgent handles to detect and flag inconsistencies. It scores and categorizes each flagged conversation to help your team prioritize reviews. For high-impact inconsistencies, it sends an immediate alert to your quality team.
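The score, categorize, prioritize, and alert flow described above resembles a simple triage queue. The Python sketch below is a hypothetical illustration: `Flag`, `triage`, the category labels, the 0.0 to 1.0 score scale, and the `ALERT_THRESHOLD` cut-off are all assumptions, since the actual scoring method is not described here.

```python
from dataclasses import dataclass
from typing import Callable

ALERT_THRESHOLD = 0.8  # hypothetical cut-off for a "high-impact" inconsistency


@dataclass
class Flag:
    conversation_id: str
    category: str   # e.g. "policy", "knowledge gap", "api error" (illustrative labels)
    score: float    # 0.0 (minor) to 1.0 (severe); scoring method assumed


def triage(flags: list[Flag], alert: Callable[[Flag], None]) -> list[Flag]:
    """Alert on high-impact flags immediately; return all flags, most severe first."""
    for f in flags:
        if f.score >= ALERT_THRESHOLD:
            alert(f)
    return sorted(flags, key=lambda f: f.score, reverse=True)


flags = [
    Flag("c-101", "knowledge gap", 0.35),
    Flag("c-102", "policy", 0.92),
    Flag("c-103", "api error", 0.60),
]
alerts: list[Flag] = []
queue = triage(flags, alerts.append)
print([f.conversation_id for f in queue])   # ['c-102', 'c-103', 'c-101']
print([f.conversation_id for f in alerts])  # ['c-102']
```

Passing the alert action in as a callable keeps the triage logic independent of how the quality team is actually notified.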

For each conversation, your team can dig into the details to see every action GenerativeAgent took, including the input it received, the knowledge articles it accessed, its reasoning, the API calls it made, and its output back to the customer. In-line indicators within the conversation flow mark the inconsistent behavior that caused the interaction to be flagged.

Aggregate reports, along with search and filtering tools, help your team identify trends, patterns, and anomalies more quickly.

These new features dramatically reduce the time your team spends reading conversations to pinpoint and diagnose problems, including knowledge base gaps, configuration issues, and task instructions that need refinement. As a result, they can fine-tune GenerativeAgent more quickly to maintain compliance and quality at scale.

Raising the bar for AI-automated customer service

We created GenerativeAgent to solve one of the toughest problems in enterprise customer service: providing excellent customer experiences at scale with fewer labor hours and lower costs, without sacrificing customer satisfaction. It was specifically designed to resolve complex customer issues safely, with hyper-personalized interactions through voice and chat, even in high-stakes environments. It has been enterprise-ready from day one.

These new features represent a significant step toward an even bigger vision: a true agentic CX ecosystem where AI is self-improving, enterprise-aligned, and central to your customer service operations.

That vision is only possible if enterprises can trust the AI. And trust requires precision, transparency, and oversight. With robust testing and simulation tools, increased human feedback to speed improvement, and real-time monitoring of all GenerativeAgent conversations, you get control, compliance, and confidence. No safety compromises. No performance trade-offs.

That sets a new standard for trust in AI-automated customer service.