Gonzalo Chebi

Gonzalo Chebi, PhD, is a Data Scientist at ASAPP, where he works on improving our products and models using multiple data sources, deriving insights from them, and analyzing the impact our features have for our customers. Before joining ASAPP, Gonzalo earned his PhD at the University of Buenos Aires, where he worked on robust approaches to logistic regression for high-dimensional data. He has also worked as a Quantitative Researcher at a fintech company focused on algorithmic trading strategies and models for cryptocurrencies.

A contact center case study about call summarization strategies

Agents at a contact center are typically required to write summary (or disposition) notes for each conversation. These notes serve several purposes. They provide context if the issue needs to be revisited in follow-up calls, so the customer does not have to repeat the problem and the agent saves time. Supervisors can also use these notes to see how often certain situations arise and to identify coaching opportunities. Good disposition notes include the customer’s contact reason, the key actions taken to resolve it, and the conversation outcome.

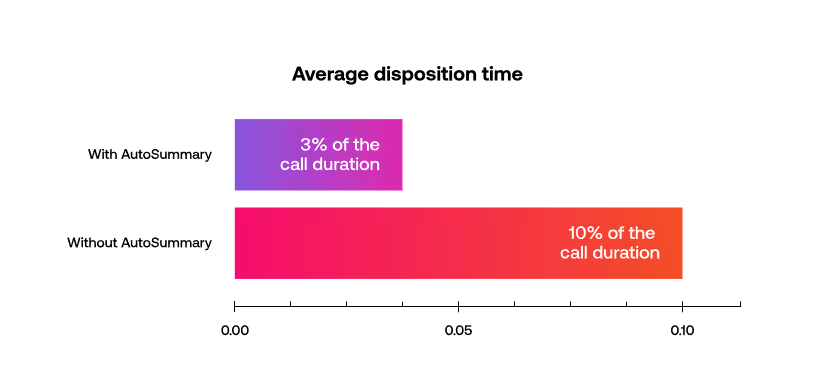

Taking these notes requires, on average, time equal to 10% of the call’s duration, and agents may capture only some aspects of the conversation. This is why many contact center leaders are looking for ways to reduce the time spent writing these notes while increasing their quality.

Findings from a large enterprise contact center

As in most contact centers, agents at this company wrote all notes at the end of each conversation. Aiming to increase agent utilization (i.e., the proportion of time agents spend talking with a customer), the company shifted to having agents write the notes during, rather than after, the conversation. It encouraged them to write these notes during “natural pauses” in the conversation. This way, agents significantly reduce the time between the end of one conversation and the start of the next.

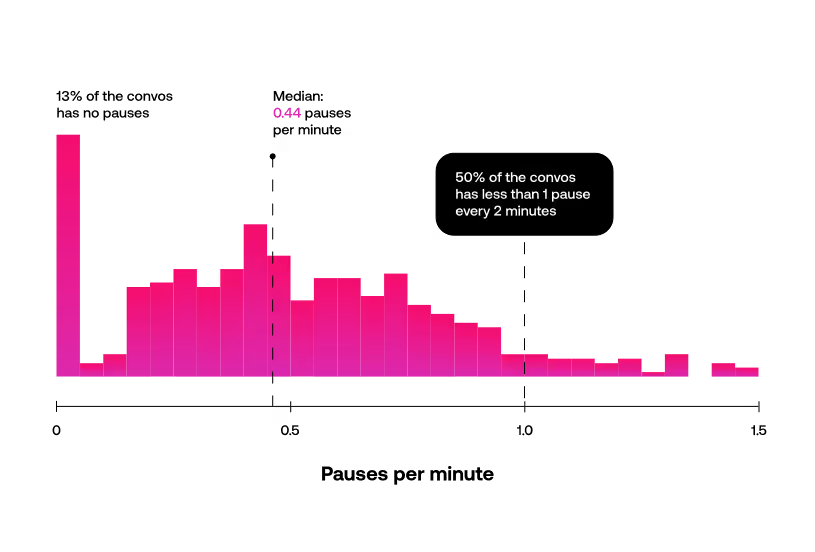

In reviewing call data, we learned that in a large share of voice calls, these natural pauses rarely occur. To quantify this, we first identify, for each conversation, the time intervals in which the customer or the agent is talking. This can be observed in Figure 1 below. Based on these customer and agent turn intervals, we can identify the pauses in the conversation. For this analysis, we keep only pauses that last at least 10 seconds.
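The pause-detection step can be sketched as follows. This is a minimal illustration, not the actual analysis code: it assumes each speaker’s turns are available as hypothetical `(start, end)` tuples in seconds (for example, from a diarized transcript), merges overlapping turns from both speakers into continuous speech spans, and reports the silent gaps between spans that meet the 10-second threshold from the text. The function names `merge_turns` and `find_pauses` are hypothetical, and silence before the first turn or after the last turn is not counted here, which is an assumption.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start_sec, end_sec) of one speaker turn

MIN_PAUSE_SEC = 10.0  # threshold from the analysis: keep pauses of at least 10 s


def merge_turns(turns: List[Interval]) -> List[Interval]:
    """Merge overlapping or touching turns from either speaker into speech spans."""
    merged: List[Interval] = []
    for start, end in sorted(turns):
        if merged and start <= merged[-1][1]:
            # Overlaps the previous span (e.g. crosstalk): extend that span.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def find_pauses(customer_turns: List[Interval],
                agent_turns: List[Interval],
                min_pause: float = MIN_PAUSE_SEC) -> List[Interval]:
    """Return silent gaps between speech spans lasting at least min_pause seconds."""
    speech = merge_turns(customer_turns + agent_turns)
    pauses: List[Interval] = []
    # Compare each speech span with the one that follows it.
    for (_, prev_end), (next_start, _) in zip(speech, speech[1:]):
        if next_start - prev_end >= min_pause:
            pauses.append((prev_end, next_start))
    return pauses


# Hypothetical toy call, not data from the study.
customer = [(0.0, 12.0), (40.0, 55.0)]
agent = [(11.0, 20.0), (55.0, 70.0), (82.0, 90.0)]
print(find_pauses(customer, agent))  # [(20.0, 40.0), (70.0, 82.0)]
```

Merging the two speakers’ turns first means a pause is counted only when neither party is talking, which matches the idea of a “natural pause” in the conversation.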

As the histogram in Figure 2 shows, we estimate that half of the calls have fewer than one pause every two minutes, and 13% of calls have no pauses at all. Moreover, during most of those pauses, agents are busy actively working on the issue (looking up information, filling in forms, etc.), so taking notes is not possible.
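The per-call statistics above can be illustrated with a short sketch. It assumes each call has already been reduced to a hypothetical `CallStats` record holding its duration and its number of pauses of at least 10 seconds; it normalizes the pause count to pauses per two minutes of call time and reports the share of calls below one pause per two minutes and the share with no pauses. The record type, function names, and toy numbers are all illustrative and do not come from the study.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class CallStats:
    duration_sec: float  # total call length in seconds (must be positive)
    n_pauses: int        # number of pauses lasting at least 10 s


def pauses_per_two_minutes(call: CallStats) -> float:
    """Normalize the pause count by call length: pauses per 120 s of call."""
    if call.duration_sec <= 0:
        raise ValueError("call duration must be positive")
    return call.n_pauses / (call.duration_sec / 120.0)


def summarize(calls: List[CallStats]) -> Dict[str, float]:
    """Share of calls below one pause per two minutes, and share with no pauses."""
    if not calls:
        raise ValueError("need at least one call")
    rates = [pauses_per_two_minutes(c) for c in calls]
    n = len(calls)
    return {
        "share_below_one_per_2min": sum(r < 1.0 for r in rates) / n,
        "share_no_pauses": sum(c.n_pauses == 0 for c in calls) / n,
    }


# Hypothetical toy calls, not data from the study.
calls = [CallStats(600, 0), CallStats(600, 3), CallStats(300, 4), CallStats(240, 2)]
print(summarize(calls))  # {'share_below_one_per_2min': 0.5, 'share_no_pauses': 0.25}
```

Normalizing by call length keeps long calls from looking pause-rich simply because they last longer; the list of per-call rates is also what a histogram like the one in Figure 2 would be built from.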

This means that when agents take notes in the middle of a conversation, they usually create an artificial pause. In other words, the time they would have spent on notes after the call is simply shifted into the call, lengthening each customer interaction. Moreover, when agents don’t finish their notes during the call, note-taking for that call spills over into the next one, which significantly increases the complexity of the agent’s work.

Having agents take notes during the conversation does not improve efficiency and may harm the overall customer experience.

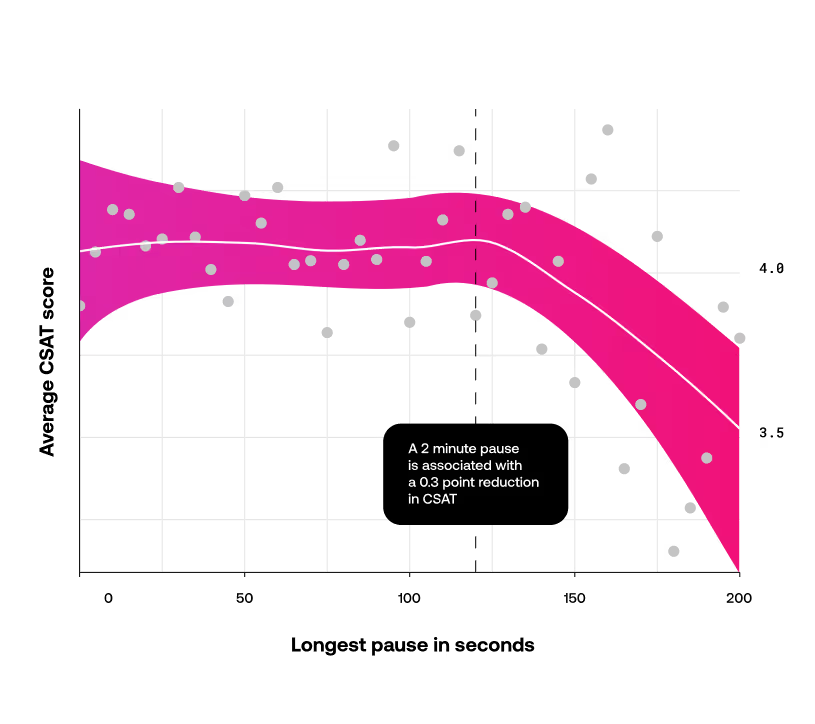

On the customer side, pauses in the middle of the conversation likely have negative consequences. Our data consistently shows that conversations with longer response times are associated with lower Customer Satisfaction (CSAT) scores, as Figure 3 shows (CSAT is measured here on a scale from 1 to 5). Beyond waiting through pauses, the customer (and the agent) also spend more time on the call overall.
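An analysis like the one behind Figure 3 can be sketched by bucketing conversations by response time and averaging CSAT within each bucket. This minimal version assumes each conversation is summarized as a hypothetical `(mean_response_time_sec, csat)` pair, with CSAT on the 1 to 5 scale from the text; the bucket edges and toy data are illustrative assumptions, and the result shows association only, as in the original analysis.

```python
from bisect import bisect_right
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Sequence, Tuple


def _label(i: int, edges: Sequence[float]) -> str:
    """Human-readable label for bucket index i given ascending edges."""
    if i == 0:
        return f"<{edges[0]:g}s"
    if i == len(edges):
        return f">={edges[-1]:g}s"
    return f"{edges[i - 1]:g}-{edges[i]:g}s"


def mean_csat_by_response_time(
    convs: Sequence[Tuple[float, int]],
    edges: Sequence[float] = (10.0, 20.0, 30.0),  # hypothetical bucket edges (s)
) -> Dict[str, float]:
    """Average CSAT (1-5) per response-time bucket.

    convs: (mean_response_time_sec, csat_score) for each conversation.
    """
    buckets: Dict[int, List[int]] = defaultdict(list)
    for resp_time, csat in convs:
        if not 1 <= csat <= 5:
            raise ValueError(f"CSAT must be on a 1-5 scale, got {csat}")
        # bisect_right counts how many edges are <= resp_time, i.e. the bucket index.
        buckets[bisect_right(edges, resp_time)].append(csat)
    return {_label(i, edges): mean(vals) for i, vals in sorted(buckets.items())}


# Hypothetical toy conversations, not data from the study.
convs = [(5.0, 5), (8.0, 4), (15.0, 4), (25.0, 3), (40.0, 2), (35.0, 1)]
print(mean_csat_by_response_time(convs))
```

Bucketing rather than fitting a single line makes it easy to see whether CSAT drops steadily or only beyond some response-time threshold.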

The value of automating call summaries

We have shown that taking notes during the call does not improve agent efficiency and may harm the overall customer experience. Automating conversation summaries, by contrast, can reduce or completely eliminate agents’ dispositioning time while also improving the overall quality of the summaries.

The company in this case study is initially displaying the automated summaries in its agent desk, where agents can review and edit them.

This has significantly reduced the time agents devote to this task.

As confidence in the AutoSummary model grows, companies may choose to remove manual review from agents’ task lists entirely and capture the additional efficiency gains. Other customers skip this step and use AutoSummary without any agent involvement from the start.