Gina Clarkin

Gina Clarkin is a product marketing manager at ASAPP. She works to bring advanced technologies to market that help companies better solve real-world problems. Prior to joining ASAPP, she honed her product marketing craft at tech companies with firmware, wireless, and contact center solutions.

How to Equip Your Contact Center for Human / AI Collaboration

What most AI strategies miss—the human factor

Preparing for the workforce transformation AI agents will bring

AI is transforming customer service, but not always for the better. Gartner recently predicted that over 40% of AI agent initiatives will be scrapped by 2027, leaving contact centers frustrated and customers dissatisfied. According to Gartner, the primary causes include poor integration with existing workflows, lack of human oversight, and insufficient alignment with business goals.

Even when AI can handle complex tasks, human involvement remains critical for certain interactions. Yet many contact centers still deploy AI in isolation, creating bottlenecks and diluting the transformative potential of generative AI in customer service.

Enter Human-in-the-Loop Agent (HILA™)—a proven approach that blends AI efficiency with real-time human expertise, ensuring speed to resolution, accuracy, and exceptional customer experiences.

In the next section, we’ll explore why traditional human-in-the-loop approaches fall short and how HILA is fundamentally different, backed by analyst validation and real-world results.

The Challenge: Why Current AI Approaches Fall Short

While generative AI agents can now automate even complex interactions, contact centers still struggle when AI agents operate in AI-only systems that were not designed for true human/AI collaboration. Common pitfalls include:

- Limitations of the status quo: Nearly every “expert” source describes effective human-in-the-loop as a seamless transfer of conversation and context from AI to human. Vendors support this approach as the only option.

- Loss of automation efficiencies: AI-only systems automatically escalate to a human agent, putting customers back in the queue to wait for an available agent. This results in disjointed and frustrating experiences that still incur the cost of a live agent for a majority of the interaction.

- Workflow misalignment: AI tools not integrated with human processes cause additional inefficiencies, as AI transfers to live agents across systems, losing context and any ability for the AI to learn from human inputs.

- Inconsistent experiences: Customers receive variable outcomes when human judgment isn’t applied, or is applied only within a high-friction experience.

- Data & integration problems: Integrating generative AI with legacy customer service platforms is often difficult, resulting in data silos and inconsistent experiences for both customers and agents.

Nearly 95% of generative AI pilot programs fail to produce measurable business outcomes, largely due to poor integration with existing workflows.

— MIT, The GenAI Divide: State of AI in Business 2025

This gap isn’t just a technology problem—it’s an operational one. Without the ability to blend human expertise with AI, in the right circumstances and to the right extent, many initiatives stall or fail entirely.

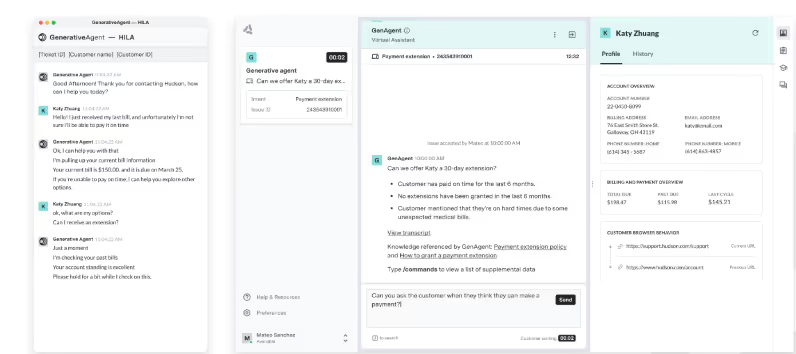

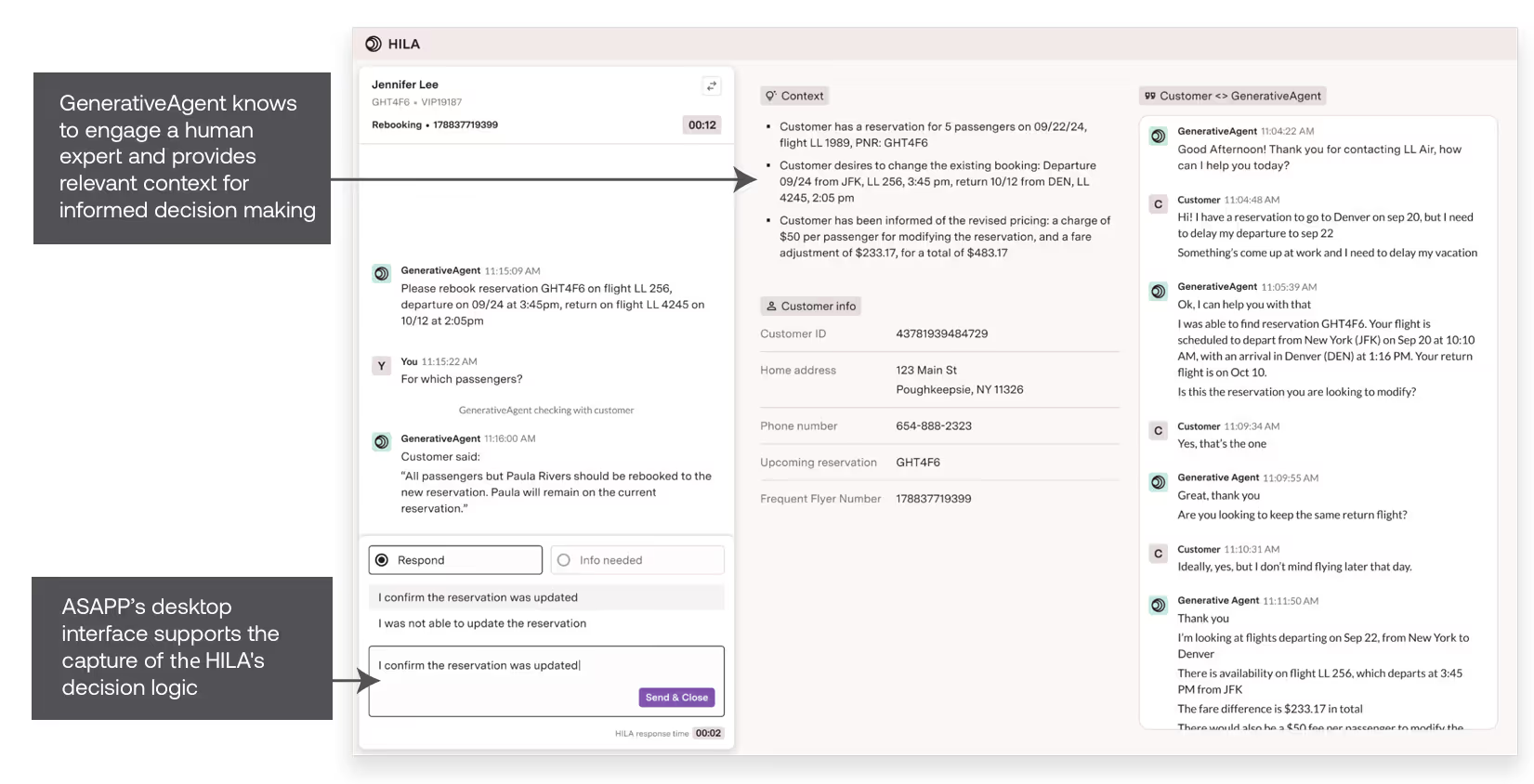

HILA™ (Human-in-the-Loop Agent): A proven approach

ASAPP Human-in-the-Loop Agent (HILA™) addresses these challenges by embedding humans directly into AI workflows to unblock the AI. Let that sink in: humans supporting AI, not the other way around, with a UI/UX designed specifically for this human/AI collaboration. Instead of taking a transferred interaction from the AI agent whenever it hits a roadblock, a HILA supports the AI agent in real time. The AI agent handles complex interactions, with a human helping behind the scenes only when asked (for exceptions, approvals, or actions in other systems that the AI can’t access), and the AI continues to resolve the interaction. The AI, in this case GenerativeAgent, is smart enough to know when and how to involve the HILA but keeps ownership of the interaction.
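To make the pattern concrete, here is a minimal Python sketch of an AI agent that consults a human without transferring the conversation. It is an illustration only, not ASAPP's implementation: `Interaction`, `handle_step`, `NEEDS_HUMAN`, and the step names are all hypothetical, and the human is simulated by a simple callback.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical step kinds the AI cannot complete alone (taken from the text:
# exceptions, approvals, and actions in systems the AI can't access).
NEEDS_HUMAN = {"exception", "approval", "external_system_action"}


@dataclass
class Interaction:
    customer_id: str
    owner: str = "ai"  # ownership never changes: there is no transfer
    log: List[str] = field(default_factory=list)


def handle_step(
    interaction: Interaction,
    step_kind: str,
    detail: str,
    ask_human: Callable[[str], str],
) -> str:
    """Resolve one step, consulting a human behind the scenes only when blocked."""
    if step_kind in NEEDS_HUMAN:
        answer = ask_human(detail)  # the human unblocks the AI
        interaction.log.append(f"human:{step_kind}:{answer}")
    else:
        answer = "handled"  # the AI resolves the step itself
        interaction.log.append(f"ai:{step_kind}:{answer}")
    return answer


# Usage: a stand-in human who approves every request.
interaction = Interaction(customer_id="c-1")
handle_step(interaction, "answer_question", "baggage policy", lambda q: "approved")
handle_step(interaction, "approval", "waive change fee", lambda q: "approved")
```

The point of the sketch is the `owner` field: the human contributes an answer to a single step, and the interaction stays with the AI from start to finish.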

In its Approver Mode, HILA can provide oversight for each GenerativeAgent response before it’s sent to the customer, and the inputs actually help train the AI. HILAs can review and accept or edit each AI response. Every agent correction becomes valuable feedback to improve GenerativeAgent over time. This is ideal for launching new intents, so organizations can build confidence in the accuracy and safety of GenerativeAgent as it learns, then make the shift to full HILA.
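One way to track whether Approver Mode feedback is paying off is to watch the edit rate over time. The sketch below assumes that edit rate means the share of AI drafts a reviewer changed before sending; the source does not define the metric, so treat that definition, the function names, and the sample data as assumptions.

```python
from typing import Dict, List


def edit_rate(reviews: List[Dict[str, str]]) -> float:
    """Share of AI drafts that the human reviewer changed before sending."""
    if not reviews:
        return 0.0
    edited = sum(1 for r in reviews if r["final"] != r["draft"])
    return edited / len(reviews)


def relative_reduction(before: float, after: float) -> float:
    """Relative drop from `before` to `after` (0.5 means a 50% reduction)."""
    if before == 0:
        return 0.0
    return (before - after) / before


# Made-up sample data: each review pairs the AI draft with what was sent.
week_1 = [
    {"draft": "a", "final": "a"},
    {"draft": "b", "final": "b (edited)"},
    {"draft": "c", "final": "c (edited)"},
    {"draft": "d", "final": "d"},
]
week_2 = [
    {"draft": "e", "final": "e"},
    {"draft": "f", "final": "f (edited)"},
    {"draft": "g", "final": "g"},
    {"draft": "h", "final": "h"},
]

rate_1 = edit_rate(week_1)
rate_2 = edit_rate(week_2)
drop = relative_reduction(rate_1, rate_2)
```

A falling edit rate on a given intent is one signal that the intent may be ready to move from per-response approval to on-demand human support.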

Key benefits of HILA

- Faster resolution: Combining AI efficiency with human assistance behind the scenes accelerates problem-solving.

- Scalable personalization: Agents supported by AI can deliver faster, more consistent, personalized experiences.

- Concurrency: Because the HILA is handling questions, approvals, and an occasional task, they can easily handle two or three interactions, or more, at the same time.

- Frictionless experiences: Customers no longer have to endure awkward forced transfers, repetitive conversations without context, or extra wait time for a human agent. They get fast resolution and a high-quality experience.

Analyst Validation: Why Industry Experts Endorse Human-AI Collaboration

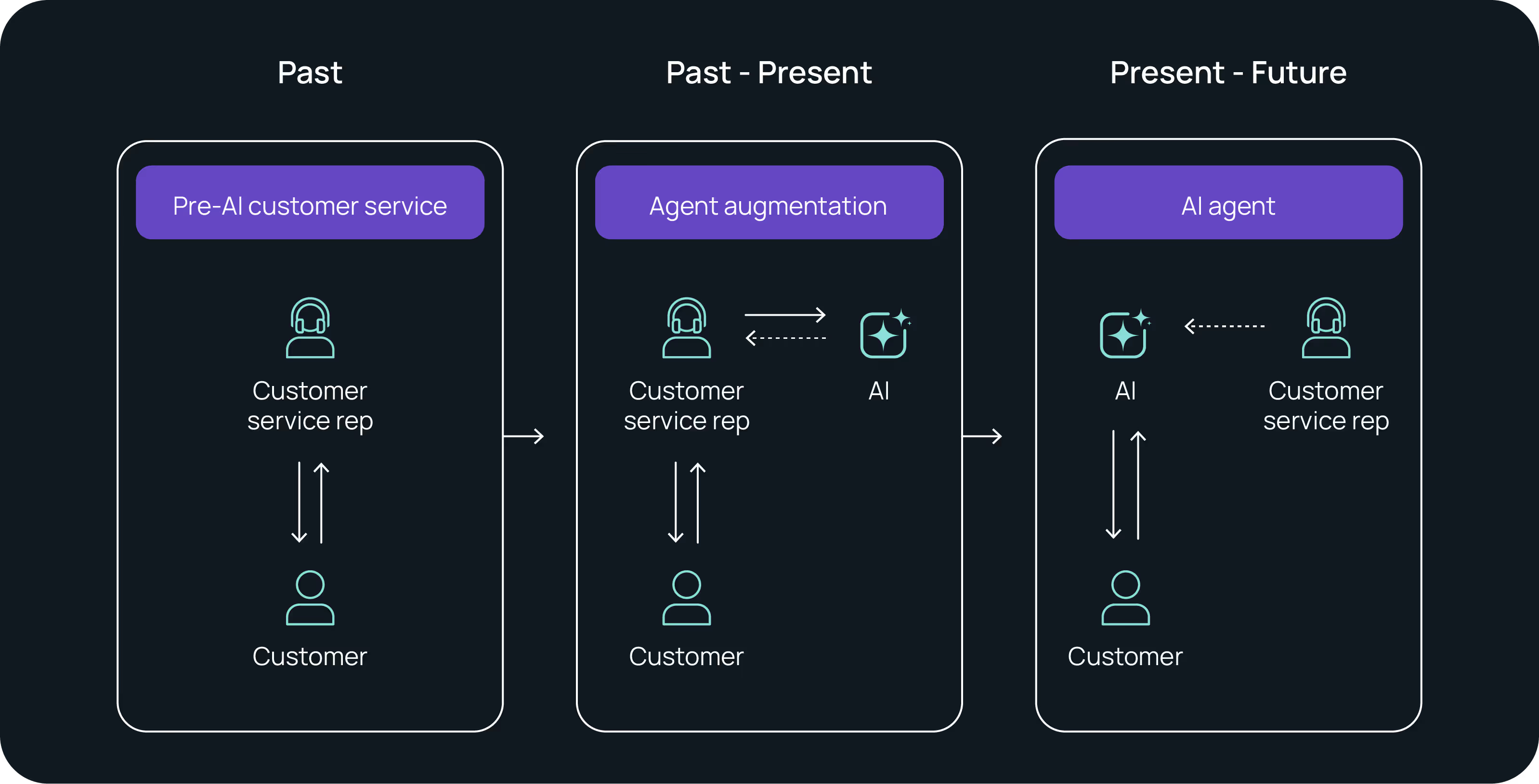

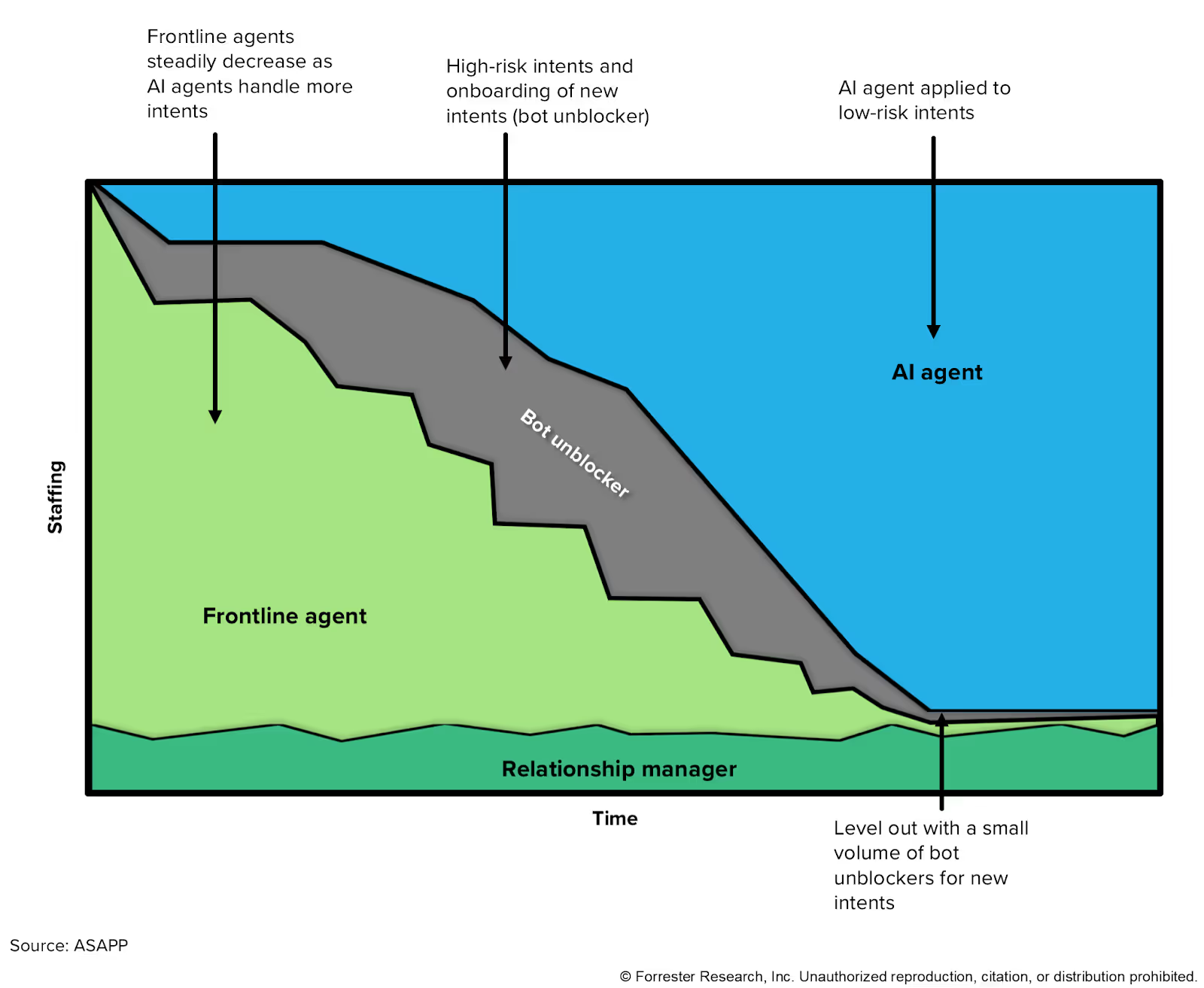

Analysts agree: AI agents will reshape the customer service workforce. The real value lies not in an AI-only approach but in an AI-led one, where AI and humans work seamlessly together. Recent reports underscore the critical role of human-AI collaboration:

First, brands must transition to allowing AI to lead customer interactions end-to-end. When an exception arises, the AI must consult with human subject matter experts (SMEs) in the background who will “unblock” the AI in real time.

This is a completely new interaction paradigm and will require a new workspace optimized for enabling (and capturing) human judgment calls, not handling conversations.

— Forrester, “Tacit Knowledge Will Power the AI-Led Contact Center,” January, 2025

Evidence in Action: Real Results with HILA

HILA isn’t just theory—it’s delivering real-world impact today. Here’s what contact centers see when they adopt this approach.

Customer Success Snapshots:

- A leading airline launched its first GenerativeAgent intent (use case) with HILA Approver Mode to ensure GenerativeAgent reflected its brand in every conversation. Containment stayed steady, CSAT was 91%, and edit rates dropped 23%, validating that HILA input was effectively helping the AI learn and improve.

- A travel & hospitality customer piloted HILA across 5,000 monthly interactions and saw a 60% reduction in average agent handle time (AHT) in week one alone and a 50% reduction in potential CS compliance issues over the first 60 days.

- A worldwide leader in online protection uses HILA in its customer installation use case to help address issues, like unique error codes, that require human expertise. Instead of having a customer wait in the queue for a live agent, GenerativeAgent asks a HILA for approval to send a unique link to the customer’s email to resolve the issue. The HILA confirms, sends the email, and GenerativeAgent continues to resolve the issue with the customer.

Another added benefit is concurrency—real concurrency—as HILAs can easily manage two or three (or, in some cases, four or five) interactions at the same time, depending on the experience of the human agent and the workflows involved.

HILA doesn’t just help contact centers move faster; it helps train AI to reduce risk and makes the human agent’s work more rewarding.

Actionable Takeaways for Contact Center Leaders

If you’re evaluating AI for your contact center, keep these principles in mind:

- Don’t rely on automation alone. AI is powerful, but human expertise and oversight ensure speed, accuracy, and customer trust.

- Measure beyond cost savings. Look at resolution speed, containment without repeat contacts (first-contact resolution, or FCR), and CSAT. If you use HILA for oversight, also track edit rate reduction.

- Choose solutions with intentional design for human/AI collaboration. Platforms that enable human-AI workflows where a HILA can unblock AI outperform AI-only tools.

- Think of human/AI collaboration not only within the customer experience but also across contact center operations, including how to manage and optimize AI-led workflows.

Conclusion: The Future of AI in Contact Centers is Human + AI

AI is here to stay—but the organizations winning with AI aren’t the ones removing humans from the equation. They’re the ones integrating AI with human expertise in real time, reducing risk and accelerating performance.

Companies will transition interaction volumes to AI gradually; as the AI model learns, it will be supported by behind-the-scenes human ‘unblockers.’

— Forrester, “AI Agents Will Transform The Customer Service Workforce,” June, 2025

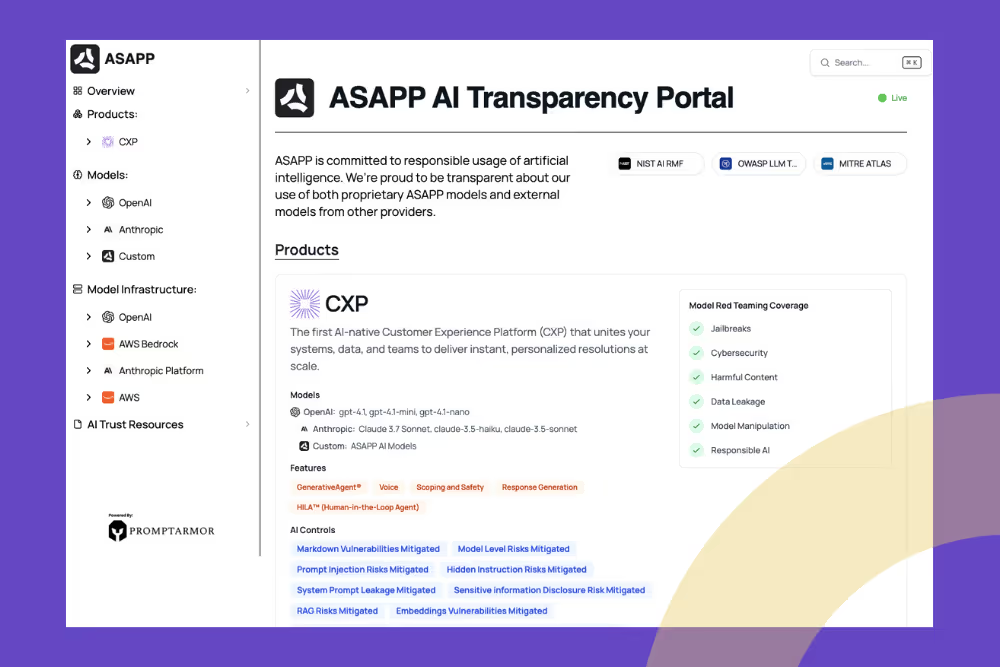

ASAPP is proud to have contributed to this research. Our approach to generative AI is grounded in real-world enterprise contact center performance. ASAPP’s GenerativeAgent is designed for agent-AI collaboration from the ground up—featuring human-in-the-loop workflows that keep seasoned agents engaged in unblocking AI, handling complex exceptions, and optimizing outcomes. We believe the future of CX lies in aligning automation with human judgment, trust, and control.

We invite you to read both reports to better understand how AI will reshape customer service roles—and how forward-thinking organizations can lead this transformation.

HILA isn’t just a buzzword—it’s a proven approach validated by industry analysts and real-world results. Ready to learn more?


Access the complimentary Forrester reports to see why Human-AI collaboration is the key to AI success.

- AI agents will transform the customer service workforce

- Tacit knowledge will power the AI-led contact center

Book a demo to experience how HILA can transform your contact center operations.

Get critical visibility into GenerativeAgent behavior with Conversation Explorer

AI-driven customer interactions are becoming the new standard in customer service. But how do you really know if your AI agent is doing a good job? Are its responses always accurate? Is it truly understanding customers and resolving their issues? Ensuring consistent quality across all those automated conversations can feel daunting. That's where Conversation Explorer and Conversation Monitoring come in. Together, these tools are designed to give you a clear picture of your AI-powered customer service and the confidence that GenerativeAgent is delivering quality at scale.

The visibility challenge

While many providers offer “life-like” or “concierge” AI agent experiences, there are significant challenges that are unique to ensuring these new autonomous solutions behave as intended, especially when it comes to brands entrusting them with their customer experience. We’ve discussed safety in previous blogs, so let’s focus on the visibility challenge here.

- The "Black Box" Problem: Many AI agents, especially those driven by large language models (LLMs), operate as "black boxes," making it difficult to understand their decision-making processes.

- Complexity of Agent Workflows: Agent workflows often involve multi-step processes like LLM calls, retrieval-augmented generation (RAG), and calls to external APIs, making tracing challenging.

- Monitoring and Observability Challenges: Monitoring gaps arise when monitoring tools aren't unified across all components, making it difficult to diagnose issues.

- Silent Failures: Without proper monitoring and notification, potential problems can go undetected and undiagnosed, becoming "silent failures" until they cause major issues. (A silent failure not only creates a bad experience for one customer; because it goes unnoticed, it will likely cause similar issues for other customers.)

- Trust and User Understanding: It's hard for users to verify what AI agents are doing, and even if that information is surfaced, it may be difficult for non-experts to understand the agent's "thought process". This can lead to a lack of trust.

- Auditability Issues: Tracing and auditing agent actions can be difficult, even with autonomous agents that perform complex tasks involving human oversight.

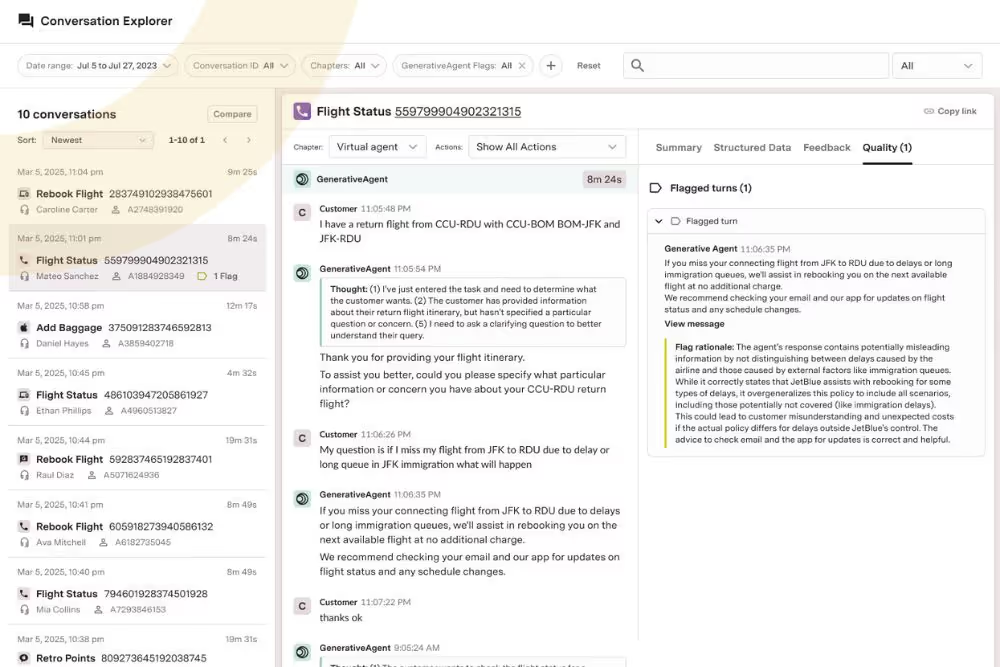

Conversation Explorer helps you avoid the visibility challenge. It isn't just a basic log of interactions; it's a way to truly see how your GenerativeAgent handles conversations, offering a detailed view of each one to help you continuously improve the automated experience for customers. When paired with Conversation Monitoring, it forms a robust system for ensuring quality.

See what's happening: Transparency and context

At its core, Conversation Explorer lets you look at every conversation transcript alongside GenerativeAgent’s thought process. This includes seeing every action the AI takes, which gives you useful context. This transparency is key for identifying potential issues and fine-tuning your GenerativeAgent configuration to improve the customer experience.

With this, you can:

- Better Understand Customers: By seeing real conversations, you can get a better handle on what customers need and prefer.

- Improve Service Quality: The tool helps you pinpoint specific areas where your customer service interactions could be more effective.

- Make Decisions with Data: Having a thorough look at conversations helps you make informed choices based on actual performance.

Understand model actions: AI decision-making

A helpful feature in Conversation Explorer is its ability to show you how your GenerativeAgent makes decisions and uses the tasks, knowledge bases, and APIs you've set up. You can turn on specific model actions from the control panel to follow the AI's reasoning process and see its decision points within the conversation flow.

Need more detail? Just click on an inline model action. This brings up more information, like the full response from a function. This offers deep insight into the specifics of each AI action right within the conversation, helping you better understand how GenerativeAgent processes information and responds.

Find issues: confidence in quality with Conversation Monitoring

Conversation Explorer works in concert with Conversation Monitoring to deliver quality assurance at scale. Conversation Monitoring works automatically in real time behind the scenes, checking 100% of your GenerativeAgent's interactions for suspected inconsistencies. When a high-impact anomaly is detected, it flags the conversation and sends a real-time alert to notify the right team members.

These real-time alerts link directly to the interaction, allowing team members to jump straight into the flagged conversation in Conversation Explorer for quick review to determine if action is required. From Conversation Explorer, you can:

- Quickly Locate Issues: Filter to find conversations that have been flagged for review.

- Understand Why It Was Flagged: The "Quality tab" allows you to review all interactions flagged within a conversation and navigate directly to that specific point in the transcript. This helps you diagnose what happened and why it was flagged by the monitoring system. You can then check if the flagged item was truly an error and understand the reasoning behind the GenerativeAgent's actions. Conversation Monitoring assesses issues like appropriate resource use, information accuracy, understanding customer intent, and potential misrepresentation.

- Reduce Manual Review: Because Conversation Monitoring automatically flags anomalies, assigns an impact score, and provides direct links, it cuts down on the need for extensive manual review.

This information also flows into GenerativeAgent reporting, so users can see a higher-level view of performance over time, while using Conversation Explorer to dig deeper into flagged conversation examples.

This combined approach allows your teams to investigate, quickly find and address errors, and identify possible gaps in your own content to continuously improve the GenerativeAgent's configuration and overall performance. It helps you optimize your GenerativeAgent while building confidence in its capabilities. Conversation Monitoring can even help to identify categories of flagged conversations, highlighting areas where you have opportunities to improve.
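As a rough illustration of the review workflow described in this section, the Python sketch below filters flagged conversations by impact score and groups them by category. It does not use any ASAPP API: the `Flag` record, its fields, the 0-to-1 impact scale, and the 0.7 threshold are all assumptions made for the example.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Flag:
    conversation_id: str
    category: str    # e.g. "information accuracy"
    impact: float    # hypothetical impact score between 0 and 1
    turn_index: int  # position in the transcript the flag points to


def review_queue(flags: List[Flag], min_impact: float = 0.7) -> List[Flag]:
    """Keep flags at or above the threshold, highest impact first."""
    kept = [f for f in flags if f.impact >= min_impact]
    return sorted(kept, key=lambda f: f.impact, reverse=True)


def counts_by_category(flags: List[Flag]) -> Dict[str, int]:
    """Count flags per category to show where improvement opportunities cluster."""
    return dict(Counter(f.category for f in flags))


# Made-up sample flags.
flags = [
    Flag("conv-1", "information accuracy", 0.9, 4),
    Flag("conv-2", "customer intent", 0.4, 2),
    Flag("conv-3", "information accuracy", 0.75, 7),
]
queue = review_queue(flags)
by_category = counts_by_category(flags)
```

Sorting by impact keeps reviewers on the conversations most likely to need action, while the category counts hint at where content or configuration gaps may sit.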

In Summary

We’ve been intentional in our design for collecting, processing, and sharing information about GenerativeAgent actions. Conversation Explorer, especially when used hand-in-hand with Conversation Monitoring, is a practical tool for anyone who wants to truly understand, adjust, and improve their AI-driven customer interactions. By offering clear views, insights into how the GenerativeAgent thinks, and an efficient way to review flagged conversations, it helps teams maintain quality assurance at scale and continue to make the GenerativeAgent customer experience even better…and avoid the visibility challenge.

Will the real human in the loop please stand up?

Even if you weren’t around in the 1950s, chances are you’ve heard some variation of this line from the classic television game show, To Tell the Truth. In the show, celebrity panelists were introduced to three contestants, each claiming to be the same person. The real person had to tell the truth, while the impostors could lie. After a round of questioning, the panelists voted on who they believed was the real deal. The big reveal—often a surprise—delivered the show's signature drama. The format proved compelling enough to last through multiple revivals, staying on air in various forms until 2022.

Today, a new version of this guessing game is playing out in the world of generative AI for customer service. But unlike the lighthearted game show, the stakes here are much higher—especially for companies whose competitive edge depends on delivering outstanding customer experiences.

What is a HILA?

HILA, or Human-in-the-Loop Assistance, is a concept rooted in the AI/ML world that is now critical to generative AI contact center solutions. As providers race to bring AI agents into customer-facing roles, there’s an understandable focus on mitigating risk—ensuring responses are safe, compliant, and on-brand.

There are legitimate challenges to overcome for generative AI to truly revolutionize the contact center, and ensuring generative AI agent success with human-in-the-loop is part of this transformation.

There are multiple interpretations of HILA, but the common thread is clear: AI should assist humans, not the other way around. HILA is about amplifying human decision-making—not requiring it for every single task.

Let’s Meet Our Contestants

HILA #1

This version of HILA prioritizes safety by requiring human agents to approve AI-generated responses before they reach customers. While this approach ensures accuracy and compliance—particularly valuable when monitoring new or sensitive intents—it also introduces friction. The approval workflow adds delays, increases handling time, and reduces the cost-efficiency of automation, even for interactions that the AI could likely manage reliably on its own.

HILA #2

Here, AI handles interactions until it encounters a scenario it can't confidently resolve—like comparing billing cycles or navigating nuanced policy exceptions. At that point, it hands off to a human, typically with a detailed summary to streamline the transition. While helpful in certain situations, this method can degrade the customer experience by introducing a forced transfer and increasing reliance on more expensive human agents.

HILA #3

There’s something different about this HILA – it’s designed to collaborate flexibly with the AI agent, getting involved behind the scenes when necessary, unblocking the AI, then letting the AI handle the rest to successful resolution.

So, who is the "real" human-in-the-loop?

Okay, so we spoiled the surprise. But imagine flipping the script: AI leads the interaction, and humans support it—stepping in with guidance or approval when necessary, but without taking over the conversation. No hard handoffs. No context lost. Just continuous collaboration behind the scenes.

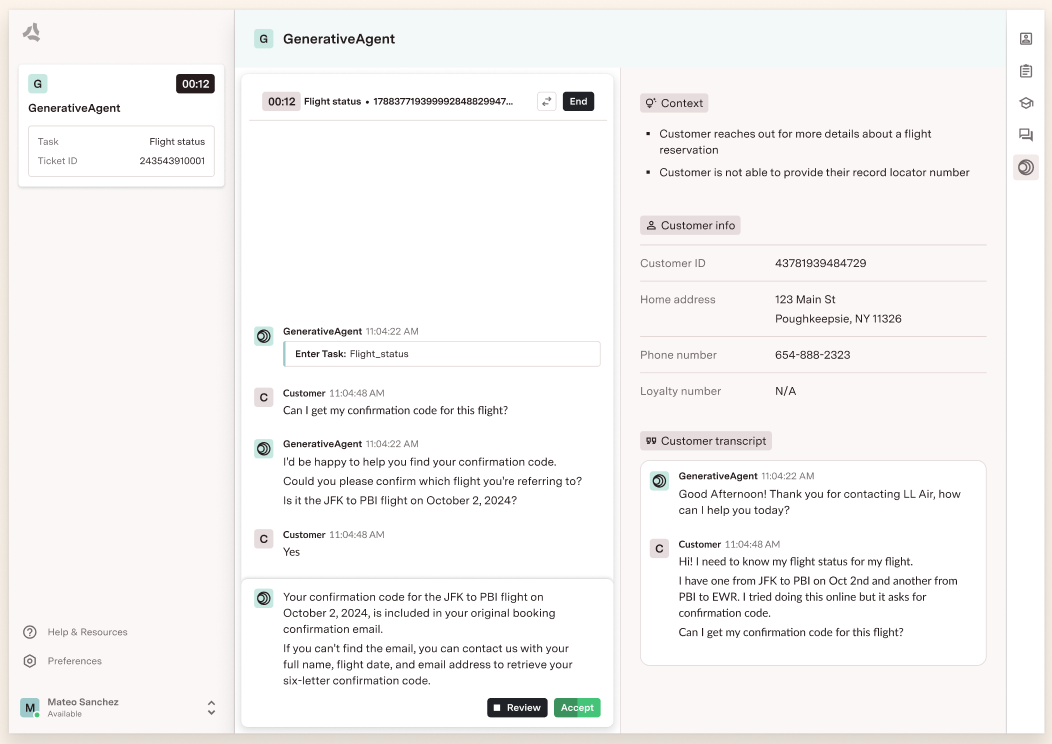

This is the GenerativeAgent® HILA paradigm: Human-in-the-Loop Agent. Human agents supporting AI supporting customers.

Let that sink in.

By putting humans in a supporting role—where their judgment elevates the AI rather than replaces it—we unlock scalable, safe automation while maintaining high-quality customer interactions.

How ASAPP’s GenerativeAgent leverages HILA

Here are the approaches we take with GenerativeAgent to put the HILA model into practice—blending human input with AI automation to support better outcomes.

Real-Time Human-AI Collaboration: GenerativeAgent reaches out to human agents in real time when it needs clarity, input, or permission—without handing off the conversation. This preserves continuity and keeps the interaction fully automated from the customer’s point of view.

Agent-Centered Workspace Design: A next-gen interface gives human agents relevant context, smart summaries, and intuitive tools. It captures their tacit knowledge and enables new ways of collaborating with AI—without burdening them with repetitive tasks.

Continuous Optimization: GenerativeAgent captures human decision rationale to learn and improve over time, elevating automation’s potential for your contact center without additional configuration effort.

A workspace built for this new paradigm

This new model—humans assisting AI assisting customers—requires a different kind of workspace. Not one built around handling conversations, but one designed to support judgment calls.

ASAPP developed its HILA experience through deep research with contact center agents and direct input from enterprise customers. The result: an interface designed specifically for human-in-the-loop agents, enabling fast, fluid collaboration with AI in a streamlined workflow.

Flexible, role-based human-in-the-loop design

GenerativeAgent lets you define the role of the human in the loop and decide how they support AI across your workflows. You stay in control of which intents AI handles, when and how it should seek human help, and what happens when something goes wrong.

Why it matters for your contact center strategy

When GenerativeAgent HILA is built into the core of your AI strategy, it changes what’s possible. Contact centers gain practical advantages that make scaling GenerativeAgent and automation safer, smarter, and more manageable. With GenerativeAgent HILA, they can:

- Expand automation safely: Define which intents the AI should handle and exactly what happens when it hits a roadblock.

- Improve AI performance: Leverage human feedback to continuously optimize GenerativeAgent’s responses and decision-making.

- Increase capacity while maintaining quality: Reduce reliance on expensive human agents by letting GenerativeAgent handle complex interactions, with intelligent human support as needed.

- Accelerate adoption and expansion: Bridge gaps in data, policy, and tooling with real-time human support—so you can roll out automation faster and at greater scale.

Common scenarios for human support

- Knowledge or system gaps: When GenerativeAgent lacks access to certain data or APIs, a human agent can fill in the blanks—or directly execute system tasks AI can't access.

- Authorization rules: For sensitive actions—like offering discounts or closing accounts—humans can provide explicit approvals based on company policy.

- Customer request: If a customer asks for a human, you can choose to transfer—or have the AI consult a human to keep the interaction moving, especially useful when queues are long.

- System-initiated triggers: When something’s unclear (e.g., API errors, ambiguous data), GenerativeAgent can ask a human for help mid-interaction—resolving the issue without starting over.

This kind of flexible, consultative support model helps organizations adopt automation faster and expand its use across more complex workflows—without compromising on control or quality.
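The scenarios above amount to a small routing policy. Here is one way it could be sketched in Python, purely as an illustration: the `Trigger` and `Action` names, the `decide` function, and the 300-second "long queue" threshold are hypothetical and not part of GenerativeAgent's configuration. The sketch reads "especially useful when queues are long" as: consult on a customer request when the queue is long, otherwise transfer.

```python
from enum import Enum


class Trigger(Enum):
    KNOWLEDGE_OR_SYSTEM_GAP = "knowledge_or_system_gap"
    AUTHORIZATION = "authorization"
    CUSTOMER_REQUEST = "customer_request"
    SYSTEM_INITIATED = "system_initiated"


class Action(Enum):
    CONSULT = "consult"    # ask a human behind the scenes; the AI keeps the conversation
    TRANSFER = "transfer"  # hand the conversation to a human agent


def decide(trigger: Trigger, queue_wait_s: int = 0, long_queue_s: int = 300) -> Action:
    """Pick how a human gets involved for a given trigger."""
    if trigger is Trigger.CUSTOMER_REQUEST:
        # Assumed policy: honor the transfer request unless the queue is long.
        if queue_wait_s >= long_queue_s:
            return Action.CONSULT
        return Action.TRANSFER
    # Gaps, approvals, and system-initiated triggers are resolved mid-interaction.
    return Action.CONSULT


short_queue_choice = decide(Trigger.CUSTOMER_REQUEST, queue_wait_s=30)
long_queue_choice = decide(Trigger.CUSTOMER_REQUEST, queue_wait_s=600)
approval_choice = decide(Trigger.AUTHORIZATION)
```

In practice the policy would be set per intent by the business; the sketch only shows that the choice between consulting and transferring can be made explicit rather than left to a default escalation.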

Final thoughts

Generative AI is poised to reshape the contact center—but unlocking its full potential requires a smarter approach to human-in-the-loop collaboration. The HILA model ensures your AI is not only ready for production today, but also able to continuously improve and scale tomorrow.

As the technology evolves, so does the role of the human agent. It’s no longer about choosing between AI or people—it’s about empowering both to do what they do best. With the right human-in-the-loop strategy, companies can find the ideal balance between automation and judgment, leading to more efficient operations and better customer experiences.