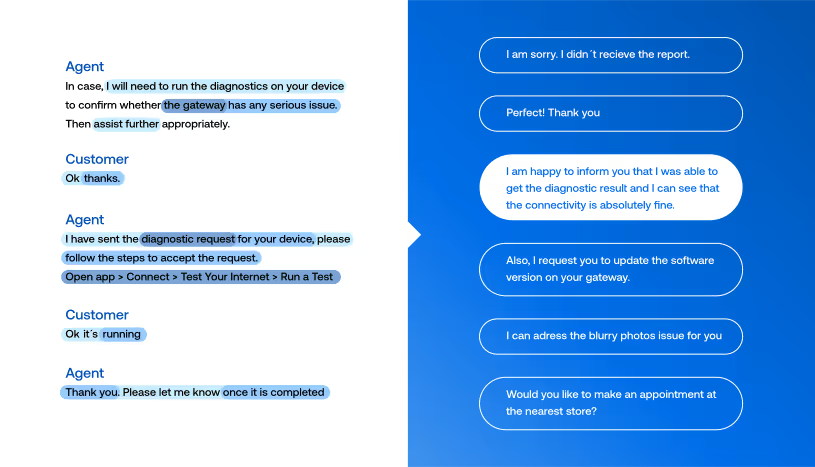

At ASAPP we develop AI models to improve agent performance in the contact center. Many of these models directly assist contact center agents by automating parts of their workflow. For example, the automated responses generated by AutoCompose - part of our ASAPPMessaging platform - suggest to an agent what to say at a given point during a customer conversation. Agents often use our suggestions by clicking and sending them.

How we measure performance matters

While usage of the suggestions is a great indicator of whether agents like the features, we’re even more interested in the impact the automation has on performance metrics like agent handle time, concurrency, and throughput. These metrics are ultimately how we measure agent performance when evaluating the impact of a product like the AutoCompose capabilities of ASAPPMessaging. But they can be affected by things beyond AutoCompose usage, like changes in customer intents or poorly planned workforce management.

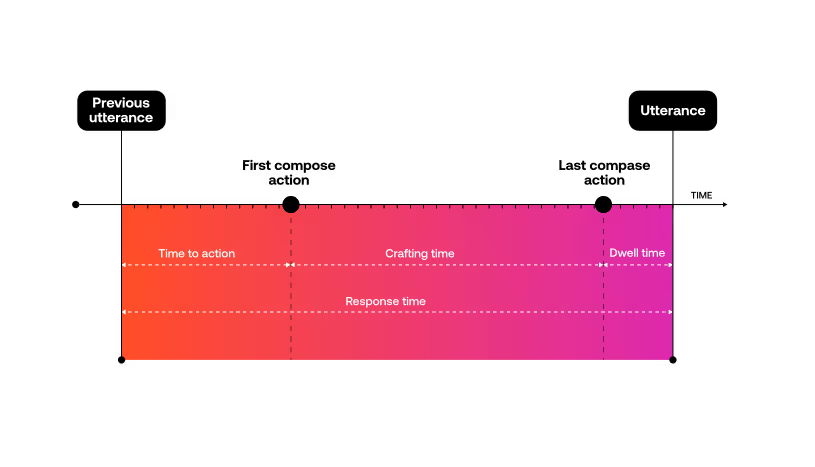

To isolate the impact of AutoCompose usage on agent efficiency, we prefer to measure the specific performance gains from each individual usage of AutoCompose. We do this by measuring the impact of automated responses on agent response time, because response time is less sensitive to intent shifts and organizational effects than handle time, concurrency, and throughput.

By doing this, we can further analyze:

- The types of agent utterances that are most often automated

- The impact of the automated responses when different types of messages are used (in terms of time savings)

Altogether, this enables us to be data-driven about how we improve models and develop new features to have maximum impact.

Going beyond greeting and closing messages

When we train AI models to automate responses for agents, the models look for patterns in the data that can predict what to say next based on past conversation language. So the easiest things for models to learn well are the types of messages that occur often and without much variation across different types of conversations, e.g. greetings and closings. Agents typically greet and end a conversation with a customer the same way, perhaps with some specificity based on the customer’s intent.

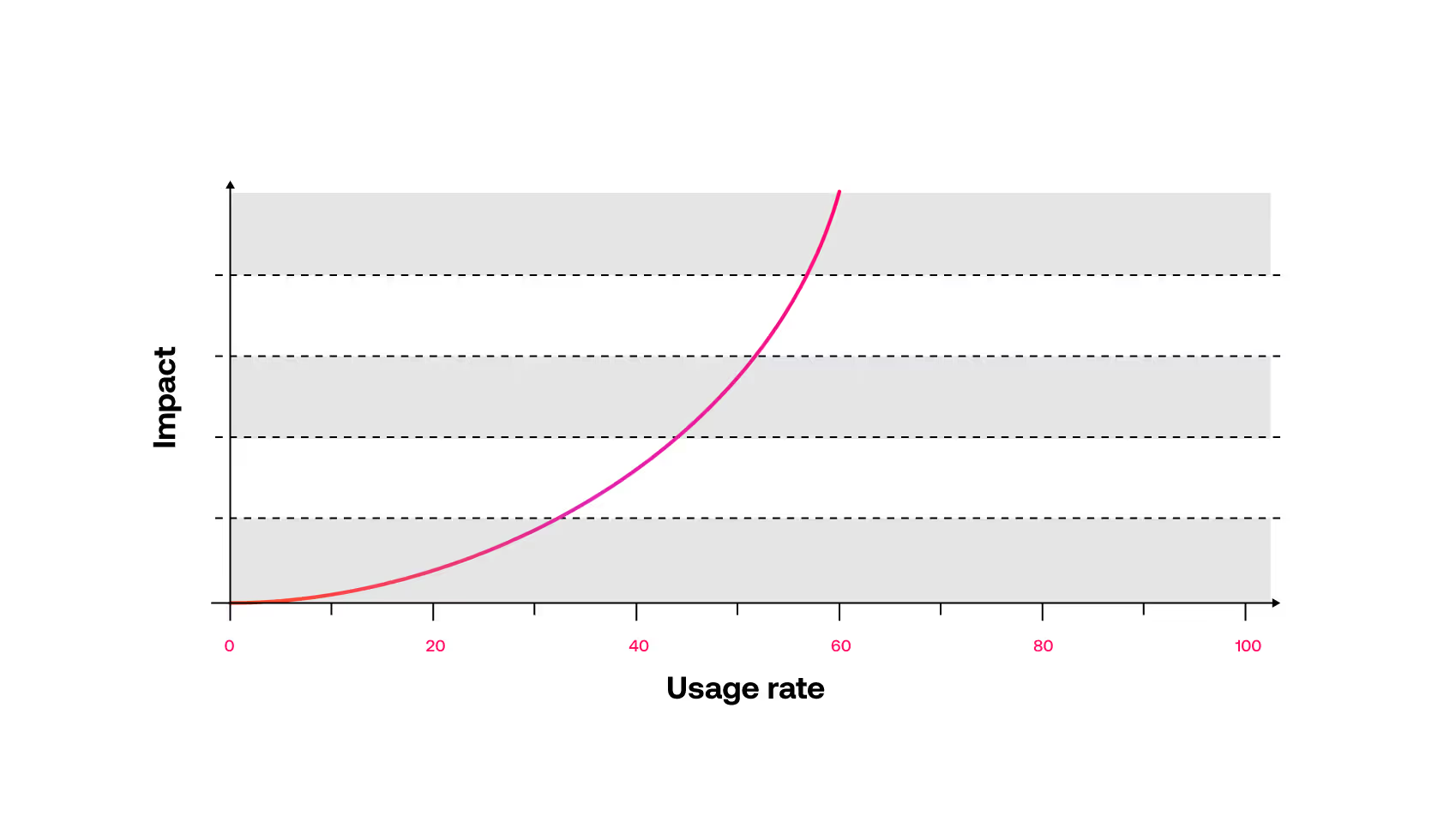

Most AI-driven automated response products will suggest greeting and closing messages correctly and at the right point in the conversation. These messages typically account for the first 10-20% of automated response usage. But when we evaluate the impact of automating those types of messages, we see that it’s minimal.

To understand this, let’s look at how we measure impact. We compare agents’ response times when using automated responses against their response times when not using automated responses. The difference in time is the impact—it’s the time savings we can credit to the automation.
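As a rough illustration of that comparison, here is a minimal Python sketch. It assumes a hypothetical log of agent messages, each tagged with a message type, whether a suggestion was used, and the response time in seconds. The record layout, the function name `time_savings_by_type`, and every number are made up for illustration, and a plain difference of means is a simplification rather than a description of our production analysis.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical log records: (message_type, used_suggestion, response_time_seconds).
# All values are invented for illustration only.
records = [
    ("greeting", True, 1.0),
    ("greeting", False, 2.0),
    ("greeting", False, 2.0),
    ("middle", True, 8.0),
    ("middle", True, 12.0),
    ("middle", False, 30.0),
    ("middle", False, 40.0),
]


def time_savings_by_type(records):
    """Per message type: mean response time without automation minus mean with it."""
    times = defaultdict(lambda: {True: [], False: []})
    for message_type, used_suggestion, seconds in records:
        times[message_type][used_suggestion].append(seconds)

    savings = {}
    for message_type, groups in times.items():
        # A comparison needs at least one observation in each group.
        if groups[True] and groups[False]:
            savings[message_type] = mean(groups[False]) - mean(groups[True])
    return savings


savings = time_savings_by_type(records)
for message_type, seconds_saved in savings.items():
    print(f"{message_type}: {seconds_saved:.1f} seconds saved per message")
```

Grouping by message type keeps frequently used but low-value suggestions from being confused with the ones that actually save time.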

Without automation, agents are not manually typing greeting and closing messages for every conversation. Rather, they’re copying and pasting from Notepad or Word documents containing their favorite messages. Agents are effective at this because they do it several times per conversation. They know exactly where their favorite messages are located, and they can quickly copy and paste them into their chat window. Each greeting or closing message might take an agent 2 seconds. When we automate those types of messages, all we are actually automating is the 2-second copy/paste. So when we see automation rates of 10-20%, we are likely only seeing a minimal impact on agent performance.

The impact lies in automating the middle of the conversation.

If automating the beginnings and endings of conversations is not that impactful, what is?

Automating the middle of the conversation is where response times are naturally slowest and where automation can yield the most agent performance impact.

- Heather Reed, Product Manager, ASAPP

The agent may not know exactly what to say next, requiring time to think or look up the right answers. It’s unlikely that the agent has a script readily available for copying or pasting. If they do, they are not nearly as efficient as they are with their frequently used greetings and closings.

Where it was easy for AI models to learn the beginnings and endings of conversations, because they most often occur the same way, the exact opposite is true of the middle parts of conversations. Often, this is where the most diversity in dialog occurs. Agents handle a variety of customer problems, and they solve them in a variety of ways. This results in extremely varied language throughout the middle parts of conversations, making it hard for AI models to predict what to say at the right time.

ASAPP’s research delivers the biggest improvements

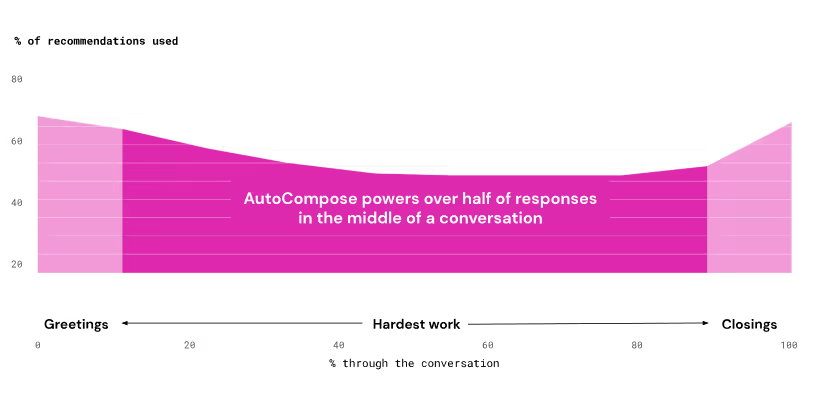

Whole interaction models are exactly what the research team at ASAPP specializes in developing. And it’s the reason that AutoCompose is so effective. If we look at AutoCompose usage rates throughout a conversation, we see that while there is a higher usage at the beginnings and endings of conversations, AutoCompose still automates over half of agent responses in between.

The slow response times in the middle of conversations are where we see the biggest improvements in agent response time. It’s also where the biggest opportunities for improvements are realized.

Whole interaction automation shows widespread improvements

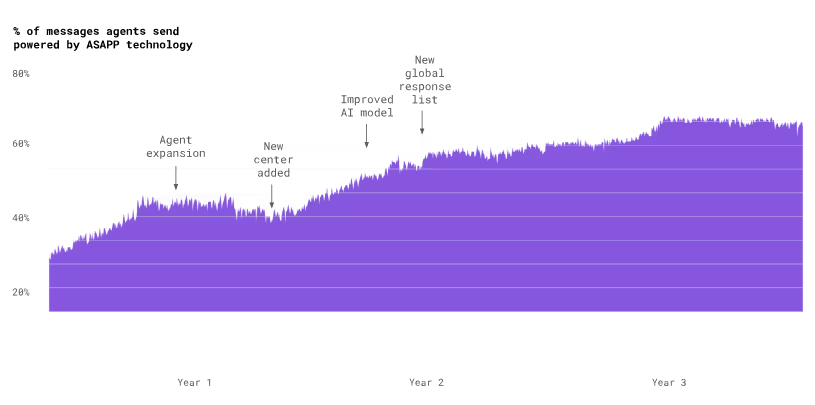

ASAPP’s current automated response rate is about 80%. It has taken a lot of model improvements, new features, and user-tested designs to get there. But now agents effectively use our automated responses in the messaging platform to reduce handle times by 25%, enabling a 15% increase in agent concurrency, for a combined improvement in throughput of 53%. The AI model continues to get better with use, improving its suggestions and becoming more useful to agents and customers.
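To see how the handle time and concurrency figures combine into the throughput figure, here is a short worked calculation. It assumes throughput is proportional to concurrency divided by handle time, which is a simplifying assumption on our part, and the variable names are illustrative.

```python
# Figures quoted above.
handle_time_reduction = 0.25   # handle times are 25% shorter
concurrency_increase = 0.15    # agents handle 15% more conversations at once

# Assumption: throughput scales with concurrency / handle time.
relative_throughput = (1 + concurrency_increase) / (1 - handle_time_reduction)
throughput_gain_pct = (relative_throughput - 1) * 100

print(f"Throughput improvement: {throughput_gain_pct:.0f}%")  # prints "Throughput improvement: 53%"
```

The two gains multiply rather than add, which is why the combined improvement is larger than 25% plus 15%.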
