Trust isn't claimed. It's demonstrated.

At ASAPP, we've spent over a decade building AI for the most demanding contact centers in the world. We know that when enterprises deploy AI in customer conversations, they're putting their brand reputation on the line with every interaction. That responsibility demands more than promises; it demands proof.

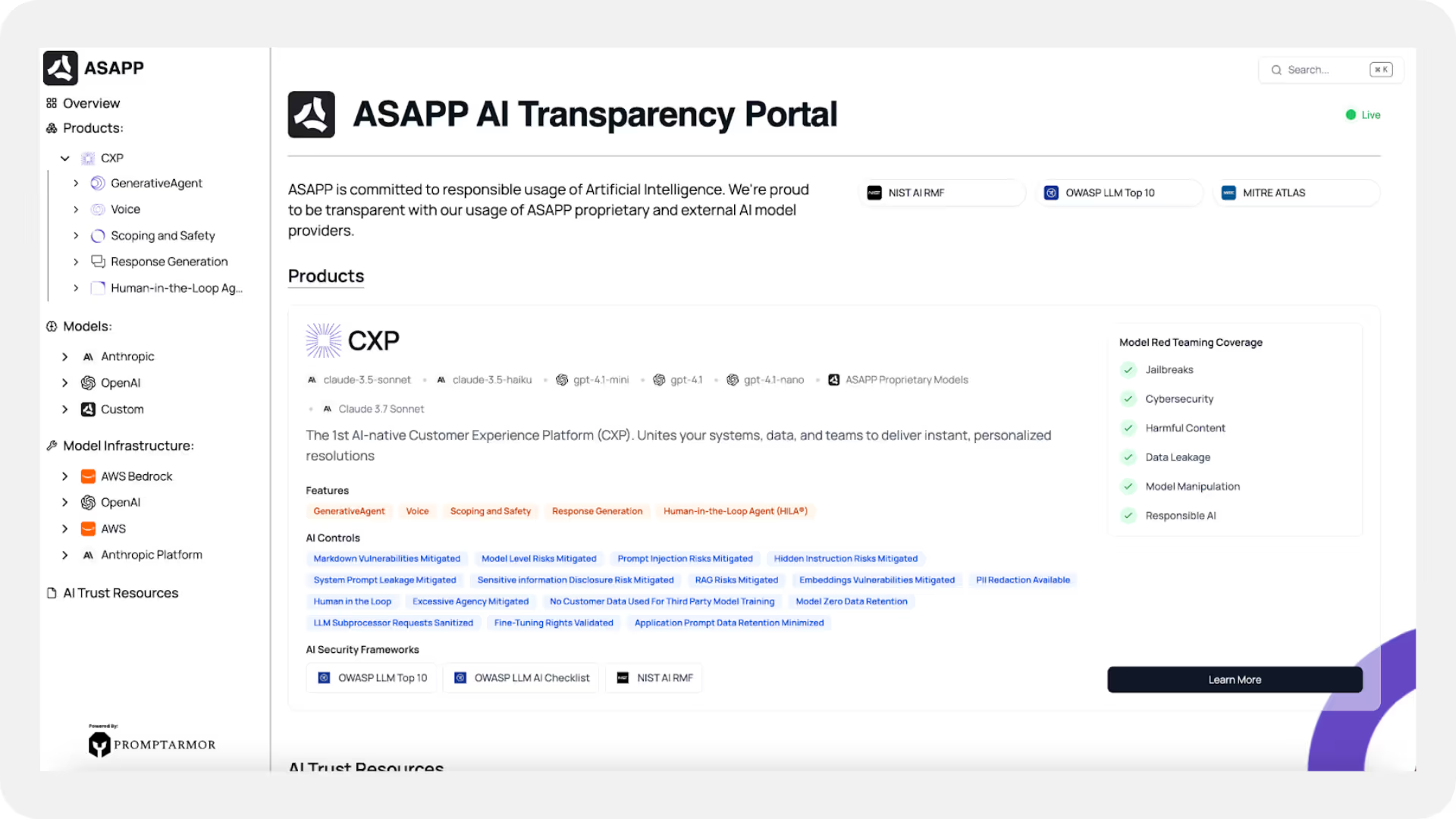

Today, we're launching our AI Transparency Portal, developed in partnership with PromptArmor, to give security and compliance teams exactly that.

Why Did We Build This?

As customer interactions are increasingly driven by autonomous AI agents, expectations around transparency are much higher, not only from regulators but also from our risk-aware customers. Questions like “What models does your platform use? How does our data flow through your systems? What controls do you have against emerging LLM threats like prompt injection?” have become the norm in security assessments.

These are the right questions to ask. We’ve decided to anticipate them and answer them up front in our AI Transparency Portal.

Our Security & Privacy Assurance team noticed early on that AI transparency questions were becoming common in security questionnaires, and sought a solution that would put critical AI-risk information in a convenient, self-explanatory portal. The result is a transparency portal that maps LLM controls against OWASP Top 10 for LLM, NIST AI-RMF, and MITRE ATLAS, while providing clear documentation on data flows, model provenance, and security architecture.

What Makes ASAPP Different

We're not a startup that bolted an LLM onto an existing product and called it new. ASAPP was founded as an AI-native company. We've been building and refining models for contact centers since our inception, and we currently hold more than 60 issued patents in that space. Our use of LLMs within our platform architecture is deliberately hybrid:

- We leverage frontier LLMs for conversational capability while maintaining purpose-built, proprietary AI models for speech recognition, knowledge retrieval, and predictive analytics.

- Our proprietary models are trained on domain-specific data and optimized for the precision that enterprise contact centers require.

- We orchestrate LLMs in proprietary ways to build powerful AI agents that autonomously complete complex workflows across a variety of systems and tools.

Why does this matter?

It matters because our customers need to assess risk properly. We can articulate precisely which capabilities are powered by which models, what data each component processes, and how each is governed. That clarity is what modern AI governance demands.

What's in the AI Transparency Portal

The ASAPP AI Transparency Portal provides:

- Inventory of AI Assets: AI capabilities, model types, and providers

- Framework Mapping: Alignment of LLM controls with OWASP Top 10 for LLM, NIST AI-RMF, and MITRE ATLAS

- Data Flow Diagrams: Visual data flows from ingestion to outputs

- Security Controls: Built-in defenses against LLM-specific threats such as jailbreaks and harmful content generation

- Governance Practices: Our model evaluation and monitoring processes

- References: Links to related articles, blogs, our security trust center, and technical documentation

For Security and Compliance Teams

If you're evaluating AI vendors, you know the challenge: AI security questionnaires are proliferating, frameworks are still maturing, and every vendor claims to be "responsible." We built this portal to cut through that noise and give you actionable information upfront.

Access the portal at trust.asapp.com/ai