AI systems carry considerations that traditional software does not. A key difference is maintenance cost: most of the cost of an AI system is incurred after the code has been deployed, because ML models degrade over time without ongoing investment in data and hyperparameter tuning.

The cost structure of an AI system is directly affected by design decisions about how the system learns; the level of service and the improvement over time are categorically different across levels. Knowing the level of an AI system can help practitioners and customers predict how the system will change over time: whether it will continuously improve, remain the same, or even degrade.

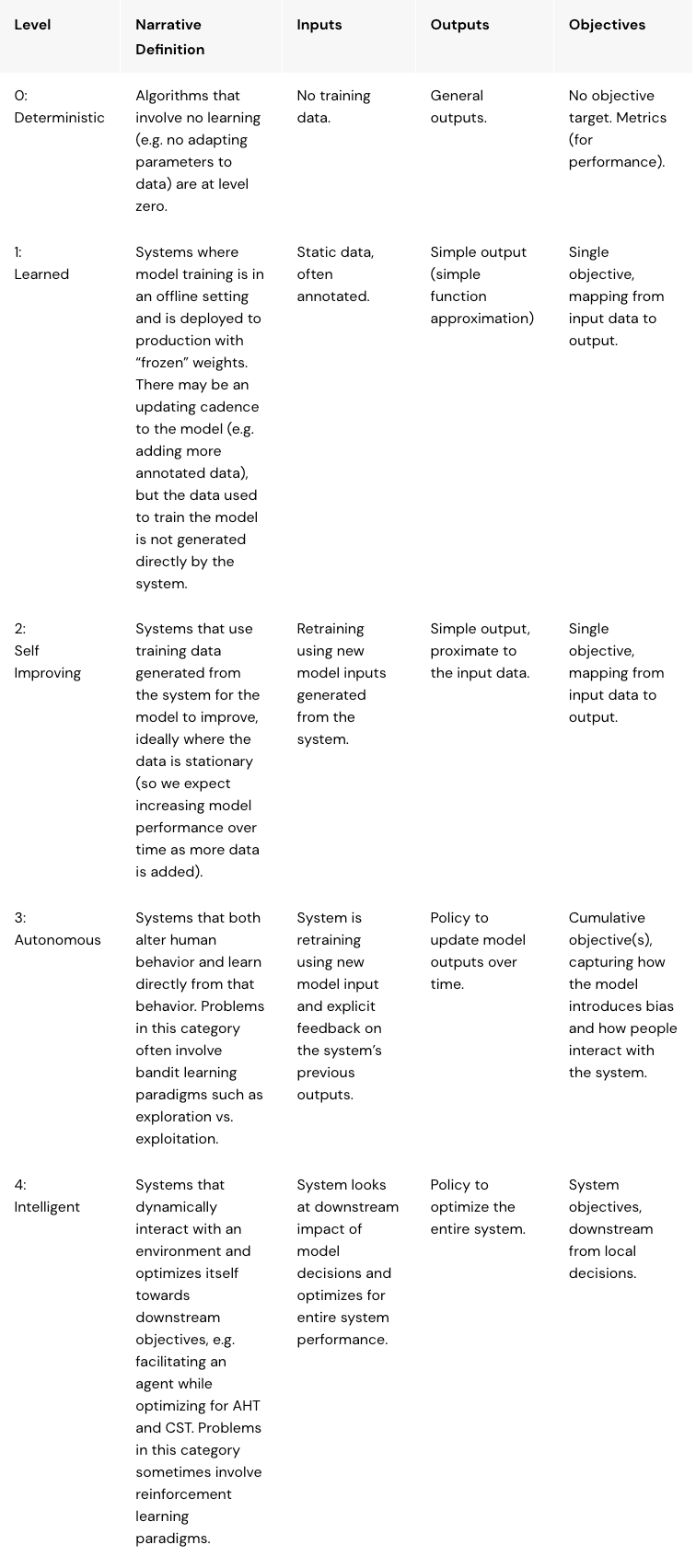

Levels of AI Systems start at traditional software (Level 0) and progress up to fully Intelligent software (Level 4). Systems at Level 4 essentially maintain and improve on their own – they require negligible work. At ASAPP we call Level 4 AI Native®.

Moving up a level has trade-offs for practitioners and customers. For example, moving from Level 1 to Level 2 reduces ongoing data requirements and customization work, but introduces a self-reinforcing bias problem that could cause the system to degrade over time. Choosing to move up a level requires practitioners to recognize the new challenges, and the actions to take in designing an AI system.

While there are significant benefits in scalability (and typically in performance, robustness, and so on) in moving up levels, it’s important to say that most systems are best designed at Level 0 or Level 1. These levels are the most predictable: performance should remain roughly stable over time, and there are obvious mechanisms to improve performance (e.g. for Level 1, add more annotated training data).

AI Levels

Designing AI systems is different from traditional software development because the behavior of the system is learned, and can potentially change over time once deployed. When practitioners build AI systems, it can be useful to talk about their “level”, just as SAE defines levels of automation for self-driving cars.

Moving up a level has trade-offs for practitioners and customers. This requires practitioners to recognize the new challenges, and the actions to take in designing an AI system.

Michael Griffiths, Senior Director, Data Science, ASAPP

Level 0: Deterministic

No required training data, no required testing data

Algorithms that involve no learning (e.g. adapting parameters to data) are at level zero.

The great benefit of Level 0 (traditional algorithms in computer science) is that such algorithms are very reliable and, if they solve the problem, can be shown to be the optimal solution. If you can solve a problem at Level 0, it’s hard to beat. In some respects, all algorithms, even sorting algorithms (like quicksort), are “adaptive” to the data. We do not generally consider sorting algorithms to be “learning”. Learning involves memory: the system changes how it behaves in the future based on what it has learned in the past.

The downside is that some problems defy a pre-specified algorithmic solution. For problems that defy human understanding, whether in a single instance or because of the sheer number of cases, it can be difficult to perform well (e.g. speech to text, translation, image recognition, utterance suggestion).

Examples:

- Luhn Algorithm for credit card validation

- Regex-based systems (e.g. simple redaction systems for credit card numbers).

- Information retrieval algorithms like TF-IDF retrieval or BM25.

- Dictionary-based spell correction.
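As a rough illustration of what Level 0 looks like in practice, the sketch below combines two of the examples above: a Luhn checksum and a regex-based redaction step. The pattern (13 to 19 digits with optional space or hyphen separators), the function names, and the mask string are assumptions made for this example, not a production redaction rule.

```python
import re


def luhn_is_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    if len(digits) < 2:
        return False
    total = 0
    # Walk from the rightmost digit and double every second digit.
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


# Assumed pattern: 13 to 19 digits, optionally separated by spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def redact_card_numbers(text: str, mask: str = "[REDACTED]") -> str:
    """Mask digit runs that look like card numbers and pass the Luhn check."""
    def replace(match: re.Match) -> str:
        return mask if luhn_is_valid(match.group()) else match.group()

    return CARD_PATTERN.sub(replace, text)
```

Nothing here is learned from data: the same input always produces the same output, and the behavior can be verified by reading the code.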

Note: In some cases, there can be a small number of parameters to tune. For example, Elasticsearch provides the ability to modify the BM25 parameters (k1 and b). We can regard these as tuning parameters that are set once and then left alone, rather than learned. This is a blurry line.
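To make the "set and forget" point concrete, here is a minimal sketch of one common formulation of Okapi BM25, in which k1 controls term-frequency saturation and b controls document-length normalization. The function name and the tokenized-list input format are assumptions for this example; search engines implement their own variants, so the numbers will not necessarily match any particular product.

```python
import math
from collections import Counter


def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Score each tokenized document against a tokenized query with BM25."""
    n_docs = len(documents)
    avg_len = sum(len(doc) for doc in documents) / n_docs
    # Document frequency: number of documents that contain each term.
    doc_freq = Counter(term for doc in documents for term in set(doc))
    scores = []
    for doc in documents:
        term_counts = Counter(doc)
        score = 0.0
        for term in query:
            tf = term_counts[term]
            if tf == 0:
                continue
            # Smoothed IDF; the "1 +" keeps the value non-negative.
            idf = math.log(1 + (n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5))
            length_norm = 1 - b + b * len(doc) / avg_len
            score += idf * tf * (k1 + 1) / (tf + k1 * length_norm)
        scores.append(score)
    return scores
```

The two parameters change the ranking function, but they are chosen by a person rather than fitted to labeled data, which is why such a system still sits close to Level 0.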

Level 1: Learned

Static training data, static testing data

Systems where you train the model in an offline setting and deploy to production with “frozen” weights. There may be an updating cadence to the model (e.g. adding more annotated data), but the environment the model operates in does not affect the model.
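A toy sketch of the "train offline, deploy frozen" pattern is shown below. The word-count classifier, the function names, and the use of read-only mappings to represent frozen weights are all assumptions chosen to keep the example small; a real Level 1 system would use a proper model and serialization format.

```python
from collections import Counter, defaultdict
from types import MappingProxyType


def train(examples):
    """Offline step: count word occurrences per label from annotated examples."""
    counts = defaultdict(Counter)
    for text, label in examples:
        counts[label].update(text.lower().split())
    # Freeze the learned weights as read-only mappings.
    return MappingProxyType(
        {label: MappingProxyType(dict(c)) for label, c in counts.items()}
    )


def predict(weights, text):
    """Online step: score each label by word overlap; never modifies weights."""
    words = text.lower().split()
    return max(
        sorted(weights),  # sorted for deterministic tie-breaking
        key=lambda label: sum(weights[label].get(w, 0) for w in words),
    )


annotated = [
    ("refund my order", "billing"),
    ("charge on my card", "billing"),
    ("reset my password", "account"),
    ("cannot log in", "account"),
]
frozen_weights = train(annotated)
```

Improving this model means going back to the offline step with more annotated data and redeploying; nothing that happens in production changes `frozen_weights`.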

The benefit of level 1 is that you can learn and deploy any function at the modest cost of some training data. This is a great place to experiment with different types of solutions. And, for problems with common elements (e.g. speech recognition) you can benefit from diminishing marginal costs.

The downside is that the cost of customization is linear in the number of use cases: you need to curate training data for each one. Use cases also change over time, so you need to keep adding annotations to preserve performance. This cost can be hard to bear.

Examples:

- Custom text classification models

- Speech to text (acoustic model)

Level 2: Self-learning

Dynamic + static training data, static testing data

Systems that use training data generated from the system for the model to improve. In some cases, the data generation is independent of the model (so we expect increasing model performance over time as more data is added); in other cases, the model’s interventions can reinforce model biases and performance can get worse over time. To guard against reinforcing biases, practitioners need to evaluate new models on static (potentially annotated) data sets.
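One way to picture the static-evaluation safeguard is a promotion gate: a model retrained on system-generated data replaces the current one only if it does not regress on a fixed evaluation set. The sketch below assumes models are plain callables and uses accuracy as the metric; the function names and the `tolerance` parameter are hypothetical.

```python
def accuracy(model, dataset):
    """Fraction of (input, label) pairs the model predicts correctly."""
    correct = sum(1 for x, y in dataset if model(x) == y)
    return correct / len(dataset)


def promote_if_not_worse(current, candidate, static_eval_set, tolerance=0.0):
    """Return (model, accuracy), keeping the current model if the candidate regresses."""
    current_acc = accuracy(current, static_eval_set)
    candidate_acc = accuracy(candidate, static_eval_set)
    if candidate_acc + tolerance >= current_acc:
        return candidate, candidate_acc
    return current, current_acc
```

Because the evaluation set is static and independent of the model's own outputs, a candidate that has merely learned to agree with its predecessor's mistakes gains nothing on this check.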

Level 2 is great because performance seems to improve over time for free. The downside is that, left unattended, the system can get worse: it may not consistently get better with more data. The other limitation is that some systems at Level 2 may have limited capacity to improve because they essentially feed on themselves (generating their own training data); addressing this bias can be challenging.

Examples:

- Naive spam filters

- Common speech to text models (language model)

Level 3: Autonomous (or self-correcting)

Dynamic training data, dynamic test data

Systems that both alter human behavior (e.g. recommend an action and let the user opt in) and learn directly from that behavior, including how the system’s choices change user behavior. Moving from Level 2 to 3 potentially represents a big increase in system reliability and total achievable performance.

Level 3 is great because it can consistently get better over time. However, it is more complex: it might require truly staggering amounts of data, or a very carefully designed setup, to do better than simpler systems; its ability to adapt to the environment also makes it very hard to debug. It is also possible to have truly catastrophic feedback loops. For example, a human corrects an email spam filter – however, because the human can only ever correct misclassifications that the system made, it learns that all its predictions are wrong and inverts its own predictions.
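The spam-filter failure mode can be reproduced with a few lines of simulation. The sketch below assumes a user who only gives feedback when the filter is wrong, then compares two ways of reading that feedback. The function names are hypothetical, and treating silence as confirmation is itself an assumption that may not hold if users do not review every message.

```python
def feedback_log(predictions, true_labels):
    """Simulate a user who only speaks up when the filter is wrong."""
    log = []
    for predicted, actual in zip(predictions, true_labels):
        corrected = actual if predicted != actual else None  # None means no feedback
        log.append((predicted, corrected))
    return log


def agreement_from_corrections_only(log):
    """Biased estimate: looks only at the items the user corrected."""
    corrected = [(p, c) for p, c in log if c is not None]
    if not corrected:
        return None
    return sum(p == c for p, c in corrected) / len(corrected)


def agreement_with_implicit_confirmations(log):
    """Treats silence as (weak) confirmation that the prediction was right."""
    return sum(c is None or p == c for p, c in log) / len(log)


predictions = ["ham"] * 9 + ["spam"]
truth = ["ham"] * 10
log = feedback_log(predictions, truth)
```

In this run, the corrections-only view reports 0% agreement even though the filter was right on nine of ten messages, which is exactly the signal that would push a naive learner to invert its predictions.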

Level 4: Intelligent (or globally optimizing)

Dynamic training data, dynamic test data, dynamic goal

Systems that both dynamically interact with an environment and globally optimize towards some set of downstream objectives, e.g. facilitating an agent while optimizing for average handle time (AHT) and customer satisfaction (CSAT), or optimizing directly for profit. For example, an AutoCompose system that optimizes for the best series of clicks to optimize the conversation.

Level 4 can be very attractive. However, it is not always obvious how to get there, and unless carefully designed, these systems can optimize towards degenerate solutions. Aiming them at the right problem, shaping the reward, and auditing their behavior are large and non-trivial tasks.
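A small sketch can show why reward shaping matters. Below, three hypothetical conversation strategies are scored first on AHT alone and then on a shaped reward that also values CSAT and penalizes scores under a floor. The strategy names, all of the numbers, and the weights are invented purely for illustration; they are not measurements from any real system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    name: str
    aht_seconds: float  # average handle time; lower is better
    csat: float         # customer satisfaction on a 1-5 scale; higher is better


def aht_only_reward(outcome: Outcome) -> float:
    """Naive objective: shorter conversations are always better."""
    return -outcome.aht_seconds


def shaped_reward(outcome: Outcome, csat_weight: float = 300.0,
                  min_csat: float = 3.0) -> float:
    """Hypothetical shaping: trade AHT against CSAT, with a penalty below a CSAT floor."""
    penalty = 1000.0 if outcome.csat < min_csat else 0.0
    return -outcome.aht_seconds + csat_weight * outcome.csat - penalty


# Illustrative, made-up outcomes for three strategies.
candidates = [
    Outcome("end_chat_immediately", aht_seconds=20.0, csat=1.2),
    Outcome("resolve_quickly", aht_seconds=240.0, csat=4.4),
    Outcome("resolve_thoroughly", aht_seconds=420.0, csat=4.6),
]

best_naive = max(candidates, key=aht_only_reward)
best_shaped = max(candidates, key=shaped_reward)
```

Under the AHT-only objective the degenerate strategy of ending the chat immediately wins, while the shaped reward prefers resolving the issue quickly. Choosing the weights and the floor is the hard part, and the result still needs auditing.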

Why consider levels?

Designing and building AI systems is difficult. A core part of that difficulty is understanding how they change over time (or don’t change!): how the performance, and maintenance cost, of the system will develop.

In general, value increases as you move up levels (for example, one goal might be to move a system operating at Level 1 to Level 2), but the complexity and cost of building the system also increase. It can make a lot of sense to start a novel feature at a “low” level, where the system behavior is well understood, and progressively raise the level, because the failure cases of the system become harder to understand as the level increases.

The focus should be on learning about the problem and the solution space. Lower levels are more consistent and can be much better avenues to explore possible solutions than higher levels, whose cost and variability in performance can be large hindrances.

This set of levels provides some core breakpoints for how different AI systems can behave. Employing these levels – and making trade-offs between levels – can help provide a shorthand for differences post-deployment.
