Natural language classification is widely adopted in many applications, such as user intent prediction, recommendation systems, and information retrieval. At ASAPP, natural language classification is a core technique behind our automation capabilities.

Conventional classification takes a single-step user query and returns a prediction. However, natural language input from consumers can be underspecified and ambiguous, for example when they are not experts in the domain.

Natural language input can be hard to classify, but it’s critical to get classification right for accurate automation. Our research goes beyond conventional methods, and builds systems that interact with users to give them the best outcome.

Yoav Artzi

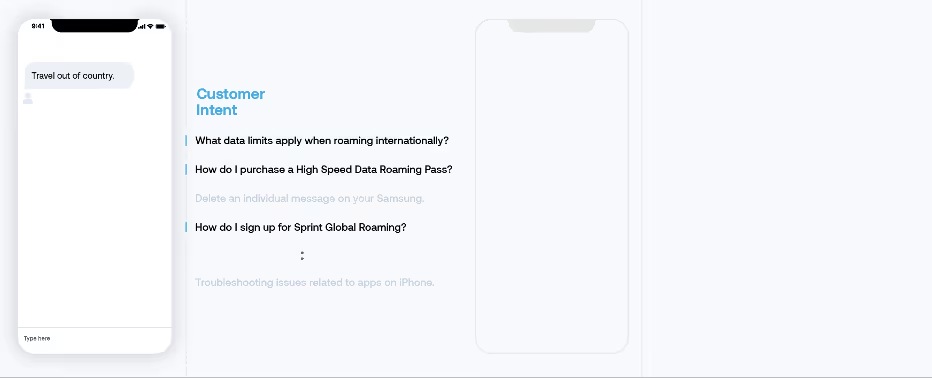

For example, in an FAQ suggestion application, a user may issue the query “travel out of country”. The classifier will likely find multiple relevant FAQ candidates, as seen in the figure below. In such scenarios, a conventional classifier will just return one of the predictions, even if it is uncertain and the prediction may not be ideal.

We solve this challenge by collecting missing information from users to reduce ambiguity and improve the model prediction performance. My colleagues Lili Yu, Howard Chen, Sida Wang, Tao Lei and I described our approach in our ACL 2020 paper.

We take a low-overhead approach, and add limited interaction to intent classification. Our goal is two-fold:

- study the effect of interaction on the system performance, and

- avoid the cost and complexities of interactive data collection.

We add simple interactions on top of natural language intent classification, with minimal development overhead, by asking clarification questions to collect missing information. Those questions can be binary or multi-choice. For example, the question “Do you have an online account?” is binary, with “yes” or “no” as answers, while the question “What is your phone operating system?” is multi-choice, with “iOS”, “Android” or “Windows” as answers. Given a question, the user responds to the system by selecting one answer from the set. At each turn, the system determines whether to ask an informative question or to return the best prediction to the consumer.
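As a rough illustration of the two question types, a clarification question can be represented as a prompt plus a closed set of answers. This is a minimal sketch, not our production code: the `ClarificationQuestion` class and its `is_binary` and `validate` members are hypothetical names introduced only for this example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ClarificationQuestion:
    """Hypothetical container: a question text plus its closed answer set."""
    text: str
    answers: Tuple[str, ...]

    @property
    def is_binary(self) -> bool:
        # A binary question offers exactly the answers "yes" and "no".
        return set(self.answers) == {"yes", "no"}

    def validate(self, response: str) -> str:
        # The user must select one answer from the predefined set.
        if response not in self.answers:
            raise ValueError(f"{response!r} is not one of {self.answers}")
        return response


account_q = ClarificationQuestion("Do you have an online account?", ("yes", "no"))
phone_q = ClarificationQuestion(
    "What is your phone operating system?", ("iOS", "Android", "Windows")
)
```

Restricting responses to a fixed answer set is what keeps the interaction lightweight: the system never has to interpret a free-form reply.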

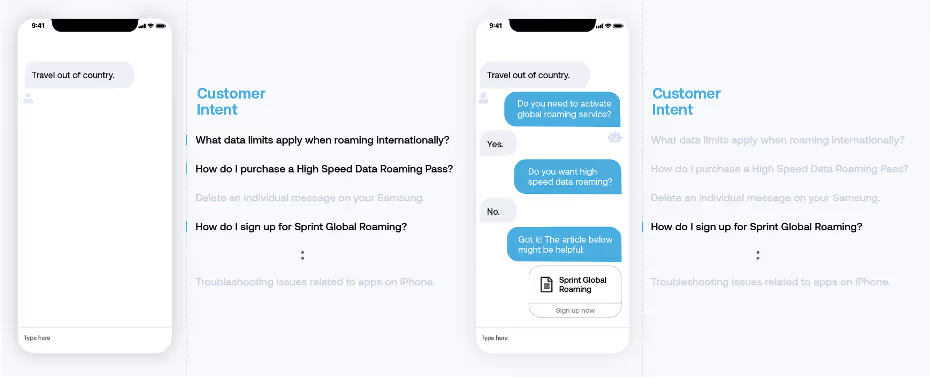

The illustration above shows a running example of interactive classification in the FAQ suggestion domain. The consumer interacts with the system to find an intent from a list of possibilities. The interaction starts with the consumer’s initial query, “travel out of country”. As our system finds multiple good possible responses, highlighted on the right, it decides to ask a clarification question, “Do you need to activate global roaming service?” When the user responds with “yes”, the answer helps the system narrow down the candidate responses. After two rounds of interaction, a single good response is identified. Our system concludes the interaction by suggesting the FAQ document to the user. This is one full interaction, with the consumer’s initial query, system questions, consumer responses, and the system’s final response.

We select clarification questions to maximize the interaction efficiency, using an information gain criterion. Intuitively, we select the question whose answer is expected to provide the most information about the intent label. After receiving the consumer’s answer, we update the beliefs over intent labels using Bayes’ rule, and repeat this at every turn. Moreover, we balance the potential increase in accuracy against the cost of asking additional questions with a learned policy controller that decides whether to ask another question or return the final prediction.
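The selection and update steps can be sketched on a toy example. This is an illustrative sketch under stated assumptions, not the model from the paper: the intent labels, the prior, and the hand-written `LIKELIHOOD` table of p(answer | intent, question) are all made up here, and a fixed confidence `threshold` stands in for the learned policy controller.

```python
import math

# Toy, hand-specified numbers (assumptions for illustration only).
PRIOR = {"activate_roaming": 0.40, "roaming_rates": 0.35, "suspend_service": 0.25}
LIKELIHOOD = {  # question -> intent -> answer -> p(answer | intent, question)
    "Do you need to activate global roaming service?": {
        "activate_roaming": {"yes": 0.90, "no": 0.10},
        "roaming_rates": {"yes": 0.20, "no": 0.80},
        "suspend_service": {"yes": 0.05, "no": 0.95},
    },
    "Do you have an online account?": {
        "activate_roaming": {"yes": 0.5, "no": 0.5},
        "roaming_rates": {"yes": 0.5, "no": 0.5},
        "suspend_service": {"yes": 0.5, "no": 0.5},
    },
}


def bayes_update(belief, question, answer):
    """Posterior over intents: p(y | a) is proportional to p(a | y, q) * p(y)."""
    unnorm = {y: p * LIKELIHOOD[question][y].get(answer, 0.0) for y, p in belief.items()}
    total = sum(unnorm.values())
    if total == 0.0:  # answer impossible under the table: keep the old belief
        return dict(belief)
    return {y: v / total for y, v in unnorm.items()}


def entropy(belief):
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)


def information_gain(belief, question):
    """Expected drop in entropy of the intent belief after seeing the answer."""
    answers = {a for y in belief for a in LIKELIHOOD[question][y]}
    gain = entropy(belief)
    for a in answers:
        p_a = sum(belief[y] * LIKELIHOOD[question][y].get(a, 0.0) for y in belief)
        if p_a > 0:
            gain -= p_a * entropy(bayes_update(belief, question, a))
    return gain


def select_question(belief, asked):
    candidates = [q for q in LIKELIHOOD if q not in asked]
    return max(candidates, key=lambda q: information_gain(belief, q), default=None)


def interact(belief, answer_fn, threshold=0.8, max_turns=5):
    """Ask until confident enough; the threshold replaces the learned policy."""
    asked = set()
    for _ in range(max_turns):
        if max(belief.values()) >= threshold:
            break
        question = select_question(belief, asked)
        if question is None:
            break
        asked.add(question)
        belief = bayes_update(belief, question, answer_fn(question))
    return max(belief, key=belief.get), belief


# Simulated user who answers "yes" to every question.
prediction, final_belief = interact(PRIOR, lambda question: "yes")
```

In this toy table the online-account question has the same answer distribution for every intent, so its information gain is essentially zero and the roaming question is asked first.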

We designed two non-interactive data collection tasks to train different model components. This allows us to crowdsource the data at large scale and build a robust system at low cost. Our modeling approach leverages natural language encoding, and enables us to handle unseen intents and unseen clarification questions, further alleviating the need for expensive annotations and improving the scalability of our model.

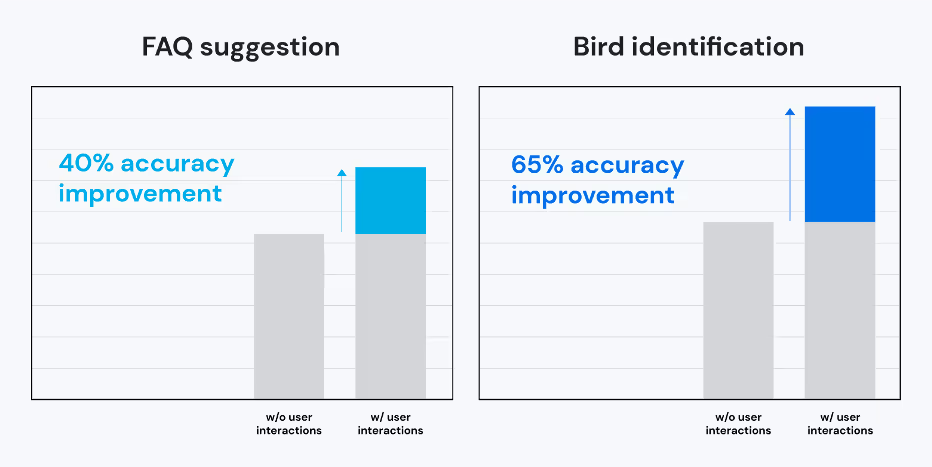

Our work demonstrates the power of adding user interaction in two tasks: FAQ suggestion and bird identification. The FAQ task provides a troubleshooting FAQ suggestion given a user query in a virtual assistant application. The bird identification task helps identify bird species from a descriptive text query about bird appearance. Given at most five turns of interaction, our approach improves the accuracy of a no-interaction baseline by over 100% on both tasks in simulated evaluation, and by over 90% in human evaluation, where real users interact with our system. Even a single clarification question provides significant accuracy improvements: 40% for FAQ suggestion and 65% for bird identification in our simulated analysis.

This work allows us to quickly build an interactive classification system that improves customer experience by offering significantly more accurate predictions. It highlights how research and product complement each other at ASAPP: challenging product problems inspire interesting research ideas, and original research solutions improve product performance. Together with other researchers, Lili Yu is organizing the first workshop on interactive learning for natural language processing at the upcoming ACL 2021 conference to further discuss the methods, evaluation, and scenarios of interactive learning.